College of Engineering News

-

CXL-Based Memory Disaggregation Technology Opens U..

A KAIST team’s compute express link (CXL) solution provides new insights into memory disaggregation and ensures direct access and high-performance capabilities

A team from the Computer Architecture and Memory Systems Laboratory (CAMEL) at KAIST presented a new compute express link (CXL) solution whose directly accessible, high-performance memory disaggregation opens new directions for big data memory processing. Professor Myoungsoo Jung said the team’s technology significantly improves performance compared to existing remote direct memory access (RDMA)-based memory disaggregation.

CXL is a new dynamic multi-protocol built on peripheral component interconnect express (PCIe) for efficiently utilizing memory devices and accelerators. Many enterprise data centers and memory vendors are paying attention to it as the next-generation multi-protocol for the era of big data.

Emerging big data applications such as machine learning, graph analytics, and in-memory databases require large memory capacities. However, scaling out memory capacity via a conventional memory interface such as double data rate (DDR) is limited by the number of central processing units (CPUs) and memory controllers. Memory disaggregation, which allows a host to connect to another host’s memory or to dedicated memory nodes, has emerged to overcome this limit.

RDMA is a way for a host to directly access another host’s memory via InfiniBand, the network protocol commonly used in data centers. Most existing memory disaggregation technologies employ RDMA to obtain a large memory capacity: a host shares another host’s memory by transferring data between local and remote memory. Although RDMA-based memory disaggregation provides a large memory capacity to a host, it has two critical problems. First, scaling out the memory still requires adding an extra CPU. Since passive memory such as dynamic random-access memory (DRAM) cannot operate by itself, it must be controlled by a CPU.
Second, redundant data copies and software fabric interventions in RDMA-based memory disaggregation lengthen access latency. For example, remote memory access latency in RDMA-based memory disaggregation is multiple orders of magnitude longer than local memory access latency.

To address these issues, Professor Jung’s team developed a CXL-based memory disaggregation framework that includes CXL-enabled customized CPUs, CXL devices, CXL switches, and CXL-aware operating system modules. The team’s CXL device is a purely passive, directly accessible memory node that contains multiple DRAM dual inline memory modules (DIMMs) and a CXL memory controller. Because the CXL memory controller manages the memory in the CXL device, a host can use the memory node without processor or software intervention. The team’s CXL switch scales out a host’s memory capacity by hierarchically connecting multiple CXL devices, accommodating hundreds of devices or more. Atop the switches and devices, the team’s CXL-enabled operating system removes the redundant data copies and protocol conversion incurred by conventional RDMA, which can significantly reduce access latency to the memory nodes.

In a test comparing 64-byte (cacheline) data loads from memory pooling devices, CXL-based memory disaggregation showed 8.2 times higher data load performance than RDMA-based memory disaggregation and performance similar to that of local DRAM. In the team’s evaluations on big data benchmarks such as a machine learning-based test, CXL-based memory disaggregation also showed up to 3.7 times higher performance than prior RDMA-based memory disaggregation technologies.

“Escaping from the conventional RDMA-based memory disaggregation, our CXL-based memory disaggregation framework can provide high scalability and performance for diverse datacenters and cloud service infrastructures,” said Professor Jung.
He went on to stress, “Our CXL-based memory disaggregation research will bring about a new paradigm for memory solutions that will lead the era of big data.”

< Figure 1. A comparison of the architecture between CAMEL’s CXL solution and conventional RDMA-based memory disaggregation. >

< Figure 2. A performance comparison between CAMEL’s CXL solution and prior RDMA-based disaggregation. >

-Profile: Professor Myoungsoo Jung Computer Architecture and Memory Systems Laboratory (CAMEL) http://camelab.org School of Electrical Engineering KAIST
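Hierarchical switching is what lets capacity grow without adding CPUs: every switch level multiplies the number of attachable memory devices. The Python sketch below works through that arithmetic under loudly hypothetical assumptions (each switch has one upstream and 16 downstream ports; each device holds four 64 GiB DIMMs). None of these numbers come from the KAIST framework or from the CXL specification, and the helper names are illustrative only.

```python
# Hypothetical sketch: cascading switches multiplies reachable memory devices.
# Port counts and DIMM sizes are illustrative assumptions, not figures from
# the KAIST framework or the CXL specification.


def reachable_devices(downstream_ports: int, levels: int) -> int:
    """Leaf memory devices reachable through a full tree of switches.

    Each switch is assumed to have one upstream port and `downstream_ports`
    downstream ports; every downstream port on the last level hosts one device.
    """
    if downstream_ports < 1 or levels < 1:
        raise ValueError("need at least one downstream port and one switch level")
    return downstream_ports ** levels


def switches_needed(downstream_ports: int, levels: int) -> int:
    """Switches in that tree: 1 + k + k^2 + ... + k^(levels-1)."""
    return sum(downstream_ports ** i for i in range(levels))


def pooled_capacity_gib(devices: int, dimms_per_device: int, dimm_gib: int) -> int:
    """Total DRAM capacity exposed by the memory devices, in GiB."""
    return devices * dimms_per_device * dimm_gib


if __name__ == "__main__":
    for levels in (1, 2, 3):
        n = reachable_devices(downstream_ports=16, levels=levels)
        s = switches_needed(downstream_ports=16, levels=levels)
        cap = pooled_capacity_gib(n, dimms_per_device=4, dimm_gib=64)
        print(f"{levels} level(s): {s} switches, {n} devices, {cap} GiB")
```

With these assumed values, two switch levels (17 switches) already reach 256 devices, which is the "hundreds of devices" regime the article describes; real port counts and topologies will differ.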

-

KAA Recognizes 4 Distinguished Alumni of the Year

< Distinguished Professor Sukbok Chang, Hyunshil Ahn of the AI Economy Institute at The Korea Economic Daily, PSTech CEO Hwan-ho Sung, and Samsung Electronics President Hark Kyu Park (from left) >

The KAIST Alumni Association (KAA) recognized four distinguished alumni of the year during a ceremony on February 25 in Seoul. The four Distinguished Alumni Awardees are Distinguished Professor Sukbok Chang from the KAIST Department of Chemistry; Hyunshil Ahn, head of the AI Economy Institute and an editorial writer at The Korea Economic Daily; CEO Hwan-ho Sung of PSTech; and President Hark Kyu Park of Samsung Electronics.

Distinguished Professor Sukbok Chang, who received his MS from the Department of Chemistry in 1985, has been a pioneer in the novel field of ‘carbon-hydrogen bond activation reactions’. He has contributed significantly to raising Korea’s international reputation in the natural sciences and received the Kyungam Academic Award in 2013, the 14th Korea Science Award in 2015, the 1st Science and Technology Prize of Korea Toray in 2018, and the Best Scientist/Engineer Award Korea in 2019. Furthermore, he was named a Highly Cited Researcher, ranking in the top 1% of citations by field and publication year in the Web of Science citation index, for seven consecutive years from 2015 to 2021, demonstrating his leadership as a global scholar.

Hyunshil Ahn, a graduate of the School of Business and Technology Management with an MS in 1985 and a PhD in 1987, was appointed the first head of the AI Economy Institute when The Korea Economic Daily became the first Korean media outlet to establish an AI economy lab. He has contributed to creating new roles for the press and media in the 4th industrial revolution, and to popularizing AI technology through regulatory reform and consulting on industrial policies.

PSTech CEO Hwan-ho Sung is a graduate of the School of Electrical Engineering, where he received an MS in 1988 and a PhD in EMBA in 2008.
He has run the electronics company PSTech for over 20 years and successfully localized the production of power equipment, which previously depended on foreign technology. This award recognizes his development of the world’s first power equipment applicable to new industries, including semiconductors and displays.

Samsung Electronics President Hark Kyu Park graduated from the School of Business and Technology Management with an MS in 1986. He not only enhanced Korea’s national competitiveness by expanding the semiconductor industry, but also established contract-based semiconductor departments at Korean universities including KAIST, Sungkyunkwan University, Yonsei University, and POSTECH, and semiconductor track courses at KAIST, Sogang University, Seoul National University, and POSTECH to nurture professional talent. He also led the national semiconductor coexistence system through private sector-government-academia collaborations to strengthen competence in semiconductors, and continues to make unconditional investments in strong small businesses.

KAA President Chilhee Chung said, “Thanks to our alumni contributing at the highest levels of our society, the name of our alma mater shines brighter. As role models for our younger alumni, I hope greater honours will follow our awardees in the future.”

-

Decoding Brain Signals to Control a Robotic Arm

Advanced brain-machine interface system successfully interprets arm movement directions from neural signals in the brain

< Figure: Experimental paradigm. Subjects were instructed to perform reach-and-grasp movements to designate the locations of the target in three-dimensional space. (a) Subjects A and B were provided the visual cue as a real tennis ball at one of four pseudo-randomized locations. (b) Subjects A and B were provided the visual cue as a virtual reality clip showing a sequence of five stages of a reach-and-grasp movement. >

Researchers have developed a mind-reading system for decoding neural signals from the brain during arm movement. The method, described in the journal Applied Soft Computing, can be used by a person to control a robotic arm through a brain-machine interface (BMI).

A BMI is a device that translates nerve signals into commands to control a machine, such as a computer or a robotic limb. There are two main techniques for monitoring neural signals in BMIs: electroencephalography (EEG) and electrocorticography (ECoG). EEG records signals from electrodes on the surface of the scalp and is widely employed because it is non-invasive, relatively cheap, safe, and easy to use. However, EEG has low spatial resolution and picks up irrelevant neural signals, which makes it difficult to interpret an individual’s intentions from EEG recordings. ECoG, on the other hand, is an invasive method that involves placing electrodes directly on the surface of the cerebral cortex below the scalp. Compared with EEG, ECoG can monitor neural signals with much higher spatial resolution and less background noise. However, this technique has several drawbacks.
“The ECoG is primarily used to find potential sources of epileptic seizures, meaning the electrodes are placed in different locations for different patients and may not be in the optimal regions of the brain for detecting sensory and movement signals,” explained Professor Jaeseung Jeong, a brain scientist at KAIST. “This inconsistency makes it difficult to decode brain signals to predict movements.”

To overcome these problems, Professor Jeong’s team developed a new method for decoding ECoG neural signals during arm movement. The decoder combines a machine-learning model for analysing and predicting neural signals, called an ‘echo-state network’, with a mathematical probability model, the Gaussian distribution.

In the study, the researchers recorded ECoG signals from four individuals with epilepsy while they performed a reach-and-grasp task. Because the ECoG electrodes were placed according to the potential sources of each patient’s epileptic seizures, only 22% to 44% of the electrodes were located in the regions of the brain responsible for controlling movement. During the movement task, the participants were given visual cues, either by placing a real tennis ball in front of them or via a virtual reality headset showing a clip of a human arm reaching forward in first-person view. They were asked to reach forward, grasp an object, then return their hand and release the object, while wearing motion sensors on their wrists and fingers. In a second task, they were instructed to imagine reaching forward without moving their arms.

The researchers monitored the signals from the ECoG electrodes during real and imagined arm movements and tested whether the new system could predict the direction of movement from the neural signals. They found that the novel decoder successfully classified arm movements in 24 directions in three-dimensional space, in both the real and virtual tasks, and that the results were at least five times more accurate than chance.
They also used a computer simulation to show that the novel ECoG decoder could control the movements of a robotic arm. Overall, the results suggest that the new machine learning-based BMI system successfully used ECoG signals to interpret the direction of intended movements. The next steps will be to improve the accuracy and efficiency of the decoder. In the future, it could be used in a real-time BMI device to help people with movement or sensory impairments.

This research was supported by the KAIST Global Singularity Research Program of 2021, the Brain Research Program of the National Research Foundation of Korea funded by the Ministry of Science, ICT, and Future Planning, and the Basic Science Research Program through the National Research Foundation of Korea funded by the Ministry of Education.

-Publication Hoon-Hee Kim, Jaeseung Jeong, “An electrocorticographic decoder for arm movement for brain-machine interface using an echo state network and Gaussian readout,” Applied Soft Computing, online December 31, 2021 (doi.org/10.1016/j.asoc.2021.108393) -Profile Professor Jaeseung Jeong Department of Bio and Brain Engineering College of Engineering KAIST
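The article names the decoder’s two ingredients, an echo-state network and a Gaussian readout, without further detail. The Python/NumPy sketch below shows one generic way to combine them: a fixed random reservoir is driven by a multichannel trial, and its final state is classified by class-conditional Gaussians with a pooled diagonal variance. This is not the published decoder; the reservoir size, leak rate, spectral radius, the readout form, and the synthetic "ECoG" trials are all hypothetical. For scale, uniform guessing among 24 directions would be right 1/24 of the time (about 4.2%).

```python
import numpy as np


class EchoStateNetwork:
    """Fixed random recurrent reservoir; only the readout is trained."""

    def __init__(self, n_inputs, n_reservoir=100, spectral_radius=0.9, leak=0.3, seed=0):
        rng = np.random.default_rng(seed)
        self.w_in = rng.uniform(-0.5, 0.5, (n_reservoir, n_inputs))
        w = rng.uniform(-0.5, 0.5, (n_reservoir, n_reservoir))
        # Scale recurrent weights to the chosen spectral radius (usual echo-state heuristic).
        w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
        self.w = w
        self.leak = leak

    def final_state(self, trial):
        """Drive the reservoir with a (T, n_inputs) trial and return its last state."""
        x = np.zeros(self.w.shape[0])
        for u in trial:
            x = (1 - self.leak) * x + self.leak * np.tanh(self.w_in @ u + self.w @ x)
        return x


class GaussianReadout:
    """One Gaussian per class (diagonal covariance pooled across classes, equal priors)."""

    def fit(self, states, labels):
        self.classes = np.unique(labels)
        self.means = np.array([states[labels == c].mean(axis=0) for c in self.classes])
        resid = states - self.means[np.searchsorted(self.classes, labels)]
        self.var = resid.var(axis=0) + 1e-6  # small floor avoids division by zero
        return self

    def predict(self, states):
        # With a shared covariance, maximum likelihood = smallest variance-weighted distance.
        dist = (((states[:, None, :] - self.means[None, :, :]) ** 2) / self.var).sum(axis=2)
        return self.classes[np.argmin(dist, axis=1)]


def synthetic_trials(n_per_class, n_classes=4, n_channels=8, length=50, seed=1):
    """Hypothetical stand-in for ECoG: a class-specific channel pattern plus noise."""
    rng = np.random.default_rng(seed)
    prototypes = rng.normal(0.0, 1.0, (n_classes, n_channels))
    trials, labels = [], []
    for c in range(n_classes):
        for _ in range(n_per_class):
            trials.append(prototypes[c] + 0.3 * rng.normal(size=(length, n_channels)))
            labels.append(c)
    return trials, np.array(labels)


if __name__ == "__main__":
    trials, labels = synthetic_trials(n_per_class=40)
    esn = EchoStateNetwork(n_inputs=8)
    states = np.array([esn.final_state(t) for t in trials])
    train = np.arange(len(labels)) % 2 == 0  # alternate trials for train / test
    readout = GaussianReadout().fit(states[train], labels[train])
    accuracy = np.mean(readout.predict(states[~train]) == labels[~train])
    print(f"held-out accuracy {accuracy:.2f} vs chance {1 / 4:.2f}")
```

The design choice worth noting is that the reservoir weights are never trained; only the class means and pooled variance of the readout are estimated, which keeps fitting cheap when few trials are available.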

-

Five Projects Ranked in the Top 100 for National R..

< Distinguished Professor Sang Yup Lee, Professor Kwang-Hyun Cho, Professor Byungha Shin, Professor Jiyong Eom, and Professor Myungchul Kim (from left) >

Five KAIST research projects were selected as the 2021 Top 100 for National R&D Excellence by the Ministry of Science and ICT and the Korea Institute of Science & Technology Evaluation and Planning. The five projects are:
-The development of E. coli that proliferates with only formic acid and carbon dioxide by Distinguished Professor Sang Yup Lee from the Department of Chemical and Biomolecular Engineering
-An original reverse aging technology that converts an old human skin cell into a younger one by Professor Kwang-Hyun Cho from the Department of Bio and Brain Engineering
-The development of next-generation high-efficiency perovskite-silicon tandem solar cells by Professor Byungha Shin from the Department of Materials Science and Engineering
-Research on the effects that ultrafine dust in the atmosphere has on energy consumption by Professor Jiyong Eom from the School of Business and Technology Management
-Research on a molecular trigger that controls the phase transformation of biomaterials by Professor Myungchul Kim from the Department of Bio and Brain Engineering

Since the program started in 2006, an Evaluation Committee composed of experts from industry, universities, and research institutes has made preliminary selections of the most outstanding research projects based on their significance as scientific and technological developments and their socioeconomic effects. The finalists then went through an open public evaluation. The final 100 studies are from six fields: 18 from mechanics & materials, 26 from biology & marine sciences, 19 from ICT & electronics, 10 from interdisciplinary research, and nine from natural science and infrastructure.
The selected 100 studies will receive a certificate and an award plaque from the Minister of Science and ICT as well as additional points in business and institutional evaluations according to the relevant regulations, and the selected researchers will be strongly recommended as candidates for national meritorious awards. In particular, to make the 100 selected research projects more accessible to the general public, their main contents will be provided in a free e-book, ‘The Top 100 for National R&D Excellence of 2021’, available from online booksellers.

-

Improving speech intelligibility with Privacy-Pres..

A privacy-preserving AR system can augment the speaker's speech with real-life subtitles to overcome the loss of contextual cues caused by mask-wearing and social distancing during the COVID-19 pandemic

Degraded speech intelligibility induces participants in face-to-face conversations to speak louder and more distinctly, exposing the content to potential eavesdroppers. Similarly, face masks degrade the wearer's speech intelligibility, especially in the post-COVID-19 era. Augmented Reality (AR) can serve as an effective tool to visualise people's conversations and promote speech intelligibility, an approach known as speech augmentation. However, visualised conversations without proper privacy management can expose AR users to privacy risks.

An international research team led by Prof. Lik-Hang LEE in the Department of Industrial and Systems Engineering at KAIST and Prof. Pan HUI in Computational Media and Arts at Hong Kong University of Science and Technology employed a conversation-oriented Contextual Integrity (CI) principle to develop a privacy-preserving AR framework for speech augmentation. At its core, the framework, named Theophany, establishes ad-hoc social networks between relevant conversation participants to exchange contextual information and improve speech intelligibility in real time.

< Figure 1: A real-life subtitle application with AR headsets >

Theophany has been implemented as a real-life subtitle application in AR to improve speech intelligibility in daily conversations (Figure 1). This implementation leverages multi-modal channels such as eye-tracking, camera, and audio. Theophany transcribes the user's speech into text and estimates the intended recipients through gaze detection. The CI Enforcer module evaluates each sentence's sensitivity. If the sensitivity meets the speaker's privacy threshold, the sentence is transmitted to the appropriate recipients (Figure 2).
< Figure 2: Multi-modal Contextual Integrity Channel >

Following the principles of Contextual Integrity (CI), the team designed privacy-perception parameters for privacy-preserving face-to-face conversations, such as topic, location, and participants. Accordingly, Theophany's operation depends on the topic and session. Figure 3 illustrates several conversation sessions: (a) the topic is not sensitive and is transmitted to everybody in the user's gaze; (b) the topic is work-sensitive and is transmitted only to the coworker; (c) the topic is sensitive and is transmitted only to the friend in the user's gaze; (d) a new friend entering the user's gaze receives the textual transcription only once a new session (topic) starts; (e) the topic is highly sensitive, and nobody receives the textual transcription.

< Figure 3: Speech Augmentation in five illustrative sessions >

Theophany within a prototypical AR system augments the speaker's speech with real-life subtitles to overcome the loss of contextual cues caused by mask-wearing and social distancing during the COVID-19 pandemic. The research was published in ACM Multimedia under the title 'Theophany: Multi-modal Speech Augmentation in Instantaneous Privacy Channels' (DOI: 10.1145/3474085.3475507) and was selected as one of the top five best paper award candidates. Note that the first author is an alumnus of the Industrial and Systems Engineering Department at KAIST.

Short Bio: Lik-Hang Lee received a PhD degree from SyMLab, Hong Kong University of Science and Technology, and Bachelor's and M.Phil. degrees from the University of Hong Kong. He is currently an assistant professor (tenure-track) at the Korea Advanced Institute of Science and Technology (KAIST), South Korea, and the head of the Augmented Reality and Media Laboratory, KAIST. He has designed and built various human-centric computing systems, specializing in augmented and virtual reality (AR/VR).
In recent years, he has published more than 30 research papers on AR/VR at prestigious venues such as ACM WWW, ACM IMWUT, ACM Multimedia, ACM CSUR, and IEEE PerCom. He also serves the research community as a TPC member, PC member, and workshop organizer at prestigious venues such as AAAI, IJCAI, IEEE PerCom, ACM CHI, ACM Multimedia, ACM IMWUT, and IEEE VR.
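The routing rule described for the CI Enforcer (check a sentence against the speaker's privacy threshold, then deliver it only to appropriate listeners in the speaker's gaze, with membership fixed per session) can be sketched in Python. This is a hypothetical illustration of the five Figure 3 sessions, not Theophany's implementation: the sensitivity labels, relation names, threshold, and the `CIEnforcer` class shown here are assumptions made for the example.

```python
from dataclasses import dataclass, field

# Hypothetical sensitivity ladder and contextual-integrity norms: the labels,
# relations and threshold are illustrative assumptions, not Theophany's parameters.
SENSITIVITY = {"public": 0, "work": 1, "personal": 2, "highly_sensitive": 3}
ALLOWED_RELATIONS = {
    "public": {"stranger", "coworker", "friend"},
    "work": {"coworker"},
    "personal": {"friend"},
}


@dataclass(frozen=True)
class Listener:
    name: str
    relation: str  # relation to the speaker, e.g. "coworker" or "friend"


@dataclass
class Session:
    topic: str
    members: set = field(default_factory=set)  # names in gaze when the session began


class CIEnforcer:
    """Decides who receives each subtitle for the current conversation session."""

    def __init__(self, privacy_threshold=SENSITIVITY["personal"]):
        self.privacy_threshold = privacy_threshold
        self.session = None

    def start_session(self, topic, gazed):
        # Membership is frozen at session start; late arrivals wait for the next topic.
        self.session = Session(topic, {p.name for p in gazed})

    def recipients(self, gazed):
        topic = self.session.topic
        if SENSITIVITY[topic] > self.privacy_threshold:
            return []  # too sensitive for the speaker: nobody gets a transcript
        allowed = ALLOWED_RELATIONS.get(topic, set())
        return sorted(p.name for p in gazed
                      if p.name in self.session.members and p.relation in allowed)


if __name__ == "__main__":
    alice, bob, carol = Listener("alice", "coworker"), Listener("bob", "friend"), Listener("carol", "stranger")
    enforcer = CIEnforcer()
    enforcer.start_session("public", [alice, bob, carol])
    print(enforcer.recipients([alice, bob, carol]))  # ['alice', 'bob', 'carol']
    enforcer.start_session("work", [alice, bob])
    print(enforcer.recipients([alice, bob]))         # ['alice']
    enforcer.start_session("personal", [alice])
    dave = Listener("dave", "friend")
    print(enforcer.recipients([alice, dave]))        # [] (dave arrived mid-session)
    enforcer.start_session("personal", [alice, dave])
    print(enforcer.recipients([alice, dave]))        # ['dave']
    enforcer.start_session("highly_sensitive", [bob])
    print(enforcer.recipients([bob]))                # []
```

The five calls in the demo mirror sessions (a) to (e). Freezing membership at session start is one simple way to reproduce case (d), where a newcomer only receives subtitles once a new topic begins.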

-

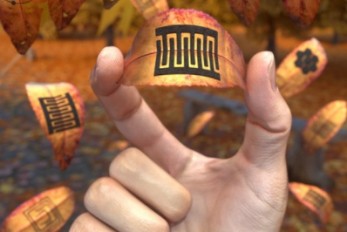

Eco-Friendly Micro-Supercapacitors Using Fallen Le..

Femtosecond micro-supercapacitors on a single leaf could easily be applied to wearable electronics, smart houses, and IoT devices

< Image: The schematic illustration of the production of femtosecond laser-induced graphene. >

A KAIST research team has developed a graphene-inorganic-hybrid micro-supercapacitor made of leaves using femtosecond direct laser writing lithography.

The advancement of wearable electronic devices is synonymous with innovations in flexible energy storage devices. Of the various energy storage devices, micro-supercapacitors have drawn a great deal of interest for their high electrical power density, long lifetimes, and short charging times. However, the growing consumption of electronic equipment, combined with the short replacement cycles that follow advancements in mobile devices, has increased the generation of waste batteries. The safety and environmental issues involved in collecting, recycling, and processing these waste batteries pose a number of challenges.

Forests cover about 30 percent of the Earth’s land surface, producing a huge amount of fallen leaves. This naturally occurring biomass comes in large quantities and is both biodegradable and reusable, which makes it an attractive, eco-friendly material. However, if the leaves are left unused, they can contribute to fires or water pollution.

To solve both problems at once, a research team led by Professor Young-Jin Kim from the Department of Mechanical Engineering and Dr. Hana Yoon from the Korea Institute of Energy Research developed a one-step technology that creates porous 3D graphene micro-electrodes with high electrical conductivity by irradiating the surface of leaves with femtosecond laser pulses in atmospheric conditions, without additional materials or treatment. Taking this strategy further, the team also suggested a method for producing flexible micro-supercapacitors.
They showed that this technique could quickly and easily produce porous graphene-inorganic-hybrid electrodes at low cost, and validated their performance by using the graphene micro-supercapacitors to power an LED and an electronic watch that could function as a thermometer, hygrometer, and timer. These results open up the possibility of mass-producing flexible, green graphene-based electronic devices.

Professor Young-Jin Kim said, “Leaves create forest biomass that comes in unmanageable quantities, so using them for next-generation energy storage devices makes it possible for us to reuse waste resources, thereby establishing a virtuous cycle.”

This research was published in Advanced Functional Materials in December 2021 and was sponsored by the Ministry of Agriculture, Food and Rural Affairs, the Korea Forest Service, and the Korea Institute of Energy Research.

-Publication Truong-Son Dinh Le, Yeong A. Lee, Han Ku Nam, Kyu Yeon Jang, Dongwook Yang, Byunggi Kim, Kanghoon Yim, Seung Woo Kim, Hana Yoon, and Young-jin Kim, “Green Flexible Graphene-Inorganic-Hybrid Micro-Supercapacitors Made of Fallen Leaves Enabled by Ultrafast Laser Pulses,” December 05, 2021, Advanced Functional Materials (doi.org/10.1002/adfm.202107768) -Profile Professor Young-Jin Kim Ultra-Precision Metrology and Manufacturing (UPM2) Laboratory Department of Mechanical Engineering KAIST
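The article reports that the leaf-derived micro-supercapacitors powered an LED and a watch but gives no figures of merit. Devices like these are commonly compared using areal metrics derived from one constant-current (galvanostatic) discharge: capacitance C_A = IΔt/(AΔV), energy E_A = ½C_A V², and power P_A = E_A/Δt. The Python sketch below applies these textbook formulas; the input values are hypothetical and are not measurements from the KAIST study, and the function name is illustrative.

```python
from dataclasses import dataclass


@dataclass
class AreaMetrics:
    capacitance_mf_per_cm2: float
    energy_uwh_per_cm2: float
    power_uw_per_cm2: float


def galvanostatic_metrics(current_a, discharge_s, area_cm2, voltage_window_v):
    """Textbook areal figures of merit from one constant-current discharge.

    Ignores the IR drop at the start of discharge, which real analyses subtract.
    """
    if min(current_a, discharge_s, area_cm2, voltage_window_v) <= 0:
        raise ValueError("all inputs must be positive")
    c_f = current_a * discharge_s / (area_cm2 * voltage_window_v)  # C_A = I*dt / (A*dV), F/cm^2
    e_j = 0.5 * c_f * voltage_window_v ** 2                        # E_A = C_A*V^2 / 2, J/cm^2
    p_w = e_j / discharge_s                                         # P_A = E_A / dt, W/cm^2
    return AreaMetrics(c_f * 1e3, e_j / 3600 * 1e6, p_w * 1e6)


if __name__ == "__main__":
    # Hypothetical discharge: 10 uA for 20 s over a 1.0 V window on a 0.1 cm^2 device.
    print(galvanostatic_metrics(10e-6, 20.0, 0.1, 1.0))
```

For those hypothetical inputs the formulas give 2 mF/cm², about 0.28 µWh/cm², and 50 µW/cm².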

-

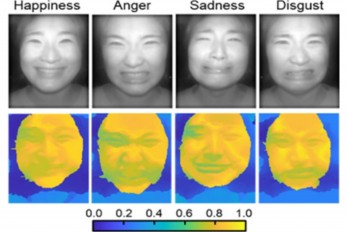

AI Light-Field Camera Reads 3D Facial Expressions

Machine-learned light-field camera reads facial expressions from high-contrast, illumination-invariant 3D facial images

< Image: Facial expression reading based on MLP classification from 3D depth maps and 2D images obtained by NIR-LFC >

A joint research team led by Professors Ki-Hun Jeong and Doheon Lee from the KAIST Department of Bio and Brain Engineering reported the development of a technique for facial expression detection that merges near-infrared light-field camera techniques with artificial intelligence (AI) technology.

Unlike a conventional camera, the light-field camera contains micro-lens arrays in front of the image sensor, which makes the camera small enough to fit into a smartphone while allowing it to acquire both the spatial and directional information of light in a single shot. The technique has received attention because it can reconstruct images in a variety of ways, including multi-view imaging, refocusing, and 3D image acquisition, giving rise to many potential applications. However, shadows caused by external light sources in the environment and optical crosstalk between the micro-lenses have prevented existing light-field cameras from providing accurate image contrast and 3D reconstruction.

The joint research team applied a vertical-cavity surface-emitting laser (VCSEL) in the near-IR range to stabilize the accuracy of 3D image reconstruction, which previously depended on environmental light. When an external light source was shone on a face at 0-, 30-, and 60-degree angles, the light-field camera reduced image reconstruction errors by 54%. Additionally, by inserting a light-absorbing layer for visible and near-IR wavelengths between the micro-lens arrays, the team could minimize optical crosstalk while increasing image contrast by 2.1 times.
Through this technique, the team overcame the limitations of existing light-field cameras and developed their NIR-based light-field camera (NIR-LFC), optimized for the 3D image reconstruction of facial expressions. Using the NIR-LFC, the team acquired high-quality 3D reconstruction images of facial expressions conveying various emotions, regardless of the lighting conditions of the surrounding environment.

The facial expressions in the acquired 3D images were classified through machine learning with an average accuracy of 85%, a statistically significant improvement over the accuracy obtained with 2D images. Furthermore, by calculating the interdependency of distance information that varies with facial expression in 3D images, the team identified the information a light-field camera uses to distinguish human expressions.

Professor Ki-Hun Jeong said, “The sub-miniature light-field camera developed by the research team has the potential to become the new platform to quantitatively analyze the facial expressions and emotions of humans.” To highlight the significance of this research, he added, “It could be applied in various fields including mobile healthcare, field diagnosis, social cognition, and human-machine interactions.”

This research was published in Advanced Intelligent Systems online on December 16, 2021, under the title “Machine-Learned Light-field Camera that Reads Facial Expression from High-Contrast and Illumination Invariant 3D Facial Images.” This research was funded by the Ministry of Science and ICT and the Ministry of Trade, Industry and Energy.

-Publication “Machine-learned light-field camera that reads facial expression from high-contrast and illumination invariant 3D facial images,” Sang-In Bae, Sangyeon Lee, Jae-Myeong Kwon, Hyun-Kyung Kim, Kyung-Won Jang, Doheon Lee, Ki-Hun Jeong, Advanced Intelligent Systems, December 16, 2021 (doi.org/10.1002/aisy.202100182) -Profile Professor Ki-Hun Jeong Biophotonic Laboratory Department of Bio and Brain Engineering KAIST Professor Doheon Lee Department of Bio and Brain Engineering KAIST
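Because a micro-lens array records light from slightly different directions, a light-field image can be rearranged into several sub-aperture views that behave roughly like a tiny camera array, and depth can then be estimated by triangulation, z = f·b/d. The Python sketch below applies that generic pinhole relation only; it is a simplification, not the team's NIR-LFC reconstruction pipeline, and the focal length, view spacing (baseline), and pixel pitch are hypothetical values.

```python
def depth_from_disparity_mm(focal_length_mm, baseline_mm, disparity_px, pixel_pitch_um):
    """Pinhole triangulation z = f * b / d, with the disparity converted to millimetres."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means a point at infinity)")
    disparity_mm = disparity_px * pixel_pitch_um / 1000.0
    return focal_length_mm * baseline_mm / disparity_mm


if __name__ == "__main__":
    # Hypothetical optics: 4 mm focal length, 0.5 mm between sub-aperture views, 2 um pixels.
    for d in (4, 2, 1):
        print(d, "px ->", round(depth_from_disparity_mm(4.0, 0.5, d, 2.0)), "mm")
```

With these assumed optics, disparities of 4, 2, and 1 pixel map to about 250, 500, and 1000 mm. Each pixel of disparity therefore spans a larger depth step at longer range, which is why accurate, high-contrast sub-aperture images matter for fine 3D facial detail.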

-

Face Detection in Untrained Deep Neural Networks

A KAIST team shows that primitive visual selectivity for faces can arise spontaneously in completely untrained deep neural networks

Researchers have found that higher visual cognitive functions can arise spontaneously in untrained neural networks. A KAIST research team led by Professor Se-Bum Paik from the Department of Bio and Brain Engineering has shown that visual selectivity for facial images can arise even in completely untrained deep neural networks. This finding provides new insight into the mechanisms underlying the development of cognitive functions in both biological and artificial neural networks, and into the origin of early brain functions before sensory experience.

The study, published in Nature Communications on December 16, demonstrates that neuronal activities selective for facial images are observed in randomly initialized deep neural networks in the complete absence of learning, and that they show the characteristics of those observed in biological brains.

The ability to identify and recognize faces is a crucial function for social behavior, and this ability is thought to originate from neuronal tuning at the single- or multi-neuronal level. Neurons that selectively respond to faces are observed in young animals of various species, raising intense debate over whether face-selective neurons can arise innately in the brain or require visual experience.

Using a model neural network that captures properties of the ventral stream of the visual cortex, the research team found that face-selectivity can emerge spontaneously from random feedforward wirings in untrained deep neural networks. The team showed that the character of this innate face-selectivity is comparable to that observed with face-selective neurons in the brain, and that this spontaneous neuronal tuning for faces enables the network to perform face detection tasks.
These results imply a possible scenario in which the random feedforward connections that develop in early, untrained networks may be sufficient for initializing primitive visual cognitive functions.

Professor Paik said, “Our findings suggest that innate cognitive functions can emerge spontaneously from the statistical complexity embedded in the hierarchical feedforward projection circuitry, even in the complete absence of learning.” He continued, “Our results provide a broad conceptual advance as well as advanced insight into the mechanisms underlying the development of innate functions in both biological and artificial neural networks, which may unravel the mystery of the generation and evolution of intelligence.”

This work was supported by the National Research Foundation of Korea (NRF) and by the KAIST singularity research project.

-Publication Seungdae Baek, Min Song, Jaeson Jang, Gwangsu Kim, and Se-Bum Paik, “Face detection in untrained deep neural networks,” Nature Communications 12, 7328, December 16, 2021 (https://doi.org/10.1038/s41467-021-27606-9) -Profile Professor Se-Bum Paik Visual System and Neural Network Laboratory Program of Brain and Cognitive Engineering Department of Bio and Brain Engineering College of Engineering KAIST
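Claims of face-selectivity rest on a per-unit index comparing responses to face and non-face images. The Python sketch below shows that measurement procedure on a randomly initialized, never-trained layer, using a d′ index, one common choice. It is only a sketch under stated assumptions: the study's exact index, threshold, and network are not described in this article, and the "face" and "other" stimuli here are hypothetical random vectors, not images.

```python
import random
import statistics


def dprime(face_resp, nonface_resp):
    """Selectivity d' = (mean_face - mean_other) / sqrt((var_face + var_other) / 2)."""
    mf, mo = statistics.mean(face_resp), statistics.mean(nonface_resp)
    pooled_sd = ((statistics.pvariance(face_resp) + statistics.pvariance(nonface_resp)) / 2) ** 0.5
    return 0.0 if pooled_sd == 0 else (mf - mo) / pooled_sd


def random_layer(n_in, n_out, rng):
    """Untrained weights: Gaussian with std 1/sqrt(n_in), never updated."""
    return [[rng.gauss(0.0, n_in ** -0.5) for _ in range(n_in)] for _ in range(n_out)]


def relu_responses(weights, x):
    """Rectified response of every unit in the layer to one stimulus vector."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]


def selective_units(weights, faces, others, threshold=1.0):
    """Indices of units whose d' for faces vs. other stimuli exceeds the threshold."""
    face_by_unit = list(zip(*(relu_responses(weights, x) for x in faces)))
    other_by_unit = list(zip(*(relu_responses(weights, x) for x in others)))
    scores = [dprime(f, o) for f, o in zip(face_by_unit, other_by_unit)]
    return [i for i, s in enumerate(scores) if s > threshold], scores


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical stand-in stimuli: "faces" share a fixed template plus noise, "others" are noise.
    template = [rng.gauss(0, 1) for _ in range(32)]
    faces = [[t + rng.gauss(0, 0.5) for t in template] for _ in range(50)]
    others = [[rng.gauss(0, 1) for _ in range(32)] for _ in range(50)]
    layer = random_layer(32, 64, rng)
    units, scores = selective_units(layer, faces, others)
    print(f"{len(units)} of {len(scores)} untrained units exceed d' = 1")
```

No weight is ever updated, so any unit that passes the threshold does so purely through its random wiring interacting with the statistics of the stimulus sets, which is the kind of effect the study reports at much larger scale with real images.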

-

Team KAIST to Race at CES 2022 Autonomous Challeng..

Five top university autonomous racing teams will compete in a head-to-head passing competition in Las Vegas

< Team KAIST, the self-driving team from the Unmanned System Research Group (USRG) advised by Professor Hyunchul Shim (second from right) >

A self-driving racing team from the KAIST Unmanned System Research Group (USRG) advised by Professor Hyunchul Shim will compete at the Autonomous Challenge at the Consumer Electronics Show (CES) on January 7, 2022. The head-to-head, high-speed autonomous racecar passing competition at the Las Vegas Motor Speedway will feature the finalists and semifinalists from the Indy Autonomous Challenge held in October 2021. Team KAIST qualified as a semifinalist at the Indy Autonomous Challenge and will join four other university teams, including the winner of that competition, Technische Universität München. Team KAIST’s AV-21 vehicle, which can drive on its own at more than 200 km/h, is expected to exceed 300 km/h at the race.

The participating teams are:
1. KAIST
2. EuroRacing: University of Modena and Reggio Emilia (Italy), University of Pisa (Italy), ETH Zürich (Switzerland), Polish Academy of Sciences (Poland)
3. MIT-PITT-RW: Massachusetts Institute of Technology, University of Pittsburgh, Rochester Institute of Technology, University of Waterloo (Canada)
4. PoliMOVE: Politecnico di Milano (Italy), University of Alabama
5. TUM Autonomous Motorsport: Technische Universität München (Germany)

Professor Shim’s team is dedicated to the development and validation of cutting-edge technologies for highly autonomous vehicles. In recognition of his pioneering research in unmanned system technologies, Professor Shim was honored with the Grand Prize of the Minister of Science and ICT on December 9. “We began autonomous vehicle research in 2009 when we signed up for Hyundai Motor Company’s Autonomous Driving Challenge.
For this, we developed a complete set of in-house technologies such as low-level vehicle control, perception, localization, and decision making.” In 2019, the team came in third place in the Challenge, and this year it finally won. For years, the team has participated in many unmanned systems challenges at home and abroad, gaining worldwide recognition. The team won the inaugural IROS Autonomous Drone Racing competition in 2016 and placed second in the 2018 IROS Autonomous Drone Racing Competition. It also competed in the 2017 Mohamed Bin Zayed International Robotics Challenge (MBZIRC), ranking fourth in Missions 2 and 3 and fifth in the Grand Challenge. Most recently, the team won the first round of Lockheed Martin’s Alpha Pilot AI Drone Innovation Challenge. The team is now participating in the DARPA Subterranean Challenge as a member of Team CoSTAR with NASA JPL, MIT, and Caltech. “We have accumulated plenty of first-hand experience developing autonomous vehicles with the support of domestic companies such as Hyundai Motor Company, Samsung, LG, and NAVER. In 2017, the autonomous vehicle platform ‘EureCar’ that we developed in-house was authorized by the Korean government to conduct autonomous driving experiments on public roads,” said Professor Shim. The team has developed various key technologies and algorithms for unmanned systems, which fall into three major components: perception, planning, and control. Because the algorithms in each module have different characteristics, the system runs on a distributed computing platform. Since 2015, the team has been actively using deep learning algorithms in its perception subsystem. Contextual information extracted from multimodal sensor data gathered via cameras, lidar, radar, GPS, IMU, and other sensors is forwarded to the planning subsystem.
The planning module is responsible for the decision making and planning required for autonomous driving, such as lane-change decisions, trajectory planning, emergency stops, and velocity command generation. The planner’s output is fed into the controller, which executes the planned high-level commands. The team has also developed an end-to-end, deep-learning-based autonomous driving approach that replaces the complex modular system with a single AI network, and has verified its feasibility.
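The perception, planning, and control split described above can be illustrated with a minimal single-process sketch. Everything in it is an assumption for illustration: the `Perception` and `Plan` types, the rule-based planner, the proportional speed controller, and all thresholds and gains are hypothetical and are not Team KAIST’s software, which the article says runs on a distributed computing system and uses deep learning for perception. The sketch only shows how a fused perception result flows into a planner that chooses a lane change, an emergency stop, or a velocity command, and how that plan is handed to a controller.

```python
from dataclasses import dataclass

# Hypothetical, simplified sketch of a perception -> planning -> control loop.
# None of these names, thresholds, or gains come from Team KAIST's system.

@dataclass
class Perception:
    """Fused perception output (assumed to come from cameras, lidar, radar, GPS, IMU)."""
    ego_speed: float           # current vehicle speed, m/s
    lead_gap: float            # distance to the vehicle ahead, m
    obstacle_in_lane: bool     # something is blocking the current lane
    adjacent_lane_free: bool   # a lane change is currently possible

@dataclass
class Plan:
    action: str                # "keep_lane", "change_lane", or "emergency_stop"
    target_speed: float        # velocity command, m/s

def plan(p: Perception, cruise_speed: float = 30.0, min_gap: float = 20.0) -> Plan:
    """Planning: lane-change decision, emergency stop, and velocity command."""
    blocked = p.obstacle_in_lane or p.lead_gap < min_gap
    if not blocked:
        return Plan("keep_lane", cruise_speed)
    if p.adjacent_lane_free:
        return Plan("change_lane", cruise_speed)
    if p.obstacle_in_lane:
        return Plan("emergency_stop", 0.0)
    # Following too closely with nowhere to go: scale speed down with the gap.
    return Plan("keep_lane", cruise_speed * p.lead_gap / min_gap)

def control(p: Perception, cmd: Plan, kp: float = 0.5,
            max_accel: float = 3.0, max_brake: float = 8.0) -> float:
    """Control: turn the planned velocity into a clipped acceleration command (m/s^2)."""
    if cmd.action == "emergency_stop":
        return -max_brake
    accel = kp * (cmd.target_speed - p.ego_speed)   # proportional speed control
    return max(-max_brake, min(max_accel, accel))

def pipeline_step(p: Perception) -> tuple[Plan, float]:
    """One cycle: perception result -> planner -> controller."""
    cmd = plan(p)
    return cmd, control(p, cmd)

if __name__ == "__main__":
    clear_road = Perception(ego_speed=25.0, lead_gap=100.0,
                            obstacle_in_lane=False, adjacent_lane_free=True)
    print(pipeline_step(clear_road))  # (Plan(action='keep_lane', target_speed=30.0), 2.5)
```

Keeping planning and control as separate functions with a small data type passed between them mirrors the modular structure the article describes; an end-to-end approach would instead replace all three stages with a single learned network.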

-

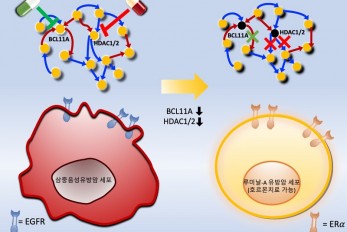

Connecting the Dots to Find New Treatments for Bre..

Systems biologists uncovered new ways of cancer cell reprogramming to treat drug-resistant cancers < Professor Kwang-Hyun Cho and colleagues developed a mathematical model and identified optimal targets for reprogramming basal-like cancer cells into hormone therapy-responsive luminal-A cells by deciphering the complex molecular interactions within these cells through a systems biology approach. > Scientists at KAIST believe they may have found a way to convert an aggressive, treatment-resistant type of breast cancer into a less dangerous kind that responds well to treatment. The study used mathematical models to untangle the complex genetic and molecular interactions that occur in the two types of breast cancer, and the approach could be extended to find treatments for many other cancers. The study’s findings were published in the journal Cancer Research. Basal-like tumours are the most aggressive type of breast cancer, with the worst prognosis. Chemotherapy is the only available treatment option, but patients experience high recurrence rates. In contrast, luminal-A breast cancer responds well to drugs that specifically target a receptor called estrogen receptor alpha (ERα). KAIST systems biologist Kwang-Hyun Cho and colleagues analyzed the complex molecular and genetic interactions of basal-like and luminal-A breast cancers to find out whether the former could be switched to the latter, giving patients a better chance of responding to treatment. To do this, they accessed large amounts of cancer and patient data to understand which genes and molecules are involved in the two types. They then fed this data into a mathematical model that represents genes, proteins, and molecules as dots and the interactions between them as lines. The model can be used to run simulations that show how interactions change when certain genes are turned on or off.
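The article describes the model only as dots and lines that can be simulated with genes switched on or off; it does not say which formalism the team used. One common way to run that kind of “knock-out” simulation is a Boolean network, sketched below under that assumption. The four nodes (`R`, `ER`, `B`, `L`) and their wiring are hypothetical placeholders, not BCL11A, HDAC1/2, or any interaction from the published model; the sketch only shows how holding one node OFF can move a whole network from one stable state (an attractor) to another.

```python
from typing import Callable, Dict, Iterable, List

# Toy Boolean network: each node (gene/protein) is ON (True) or OFF (False),
# and each rule says how a node's next value depends on the nodes wired to it.
# The nodes and wiring are invented for illustration; they are NOT the
# published basal-like/luminal-A breast cancer network.
State = Dict[str, bool]

RULES: Dict[str, Callable[[State], bool]] = {
    "R":  lambda s: s["R"],                   # hypothetical upstream regulator, self-sustaining
    "ER": lambda s: not s["R"],               # R represses a hormone-receptor node
    "B":  lambda s: s["R"] and not s["L"],    # basal-like program: needs R, inhibited by L
    "L":  lambda s: s["ER"] and not s["B"],   # luminal-like program: needs ER, inhibited by B
}

def update(state: State, knockouts: Iterable[str] = ()) -> State:
    """Synchronously update every node, then hold knocked-out nodes OFF."""
    new = {node: rule(state) for node, rule in RULES.items()}
    for node in knockouts:
        new[node] = False
    return new

def find_attractor(state: State, knockouts: Iterable[str] = (),
                   max_steps: int = 100) -> List[State]:
    """Simulate until a state repeats; return the repeating states (the attractor)."""
    knockouts = tuple(knockouts)
    state = dict(state)
    for node in knockouts:          # "turn off" the targeted nodes from the start
        state[node] = False
    seen: List[State] = []
    for _ in range(max_steps):
        if state in seen:
            return seen[seen.index(state):]
        seen.append(state)
        state = update(state, knockouts)
    raise RuntimeError("no attractor reached within max_steps")

if __name__ == "__main__":
    basal_like = {"R": True, "ER": False, "B": True, "L": False}
    print(find_attractor(basal_like))                   # stays in the basal-like state
    print(find_attractor(basal_like, knockouts={"R"}))  # settles into the ER/L state
```

In this toy, the basal-like starting state is already stable on its own; holding `R` OFF lets `ER` switch on, which silences `B` and activates `L`, so the network settles into a different stable state. Data-driven models of this kind contain far more nodes, and their rules are inferred from experimental and patient data rather than written by hand.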
“There have been a tremendous number of studies trying to find therapeutic targets for treating basal-like breast cancer patients,” says Cho. “But clinical trials have failed due to the complex and dynamic nature of cancer. To overcome this issue, we looked at breast cancer cells as a complex network system and implemented a systems biological approach to unravel the underlying mechanisms that would allow us to reprogram basal-like into luminal-A breast cancer cells.” Using this approach, followed by experimental validation on real breast cancer cells, the team found that turning off two key gene regulators, BCL11A and HDAC1/2, switched a basal-like cancer signalling pathway into a different one used by luminal-A cancer cells. The switch reprograms the cancer cells and makes them more responsive to drugs that target ERα. However, further tests will be needed to confirm that this also works in animal models and, eventually, in humans. “Our study demonstrates that the systems biological approach can be useful for identifying novel therapeutic targets,” says Cho. The researchers are now expanding their breast cancer network model to include all breast cancer subtypes. Their ultimate aim is to identify more drug targets and to understand the mechanisms that could drive drug-resistant cells to turn into drug-sensitive ones. This work was supported by the National Research Foundation of Korea, the Ministry of Science and ICT, the Electronics and Telecommunications Research Institute, and the KAIST Grand Challenge 30 Project. -Publication Sea R. Choi, Chae Young Hwang, Jonghoon Lee, and Kwang-Hyun Cho, “Network Analysis Identifies Regulators of Basal-like Breast Cancer Reprogramming and Endocrine Therapy Vulnerability,” Cancer Research, November 30 (doi:10.1158/0008-5472.CAN-21-0621) -Profile Professor Kwang-Hyun Cho Laboratory for Systems Biology and Bio-Inspired Engineering Department of Bio and Brain Engineering KAIST

-

A Team of Three PhD Candidates Wins the Korea Semi..

“We felt a sense of responsibility to help the nation advance its semiconductor design technology” < PhD candidates at the School of Electrical Engineering Yoon-Seo Cho, Sun-Eui Park, and Ju-Eun Bang (from left) > A CMOS (complementary metal-oxide semiconductor)-based “ultra-low noise signal chip” for 6G communications designed by three PhD candidates at the KAIST School of Electrical Engineering won the Presidential Award at the 22nd Korea Semiconductor Design Contest. The winners are PhD candidates Sun-Eui Park, Yoon-Seo Cho, and Ju-Eun Bang from the Integrated Circuits and System Lab run by Professor Jaehyouk Choi. The contest, hosted by the Ministry of Trade, Industry and Energy and the Korea Semiconductor Industry Association, is one of the top national semiconductor design contests for college students. Park said the team felt a sense of responsibility to help advance semiconductor design technology in Korea when deciding to participate in the contest. The team expressed deep gratitude to Professor Choi for guiding their research on 6G communications. “Our colleagues from other labs and seniors who already graduated helped us a great deal, so we owe them a lot,” explained Park. Cho added that their hard work had finally been recognized and that this recognition motivates her to push forward with her research. Meanwhile, Bang said she is delighted that so many people seem interested in her research topic. 6G research aims to reach data rates of 1 terabit per second (Tbps), 50 times faster than 5G, which offers transmission speeds of up to 20 gigabits per second (Gbps). In general, the wider the communication frequency band, the higher the data transmission speed. Thus, using frequency bands above 100 gigahertz (GHz) is essential for delivering the high data rates required for 6G communications. However, generating a precise reference signal that can serve as a carrier wave in such a high frequency band remains a major challenge.
Despite the advantages of CMOS technology’s ultra-small size and low power consumption, it has limitations at high frequencies, including a restricted operating frequency, which has made it difficult to reach frequency bands above 100 GHz. To overcome these challenges, the three students introduced an ultra-low noise signal generation technology that can support high-order modulation schemes. This technology is expected to help improve the price competitiveness and integration density of future 6G communication chips. 5G only got started in 2020 and still has a long way to go before full commercialization. Nevertheless, many researchers have started preparing for 6G, targeting 2030, since a new generation of cellular communication appears roughly every ten years. Professor Choi said, “Generating ultra-high frequency signals in bands above 100 GHz with highly accurate timing is one of the key technologies for implementing 6G communication hardware. Our research is significant for the development of the world’s first semiconductor chip that will use the CMOS process to achieve noise performance of less than 80 fs in a frequency band above 100 GHz.” The team members plan to work as circuit designers at Korean semiconductor companies after graduation. “We will continue researching signal generators for 6G, the topic of our award-winning work. We would also like to continue our research on high-speed circuit designs such as ultra-fast analog-to-digital converters,” Park added.

-

Professor Sung-Ju Lee’s Team Wins the Best Paper a..

< Professor Sung-Ju Lee, Professor Eun Kyoung Choe, Hyunsung Cho, Daeun Choi, Donghwi Kim, Wan Ju Kang (from left) > A research team led by Professor Sung-Ju Lee at the School of Electrical Engineering won the Best Paper Award and the Methods Recognition Award at ACM CSCW (International Conference on Computer-Supported Cooperative Work and Social Computing) 2021 for their paper “Reflect, not Regret: Understanding Regretful Smartphone Use with App Feature-Level Analysis.” Founded in 1986, CSCW is a premier conference on HCI (human-computer interaction) and social computing. This year, 340 full papers were presented, and Best Paper Awards were given to the top 1% of submitted papers. Methods Recognition, a new award, is given “for strong examples of work that includes well developed, explained, or implemented methods, and methodological innovation.” Hyunsung Cho (a KAIST alumnus and currently a PhD candidate at Carnegie Mellon University), Daeun Choi (KAIST undergraduate researcher), Donghwi Kim (KAIST PhD candidate), Wan Ju Kang (KAIST PhD candidate), and Professor Eun Kyoung Choe (University of Maryland and KAIST alumna) collaborated on this research. The authors developed a tool that tracks and analyzes which features of a mobile app (e.g., Instagram’s following posts, following stories, recommended posts, post uploading, and direct messaging) are in use, based on the smartphone’s user interface (UI) layout. Using this novel method, the authors identified which feature-usage patterns lead to regretful smartphone use. Professor Lee said, “Although many people enjoy the benefits of smartphones, issues have emerged from the overuse of smartphones. With this feature-level analysis, users can reflect on their smartphone usage at a finer grain, and this could contribute to digital well-being.” < Research achievements diagram: app feature-level usage analysis / UI layout analysis >