Kaist

Research News

College of Engineering News

-

KAIST presents strategies for environmentally frie..

- Provides current research trends in bio-based polyamide production
- Research on bio-based polyamide production gains importance for achieving a carbon-neutral society

Global industries focused on carbon neutrality, under the slogan "Net-Zero," are gaining increasing attention. In particular, research on the microbial production of polymers, which replaces traditional chemical methods with biological approaches, is actively progressing. Polyamides, represented by nylon, are linear polymers widely used in various industries such as automotive, electronics, textiles, and medical fields. They possess beneficial properties such as high tensile strength, electrical insulation, heat resistance, wear resistance, and biocompatibility. Since nylon was commercialized in 1938, polyamide production has grown to approximately 7 million tons worldwide each year. Considering their broad applications and significance, producing polyamides through bio-based methods holds considerable environmental and industrial importance.

KAIST (President Kwang-Hyung Lee) announced that a research team led by Distinguished Professor Sang Yup Lee, including Dr. Jong An Lee and doctoral candidate Ji Yeon Kim from the Department of Chemical and Biomolecular Engineering, published a paper titled "Current Advancements in Bio-Based Production of Polyamides". The paper was featured on the cover of the monthly issue of "Trends in Chemistry" by Cell Press.

As part of climate change response technologies, bio-refineries use biotechnological and chemical methods to produce industrially important chemicals and biofuels from renewable biomass without relying on fossil resources. Notably, systems metabolic engineering, pioneered by KAIST's Distinguished Professor Sang Yup Lee, is a research field that effectively manipulates microbial metabolic pathways to produce valuable chemicals, forming the core technology for bio-refineries.
The research team has successfully developed high-performance strains producing a variety of compounds, including succinic acid, biodegradable plastics, biofuels, and natural products, using systems metabolic engineering tools and strategies. The research team predicted that if bio-based polyamide production technology, which is widely used in the production of clothing and textiles, becomes widespread, it will attract attention as a future technology that can respond to the climate crisis due to its environment-friendly production technology. In this study, the research team comprehensively reviewed the bio-based polyamide production strategies. They provided insights into the advancements in polyamide monomer production using metabolically engineered microorganisms and highlighted the recent trends in bio-based polyamide advancements utilizing these monomers. Additionally, they reviewed the strategies for synthesizing bio-based polyamides through chemical conversion of natural oils and discussed the biodegradability and recycling of the polyamides. Furthermore, the paper presented the future direction in which metabolic engineering can be applied for the bio-based polyamide production, contributing to environmentally friendly and sustainable society. Ji Yeon Kim, the co-first author of this paper from KAIST, stated "The importance of utilizing systems metabolic engineering tools and strategies for bio-based polyamides production is becoming increasingly prominent in achieving carbon neutrality." Professor Sang Yup Lee emphasized, "Amid growing concerns about climate change, the significance of environmentally friendly and sustainable industrial development is greater than ever. Systems metabolic engineering is expected to have a significant impact not only on the chemical industry but also in various fields." < [Figure 1] A schematic overview of the overall process for polyamides production > This paper by Dr. Jong An Lee, PhD student Ji Yeon Kim, Dr. 
Jung Ho Ahn, and Master Yeah-Ji Ahn from the Department of Chemical and Biomolecular Engineering at KAIST was published in the December issue of 'Trends in Chemistry', an authoritative review journal in the field of chemistry published by Cell Press. It was published on December 7 as the cover paper and featured review. ※ Paper title: Current advancements in the bio-based production of polyamides ※ Author information: Jong An Lee, Ji Yeon Kim, Jung Ho Ahn, Yeah-Ji Ahn, and Sang Yup Lee This research was conducted with support from the Development of Platform Technologies of Microbial Cell Factories for the Next-Generation Biorefineries Project and the C1 Gas Refinery Program of the Korean Ministry of Science and ICT. < [Figure 2] Cover paper of the December issue of Trends in Chemistry >

-

KAIST introduces eco-friendly technologies for pla..

- A research team under Distinguished Professor Sang Yup Lee of the Department of Chemical and Biomolecular Engineering published a paper in Nature Microbiology on the overview and trends of plastic production and degradation technology using microorganisms. - Eco-friendly and sustainable plastic production and degradation technology using microorganisms as a core technology to achieve a plastic circular economy was presented. Plastic is one of the important materials in modern society, with approximately 460 million tons produced annually and with expected production reaching approximately 1.23 billion tons in 2060. However, since 1950, plastic waste totaling more than 6.3 billion tons has been generated, and it is believed that more than 140 million tons of plastic waste has accumulated in the aquatic environment. Recently, the seriousness of microplastic pollution has emerged, not only posing a risk to the marine ecosystem and human health, but also worsening global warming by inhibiting the activity of marine plankton, which play an important role in lowering the Earth's carbon dioxide concentration. KAIST President Kwang-Hyung Lee announced on December 11 that a research team under Distinguished Professor Sang Yup Lee of the Department of Chemical and Biomolecular Engineering had published a paper titled 'Sustainable production and degradation of plastics using microbes', which covers the latest technologies for producing plastics and processing waste plastics in an eco-friendly manner using microorganisms. As the international community moves to solve this plastic problem, various efforts are being made, including 175 countries participating to conclude a legally binding agreement with the goal of ending plastic pollution by 2024. Various technologies are being developed for sustainable plastic production and processing, and among them, biotechnology using microorganisms is attracting attention. 
Microorganisms have the ability to naturally produce or decompose certain compounds, and this ability is maximized through biotechnologies such as metabolic engineering and enzyme engineering to produce plastics from renewable biomass resources instead of fossil raw materials and to decompose waste plastics. Accordingly, the research team comprehensively analyzed the latest microorganism-based technologies for the sustainable production and decomposition of plastics and presented how they actually contribute to solving the plastic problem. Based on this, they presented limitations, prospects, and research directions of the technologies for achieving a circular economy for plastics. Microorganism-based technologies for various plastics range from widely used synthetic plastics such as polyethylene (PE) to promising bioplastics such as natural polymers derived from microorganisms (polyhydroxyalkanoate (PHA)) that are completely biodegradable in the natural environment and do not pose a risk of microplastic generation. Commercialization statuses and latest technologies were also discussed. In addition, the technology to decompose these plastics using microorganisms and their enzymes and the upcycling technology to convert them into other useful compounds after decomposition were introduced, highlighting the competitiveness and potential of technology using microorganisms. First author So Young Choi, a research assistant professor in the Department of Chemical and Biomolecular Engineering at KAIST, said, “In the future, we will be able to easily find eco-friendly plastics made using microorganisms all around us,” and corresponding author Distinguished Professor Sang Yup Lee said, “Plastic can be made more sustainable. 
It is important to use plastics responsibly to protect the environment and simultaneously achieve economic and social development through the new plastics industry, and we look forward to the improved performance of microbial metabolic engineering technology.” This paper was published on November 30th in the online edition of Nature Microbiology. ※ Paper Title : Sustainable production and degradation of plastics using microbes Authors: So Young Choi, Youngjoon Lee, Hye Eun Yu, In Jin Cho, Minju Kang & Sang Yup Lee < Life cycle of plastics produced using microbial biotechnologies > This research was conducted with the support from the Development of Platform Technologies of Microbial Cell Factories for the Next-Generation Biorefineries Project (2022M3J5A1056117) and the Development of Platform Technology for the Production of Novel Aromatic Bioplastic Using Microbial Cell Factories Project (2022M3J4A1053699) by the Korean Ministry of Science and ICT.

-

KAIST-UCSD researchers build an enzyme discovering..

- A joint research team led by Distinguished Professor Sang Yup Lee of the Department of Chemical and Biomolecular Engineering and Bernhard Palsson of UCSD developed ‘DeepECtransformer’, an artificial intelligence that can predict the Enzyme Commission (EC) numbers of proteins.
- The AI is designed to discover enzymes that have not yet been identified, and it can predict a total of 5,360 types of EC numbers.
- It is expected to be used in the development of microbial cell factories that produce environmentally friendly chemicals as a core technology for analyzing the metabolic network of a genome.

While E. coli is one of the most studied organisms, the function of 30% of the proteins that make up E. coli has not yet been clearly revealed. The researchers used the artificial intelligence to discover 464 types of enzymes among these previously unknown proteins, and went on to verify the predictions for 3 of the proteins through in vitro enzyme assays.

KAIST (President Kwang-Hyung Lee) announced on the 24th that a joint research team comprising Gi Bae Kim, Ji Yeon Kim, Dr. Jong An Lee and Distinguished Professor Sang Yup Lee of the Department of Chemical and Biomolecular Engineering at KAIST, and Dr. Charles J. Norsigian and Professor Bernhard O. Palsson of the Department of Bioengineering at UCSD, has developed DeepECtransformer, an artificial intelligence that can predict enzyme functions from the protein sequence, and has established a prediction system that uses the AI to quickly and accurately identify enzyme function.

Enzymes are proteins that catalyze biological reactions, and identifying the function of each enzyme is essential to understanding the various chemical reactions that exist in living organisms and the metabolic characteristics of those organisms.
Enzyme Commission (EC) number is an enzyme function classification system designed by the International Union of Biochemistry and Molecular Biology, and in order to understand the metabolic characteristics of various organisms, it is necessary to develop a technology that can quickly analyze enzymes and EC numbers of the enzymes present in the genome. Various methodologies based on deep learning have been developed to analyze the features of biological sequences, including protein function prediction, but most of them have a problem of a black box, where the inference process of AI cannot be interpreted. Various prediction systems that utilize AI for enzyme function prediction have also been reported, but they do not solve this black box problem, or cannot interpret the reasoning process in fine-grained level (e.g., the level of amino acid residues in the enzyme sequence). The joint team developed DeepECtransformer, an AI that utilizes deep learning and a protein homology analysis module to predict the enzyme function of a given protein sequence. To better understand the features of protein sequences, the transformer architecture, which is commonly used in natural language processing, was additionally used to extract important features about enzyme functions in the context of the entire protein sequence, which enabled the team to accurately predict the EC number of the enzyme. The developed DeepECtransformer can predict a total of 5360 EC numbers. The joint team further analyzed the transformer architecture to understand the inference process of DeepECtransformer, and found that in the inference process, the AI utilizes information on catalytic active sites and/or the cofactor binding sites which are important for enzyme function. By analyzing the black box of DeepECtransformer, it was confirmed that the AI was able to identify the features that are important for enzyme function on its own during the learning process. 
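To make the EC number system described above more concrete, the following is a minimal Python sketch of how a four-level EC number can be split into its hierarchy. It is only an illustration of the classification scheme and is not part of DeepECtransformer; the function name `parse_ec_number` and the returned dictionary layout are hypothetical choices made for this example.

```python
# Illustrative sketch only: parses an EC number such as "1.1.1.1" into its
# hierarchy. The function name and output layout are hypothetical.

# The seven top-level EC classes of the IUBMB enzyme nomenclature.
EC_CLASSES = {
    1: "Oxidoreductases",
    2: "Transferases",
    3: "Hydrolases",
    4: "Lyases",
    5: "Isomerases",
    6: "Ligases",
    7: "Translocases",
}


def parse_ec_number(ec: str) -> dict:
    """Split a fully specified EC number into its four hierarchical levels."""
    parts = ec.strip().split(".")
    if len(parts) != 4:
        raise ValueError(f"expected four dot-separated fields, got {ec!r}")
    top_level = int(parts[0])
    if top_level not in EC_CLASSES:
        raise ValueError(f"unknown top-level EC class in {ec!r}")
    return {
        "class": EC_CLASSES[top_level],
        "levels": parts,
        # Each prefix names a progressively narrower group of enzymes.
        "prefixes": [".".join(parts[:i]) for i in range(1, 5)],
    }


# EC 1.1.1.1 is the entry for alcohol dehydrogenase.
example = parse_ec_number("1.1.1.1")
print(example["class"])
print(example["prefixes"])
```

A predictor of enzyme function can be thought of as assigning one or more such four-field labels to a protein sequence; the sketch only covers the label format, not the prediction itself.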
"By utilizing the prediction system we developed, we were able to predict the functions of enzymes that had not yet been identified and verify them experimentally," said Gi Bae Kim, the first author of the paper. "By using DeepECtransformer to identify previously unknown enzymes in living organisms, we will be able to more accurately analyze various facets involved in the metabolic processes of organisms, such as the enzymes needed to biosynthesize various useful compounds or the enzymes needed to biodegrade plastics." he added. "DeepECtransformer, which quickly and accurately predicts enzyme functions, is a key technology in functional genomics, enabling us to analyze the function of entire enzymes at the systems level," said Professor Sang Yup Lee. He added, “We will be able to use it to develop eco-friendly microbial factories based on comprehensive genome-scale metabolic models, potentially minimizing missing information of metabolism.” The joint team’s work on DeepECtransformer is described in the paper titled "Functional annotation of enzyme-encoding genes using deep learning with transformer layers" written by Gi Bae Kim, Professor Sang Yup Lee of the Department of Chemical and Biomolecular Engineering of KAIST and their colleagues. The paper was published via peer-review on the 14th of November on “Nature Communications”. This research was conducted with the support by “the Development of next-generation biorefinery platform technologies for leading bio-based chemicals industry project (2022M3J5A1056072)” and by “Development of platform technologies of microbial cell factories for the next-generation biorefineries project (2022M3J5A1056117)” from National Research Foundation supported by the Korean Ministry of Science and ICT (Project Leader: Distinguished Professor Sang Yup Lee, KAIST). < Figure 1. The structure of DeepECtransformer's artificial neural network >

-

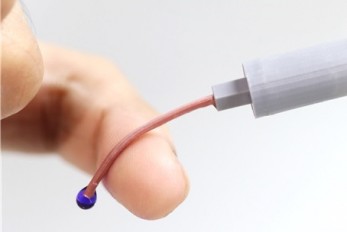

An intravenous needle that irreversibly softens vi..

- A joint research team at KAIST developed an intravenous (IV) needle that softens upon insertion, minimizing risk of damage to blood vessels and tissues. - Once used, it remains soft even at room temperature, preventing accidental needle stick injuries and unethical multiple use of needle. - A thin-film temperature sensor can be embedded with this needle, enabling real-time monitoring of the patient's core body temperature, or detection of unintended fluid leakage, during IV medication. Intravenous (IV) injection is a method commonly used in patient’s treatment worldwide as it induces rapid effects and allows treatment through continuous administration of medication by directly injecting drugs into the blood vessel. However, medical IV needles, made of hard materials such as stainless steel or plastic which do not mechanically match the soft biological tissues of the body, can cause critical problems in healthcare settings, starting from minor tissue damages in the injection sites to serious inflammations. The structure and dexterity of rigid medical IV devices also enable unethical reuse of needles for reduction of injection costs, leading to transmission of deadly blood-borne disease infections such as human immunodeficiency virus (HIV) and hepatitis B/C viruses. Furthermore, unintended needlestick injuries are frequently occurring in medical settings worldwide, that are viable sources of such infections, with IV needles having the greatest susceptibility of being the medium of transmissible diseases. For these reasons, the World Health Organization (WHO) in 2015 launched a policy on safe injection practices to encourage the development and use of “smart” syringes that have features to prevent re-use, after a tremendous increase in the number of deadly infectious disease worldwide due to medical-sharps related issues. 
KAIST announced on the 13th that Professor Jae-Woong Jeong and his research team at its School of Electrical Engineering succeeded in developing the Phase-Convertible, Adapting and non-REusable (P-CARE) needle, a variable-stiffness needle that can improve patient health and ensure the safety of medical staff, through convergent joint research with another team led by Professor Won-Il Jeong of the Graduate School of Medical Sciences. The new technology is expected to allow patients to move without worrying about pain at the injection site, as it reduces the risk of damage to the wall of the blood vessel while patients receive IV medication. This is possible because of the needle’s stiffness-tunable characteristics: it becomes soft and flexible upon insertion into the body due to the increased temperature, adapting to the movement of the thin-walled vein. It is also expected to prevent blood-borne disease infections caused by accidental needlestick injuries or unethical reuse of syringes, as the deformed needle remains perpetually soft even after it is retracted from the injection site. The results of this research, in which Karen-Christian Agno, a doctoral researcher of the School of Electrical Engineering at KAIST, and Dr. Keungmo Yang of the Graduate School of Medical Sciences participated as co-first authors, were published in Nature Biomedical Engineering on October 30. (Paper title: A temperature-responsive intravenous needle that irreversibly softens on insertion) < Figure 1. Disposable variable stiffness intravenous needle. 
(a) Conceptual illustration of the key features of the P-CARE needle whose mechanical properties can be changed by body temperature, (b) Photograph of commonly used IV access devices and the P-CARE needle, (c) Performance of common IV access devices and the P-CARE needle > “We’ve developed this special needle using advanced materials and micro/nano engineering techniques, and it can solve many global problems related to conventional medical needles used in healthcare worldwide”, said Jae-Woong Jeong, Ph.D., an associate professor of Electrical Engineering at KAIST and a lead senior author of the study. The softening IV needle created by the research team is made up of liquid metal gallium that forms the hollow, mechanical needle frame encapsulated within an ultra-soft silicone material. In its solid state, gallium has sufficient hardness that enables puncturing of soft biological tissues. However, gallium melts when it is exposed to body temperature upon insertion, and changes it into a soft state like the surrounding tissue, enabling stable delivery of the drug without damaging blood vessels. Once used, a needle remains soft even at room temperature due to the supercooling phenomenon of gallium, fundamentally preventing needlestick accidents and reuse problems. Biocompatibility of the softening IV needle was validated through in vivo studies in mice. The studies showed that implanted needles caused significantly less inflammation relative to the standard IV access devices of similar size made of metal needles or plastic catheters. The study also confirmed the new needle was able to deliver medications as reliably as commercial injection needles. < Photo 1. Photo of the P-CARE needle that softens with body temperature. > Researchers also showed possibility of integrating a customized ultra-thin temperature sensor with the softening IV needle to measure the on-site temperature which can further enhance patient’s well-being. 
The single sensor-needle assembly can be used to monitor the core body temperature, or even to detect fluid leakage on-site during indwelling use, eliminating the need for additional medical tools or procedures to provide patients with better health care services. The researchers believe that this transformative IV needle can open new opportunities for a wide range of applications, particularly in clinical setups, in terms of redesigning other medical needles and sharp medical tools to reduce muscle tissue injury during indwelling use. The softening IV needle may become even more valuable at present, as an estimated 16 billion medical injections are administered annually on a global scale, yet not all needles are disposed of properly, according to a 2018 WHO report. < Figure 2. Biocompatibility test for P-CARE needle: Images of H&E stained histology (the area inside the dashed box on the left is provided in an expanded view on the right), TUNEL staining (green), DAPI staining of nuclei (blue) and co-staining (TUNEL and DAPI) of muscle tissue from different organs. > < Figure 3. Conceptual images of potential utilization for temperature monitoring function of P-CARE needle integrated with a temperature sensor. > (a) Schematic diagram of injecting a drug through intravenous injection into the abdomen of a laboratory mouse (b) Change of body temperature upon injection of drug (c) Conceptual illustration of normal intravenous drug injection (top) and fluid leakage (bottom) (d) Comparison of body temperature during normal drug injection and fluid leakage: when a fluid leak occurs due to incorrect insertion, a sudden drop in temperature is detected. This work was supported by grants from the National Research Foundation of Korea (NRF) funded by the Ministry of Science and ICT.

-

KAIST proposes alternatives to chemical factories ..

- A computer simulation program “iBridge” was developed at KAIST that can put together microbial cell factories quickly and efficiently to produce cosmetics and food additives, and raw materials for nylons - Eco-friendly and sustainable fermentation process to establish an alternative to chemical plants As climate change and environmental concerns intensify, sustainable microbial cell factories garner significant attention as candidates to replace chemical plants. To develop microorganisms to be used in the microbial cell factories, it is crucial to modify their metabolic processes to induce efficient target chemical production by modulating its gene expressions. Yet, the challenge persists in determining which gene expressions to amplify and suppress, and the experimental verification of these modification targets is a time- and resource-intensive process even for experts. The challenges were addressed by a team of researchers at KAIST (President Kwang-Hyung Lee) led by Distinguished Professor Sang Yup Lee. It was announced on the 9th by the school that a method for building a microbial factory at low cost, quickly and efficiently, was presented by a novel computer simulation program developed by the team under Professor Lee’s guidance, which is named “iBridge”. This innovative system is designed to predict gene targets to either overexpress or downregulate in the goal of producing a desired compound to enable the cost-effective and efficient construction of microbial cell factories specifically tailored for producing the chemical compound in demand from renewable biomass. Systems metabolic engineering is a field of research and engineering pioneered by KAIST’s Distinguished Professor Sang Yup Lee that seeks to produce valuable compounds in industrial demands using microorganisms that are re-configured by a combination of methods including, but not limited to, metabolic engineering, synthetic biology, systems biology, and fermentation engineering. 
In order to improve microorganisms’ capability to produce useful compounds, it is essential to delete, suppress, or overexpress microbial genes. However, it is difficult even for experts to identify the gene targets to modify without experimentally confirming each of them, which can take up an enormous amount of time and resources. The newly developed iBridge identifies positive and negative metabolites within cells, which exert a positive or negative impact on the formation of the product, by calculating the sum of covariances of their outgoing (consuming) reaction fluxes for a target chemical. Subsequently, it pinpoints "bridge" reactions responsible for converting negative metabolites into positive ones as candidates for overexpression, while identifying the opposite reactions as targets for downregulation. The research team successfully utilized the iBridge simulation to establish E. coli microbial cell factories, each producing one of three compounds that are in high demand, at production levels that had not previously been reported anywhere in the world. They developed E. coli strains that can each produce panthenol, a moisturizing agent found in many cosmetics; putrescine, which is one of the key components in nylon production; and 4-hydroxyphenyllactic acid, an anti-bacterial food additive. In addition to these three compounds, the study presents predictions of overexpression and suppression gene targets for constructing microbial factories for 298 other industrially valuable compounds. Dr. Youngjoon Lee, the co-first author of this paper from KAIST, emphasized the accelerated construction of various microbial factories that the newly developed simulation enabled. He stated, "With the use of this simulation, multiple microbial cell factories have been established significantly faster than would have been possible using conventional methods. Microbial cell factories producing a wider range of valuable compounds can now be constructed quickly using this technology."
Professor Sang Yup Lee said, "Systems metabolic engineering is a crucial technology for addressing the current climate change issues." He added, "This simulation could significantly expedite the transition from resorting to conventional chemical factories to utilizing environmentally friendly microbial factories." < Figure. Conceptual diagram of the flow of iBridge simulation > The team’s work on iBridge is described in a paper titled "Genome-Wide Identification of Overexpression and Downregulation Gene Targets Based on the Sum of Covariances of the Outgoing Reaction Fluxes" written by Dr. Won Jun Kim, and Dr. Youngjoon Lee of the Bioprocess Research Center and Professors Hyun Uk Kim and Sang Yup Lee of the Department of Chemical and Biomolecular Engineering of KAIST. The paper was published via peer-review on the 6th of November on “Cell Systems” by Cell Press. This research was conducted with the support from the Development of Platform Technologies of Microbial Cell Factories for the Next-generation Biorefineries Project (Project Leader: Distinguished Professor Sang Yup Lee, KAIST) and Development of Platform Technology for the Production of Novel Aromatic Bioplastic using Microbial Cell Factories Project (Project Leader: Research Professor So Young Choi, KAIST) of the Korean Ministry of Science and ICT.
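The scoring idea described for iBridge (summing covariances of a metabolite's outgoing reaction fluxes, then looking for "bridge" reactions between negative and positive metabolites) can be illustrated with a small Python sketch. This is a toy reading of the description in this article, not the published implementation: it assumes each covariance is taken between an outgoing flux and the target production flux across a set of simulated conditions, and every reaction name, metabolite name, and flux value below is invented for illustration.

```python
# Toy sketch of the "sum of covariances" idea; NOT the published iBridge code.
# Assumption: each outgoing flux is compared with the target production flux
# across simulated conditions. All names and numbers are hypothetical.
from typing import Dict, List, Tuple


def covariance(xs: List[float], ys: List[float]) -> float:
    """Sample covariance of two equally long flux series."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / (n - 1)


def score_metabolites(
    fluxes: Dict[str, List[float]],
    consuming: Dict[str, List[str]],
    target: str,
) -> Dict[str, float]:
    """Sum, per metabolite, the covariances of its consuming fluxes with the target."""
    return {
        met: sum(covariance(fluxes[rxn], fluxes[target]) for rxn in rxns)
        for met, rxns in consuming.items()
    }


def classify_bridges(
    conversions: Dict[str, Tuple[str, str]],
    scores: Dict[str, float],
) -> Tuple[List[str], List[str]]:
    """Negative-to-positive conversions are overexpression candidates; the reverse, downregulation."""
    overexpress, downregulate = [], []
    for rxn, (substrate, product) in conversions.items():
        if scores[substrate] < 0 < scores[product]:
            overexpress.append(rxn)
        elif scores[product] < 0 < scores[substrate]:
            downregulate.append(rxn)
    return overexpress, downregulate


# Invented flux values over four simulated conditions.
fluxes = {
    "R_target": [1.0, 2.0, 3.0, 4.0],      # production of the desired chemical
    "R_waste": [8.0, 6.0, 4.0, 2.0],       # consumes metabolite A
    "R_bridge": [1.0, 2.0, 3.0, 4.0],      # converts A into B
    "R_to_product": [1.0, 2.0, 3.0, 4.0],  # consumes metabolite B
    "R_back": [2.0, 2.0, 1.0, 1.0],        # converts B back into A
}
consuming = {"A": ["R_waste", "R_bridge"], "B": ["R_to_product", "R_back"]}
conversions = {"R_bridge": ("A", "B"), "R_back": ("B", "A")}

scores = score_metabolites(fluxes, consuming, "R_target")
overexpress, downregulate = classify_bridges(conversions, scores)
print(scores)
print("overexpress:", overexpress, "downregulate:", downregulate)
```

In this toy network metabolite A scores negative and B scores positive, so the A-to-B reaction is flagged for overexpression and the B-to-A reaction for downregulation. A real application would work from flux values computed on a genome-scale metabolic model rather than hand-written lists.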

-

KAIST researchers find sleep delays more prevalent..

Sleep has a huge impact on health, well-being and productivity, but how long and how well people sleep these days has not been accurately reported. Previous research on how much and how well we sleep has mostly relied on self-reports or was confined within the data from the unnatural environments of the sleep laboratories. So, the questions remained: Is the amount and quality of sleep purely a personal choice? Could they be independent from social factors such as culture and geography? < From left to right, Sungkyu Park of Kangwon National University, South Korea; Assem Zhunis of KAIST and IBS, South Korea; Marios Constantinides of Nokia Bell Labs, UK; Luca Maria Aiello of the IT University of Copenhagen, Denmark; Daniele Quercia of Nokia Bell Labs and King's College London, UK; and Meeyoung Cha of IBS and KAIST, South Korea > A new study led by researchers at Korea Advanced Institute of Science and Technology (KAIST) and Nokia Bell Labs in the United Kingdom investigated the cultural and individual factors that influence sleep. In contrast to previous studies that relied on surveys or controlled experiments at labs, the team used commercially available smartwatches for extensive data collection, analyzing 52 million logs collected over a four-year period from 30,082 individuals in 11 countries. These people wore Nokia smartwatches, which allowed the team to investigate country-specific sleep patterns based on the digital logs from the devices. < Figure comparing survey and smartwatch logs on average sleep-time, wake-time, and sleep durations. Digital logs consistently recorded delayed hours of wake- and sleep-time, resulting in shorter sleep durations. > Digital logs collected from the smartwatches revealed discrepancies in wake-up times and sleep-times, sometimes by tens of minutes to an hour, from the data previously collected from self-report assessments. 
The average sleep-time overall was calculated to be around midnight, and the average wake-up time was 7:42 AM. The team discovered, however, that individuals' sleep is heavily linked to their geographical location and cultural factors. While wake-up times were similar, sleep-time varied by country. Individuals in higher GDP countries had more records of delayed bedtime. Those in collectivist culture, compared to individualist culture, also showed more records of delayed bedtime. Among the studied countries, Japan had the shortest total sleep duration, averaging a duration of under 7 hours, while Finland had the longest, averaging 8 hours. Researchers calculated essential sleep metrics used in clinical studies, such as sleep efficiency, sleep duration, and overslept hours on weekends, to analyze the extensive sleep patterns. Using Principal Component Analysis (PCA), they further condensed these metrics into two major sleep dimensions representing sleep quality and quantity. A cross-country comparison revealed that societal factors account for 55% of the variation in sleep quality and 63% of the variation in sleep quantity. Countries with a higher individualism index (IDV), which placed greater emphasis on individual achievements and relationships, had significantly longer sleep durations, which could be attributed to such societies having a norm of going to bed early. Spain and Japan, on the other hand, had the bedtime scheduled at the latest hours despite having the highest collectivism scores (low IDV). The study also discovered a moderate relationship between a higher uncertainty avoidance index (UAI), which measures implementation of general laws and regulation in daily lives of regular citizens, and better sleep quality. Researchers also investigated how physical activity can affect sleep quantity and quality to see if individuals can counterbalance cultural influences through personal interventions. 
They discovered that increasing daily activity can improve sleep quality by shortening the time needed to fall asleep and to wake up. Individuals who exercised more, however, did not sleep longer. The effect of exercise differed by country, with more pronounced effects observed in some countries, such as the United States and Finland. Interestingly, in Japan, no obvious effect of exercise could be observed. These findings suggest that the relationship between daily activity and sleep may differ by country and that different exercise regimens may be more effective in different cultures. This research, published in Scientific Reports, an international journal from the publisher of Nature, sheds light on the influence of social factors on sleep. (Paper Title "Social dimensions impact individual sleep quantity and quality" Article number: 9681) One of the co-authors, Daniele Quercia, commented: “Excessive work schedules, long working hours, and late bedtime in high-income countries and social engagement due to high collectivism may cause bedtimes to be delayed.” Commenting on the research, the first author Shaun Sungkyu Park said, "While it is intriguing to see from large-scale data that a society can play a role in determining the quantity and quality of an individual's sleep, the significance of this study is that it quantitatively shows that even within the same culture (country), individual efforts such as daily exercise can have a positive impact on sleep quantity and quality." "Sleep not only has a great impact on one’s well-being but it is also known to be associated with health issues such as obesity and dementia," said the lead author, Meeyoung Cha. "In order to ensure adequate sleep and improve sleep quality in an aging society, individual efforts and social support must work together," she said.
The research team will contribute to the development of the high-tech sleep industry by releasing, free of charge, code that easily calculates the sleep indicators developed in this study, and by providing benchmark data for the various types of sleep research to follow.
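
The dimensionality-reduction step described in this article, in which several sleep metrics are condensed with PCA into two dimensions, can be sketched as follows. This is only a minimal illustration on synthetic data, not the research team's released code: the four metric columns, the two hidden factors, and the function name `pca_two_components` are all assumptions made for the example.

```python
import numpy as np


def pca_two_components(metrics: np.ndarray):
    """Project standardized metrics onto their first two principal components."""
    # Standardize each column so metrics measured in different units are comparable.
    z = (metrics - metrics.mean(axis=0)) / metrics.std(axis=0)
    # SVD of the standardized matrix: the rows of vt are the principal axes.
    _, s, vt = np.linalg.svd(z, full_matrices=False)
    explained = (s ** 2) / np.sum(s ** 2)
    scores = z @ vt[:2].T
    return scores, vt[:2], explained[:2]


# Synthetic data (assumption): two hidden factors drive four observed metrics.
rng = np.random.default_rng(0)
n = 500
quality = rng.normal(size=n)
quantity = rng.normal(size=n)
metrics = np.column_stack([
    quality + 0.3 * rng.normal(size=n),    # hypothetical "sleep efficiency"
    -quality + 0.3 * rng.normal(size=n),   # hypothetical "time to fall asleep"
    quantity + 0.3 * rng.normal(size=n),   # hypothetical "sleep duration"
    quantity + 0.3 * rng.normal(size=n),   # hypothetical "weekend oversleep"
])

scores, axes, explained = pca_two_components(metrics)
print("share of variance kept by two components:", explained.sum())
```

Because the synthetic metrics were built from two factors, two components retain most of the variance here; with real data, the share retained and the interpretation of each component would have to be checked rather than assumed.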

-

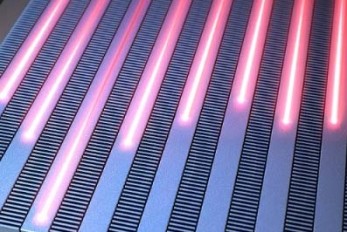

A KAIST research team unveils new path for dense p..

Integrated optical semiconductor (hereinafter referred to as optical semiconductor) technology is a next-generation semiconductor technology that is attracting extensive research and investment worldwide because it can integrate complex optical systems such as LiDAR and quantum sensors and computers into a single small chip. In existing semiconductor technology, the key has been how small devices can be made, down to units of 5 nanometers or 2 nanometers, but in optical semiconductor devices, increasing the degree of integration can be said to be the key technology that determines performance, price, and energy efficiency. KAIST (President Kwang-Hyung Lee) announced on the 19th that a research team led by Professor Sangsik Kim of the Department of Electrical and Electronic Engineering discovered a new optical coupling mechanism that can increase the degree of integration of optical semiconductor devices by more than 100 times. The degree of integration refers to the number of elements that can be configured per chip. However, it is very difficult to increase the degree of integration of optical semiconductor devices, because crosstalk occurs between photons in adjacent devices due to the wave nature of light. In previous studies, it was possible to reduce crosstalk of light only in specific polarizations, but in this study, the research team developed a method to increase the degree of integration even under polarization conditions that were previously considered impossible, by discovering a new light coupling mechanism. This study, led by Professor Sangsik Kim as a corresponding author and conducted with students he taught at Texas Tech University, was published in the international journal 'Light: Science & Applications' [IF=20.257] on June 2nd. (Paper title: Anisotropic leaky-like perturbation with subwavelength gratings enables zero crosstalk). 
Professor Sangsik Kim said, "The interesting thing about this study is that it paradoxically eliminated crosstalk by using leaky waves (light that tends to spread sideways), which were previously thought to increase crosstalk." He went on to add, “If the optical coupling method using the leaky wave revealed in this study is applied, it will be possible to develop various optical semiconductor devices that are smaller and have less noise.” Professor Sangsik Kim is a researcher recognized for his expertise and research in optical semiconductor integration. In his previous research, he developed an all-dielectric metamaterial that can control the degree to which light spreads laterally by patterning a semiconductor structure at a size smaller than the wavelength, and demonstrated experimentally that it improves the degree of integration of optical semiconductors. These studies were reported in ‘Nature Communications’ (Vol. 9, Article 1893, 2018) and ‘Optica’ (Vol. 7, pp. 881-887, 2020). In recognition of these achievements, Professor Kim has received the NSF CAREER Award from the National Science Foundation (NSF) and the Young Scientist Award from the Association of Korean-American Scientists and Engineers. Meanwhile, this research was carried out with support from the New Research Project of Excellence of the National Research Foundation of Korea and the National Science Foundation of the US. < Figure 1. Illustration depicting light propagation without crosstalk in the waveguide array of the developed metamaterial-based optical semiconductor >

-

A KAIST research team develops a high-performance ..

In recent years, there has been a rise in demand for large amounts of data to train AI models and, thus, data size has become increasingly important over time. Accordingly, solid state drives (SSDs, storage devices that use a semiconductor memory unit), which are core storage devices for data centers and cloud services, have also seen an increase in demand. However, the internal components of higher performing SSDs have become more tightly coupled, and this tightly-coupled structure limits SSD from maximized performance. On June 15, a KAIST research team led by Professor Dongjun Kim (John Kim) from the School of Electrical Engineering (EE) announced the development of the first SSD system semiconductor structure that can increase the reading/writing performance of next generation SSDs and extend their lifespan through high-performance modular SSD systems. Professor Kim’s team identified the limitations of the tightly-coupled structures in existing SSD designs and proposed a de-coupled structure that can maximize SSD performance by configuring an internal on-chip network specialized for flash memory. This technique utilizes on-chip network technology, which can freely send packet-based data within the chip and is often used to design non-memory system semiconductors like CPUs and GPUs. Through this, the team developed a ‘modular SSD’, which shows reduced interdependence between front-end and back-end designs, and allows their independent design and assembly. *on-chip network: a packet-based connection structure for the internal components of system semiconductors like CPUs/GPUs. On-chip networks are one of the most critical design components for high-performing system semiconductors, and their importance grows with the size of the semiconductor chip. Professor Kim’s team refers to the components nearer to the CPU as the front-end and the parts closer to the flash memory as back-end. 
They newly constructed an on-chip network specific to flash memory in order to allow data transmission between the back-end’s flash controller, proposing a de-coupled structure that can minimize performance drop. The SSD can accelerate some functions of the flash translation layer, a critical element to drive the SSD, in order to allow flash memory to actively overcome its limitations. Another advantage of the de-coupled, modular structure is that the flash translation layer is not limited to the characteristics of specific flash memories. Instead, their front-end and back-end designs can be carried out independently. Through this, the team could produce 21-times faster response times compared to existing systems and extend SSD lifespan by 23% by also applying the DDS defect detection technique. < Figure 1. Schematic diagram of the structure of a high-performance modular SSD system developed by Professor Dong-Jun Kim's team > This research, conducted by first author and Ph.D. candidate Jiho Kim from the KAIST School of EE and co-author Professor Myoungsoo Jung, was presented on the 19th of June at the 50th IEEE/ACM International Symposium on Computer Architecture, the most prestigious academic conference in the field of computer architecture, held in Orlando, Florida. (Paper Title: Decoupled SSD: Rethinking SSD Architecture through Network-based Flash Controllers) < Figure 2. Conceptual diagram of hardware acceleration through high-performance modular SSD system > Professor Dongjun Kim, who led the research, said, “This research is significant in that it identified the structural limitations of existing SSDs, and showed that on-chip network technology based on system memory semiconductors like CPUs can drive the hardware to actively carry out the necessary actions. We expect this to contribute greatly to the next-generation high-performance SSD market.” He added, “The de-coupled architecture is a structure that can actively operate to extend devices’ lifespan. 
In other words, its significance is not limited to performance, and it can therefore be used for various applications.” KAIST commented that this research is also meaningful in that the results were achieved through a collaborative study between two world-renowned researchers: Professor Myoungsoo Jung, recognized in the field of computer system storage devices, and Professor Dongjun Kim, a leading researcher in computer architecture and interconnection networks. This research was funded by the National Research Foundation of Korea, Samsung Electronics, the IC Design Education Center, and Next Generation Semiconductor Technology and Development granted by the Institute of Information & Communications Technology, Planning & Evaluation.

-

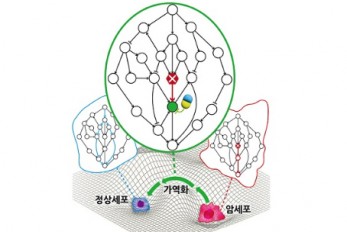

A KAIST Research Team Identifies a Cancer Reversio..

Despite decades of intensive cancer research by numerous biomedical scientists, cancer still holds its place as the number one cause of death in Korea. The fundamental reason behind the limitations of current cancer treatment methods is the fact that they all aim to completely destroy cancer cells, which eventually allows the cancer cells to acquire immunity. In other words, recurrences and side-effects caused by the destruction of healthy cells are inevitable. To this end, some have suggested anticancer treatment methods based on cancer reversion, which can revert cancer cells back to normal or near-normal cells under certain conditions. However, the practical development of this idea has not yet been attempted. On June 8, a KAIST research team led by Professor Kwang-Hyun Cho from the Department of Bio and Brain Engineering reported to have successfully identified the fundamental principle of a process that can revert cancer cells back to normal cells without killing the cells. Professor Cho’s team focused on the fact that unlike normal cells, which react according to external stimuli, cancer cells tend to ignore such stimuli and only undergo uncontrolled cell division. Through computer simulation analysis, the team discovered that the input-output (I/O) relationships that were distorted by genetic mutations could be reverted back to normal I/O relationships under certain conditions. The team then demonstrated through molecular cell experiments that such I/O relationship recovery also occurred in real cancer cells. The results of this study, written by Dr. Jae Il Joo and Dr. Hwa-Jeong Park, were published in Wiley’s Advanced Science online on June 2 under the title, "Normalizing input-output relationships of cancer networks for reversion therapy.“ < Image 1. 
Input-output (I/O) relationships in gene regulatory networks > Professor Kwang-Hyun Cho's research team classified genes into four types by simulation-analyzing the effect of gene mutations on the I/O relationship of gene regulatory networks. (Figure A-J) In addition, by analyzing 18 genes of the cancer-related gene regulatory network, it was confirmed that when mutations occur in more than half of the genes constituting each network, reversibility is possible through appropriate control. (Figure K) Professor Cho’s team uncovered that the reason the distorted I/O relationships of cancer cells could be reverted back to normal ones was the robustness and redundancy of intracellular gene control networks that developed over the course of evolution. In addition, they found that some genes were more promising as targets for cancer reversion than others, and showed through molecular cell experiments that controlling such genes could revert the distorted I/O relationships of cancer cells back to normal ones. < Image 2. Simulation results of restoration of bladder cancer gene regulation network and I/O relationship of bladder cancer cells. > The research team classified the effects of gene mutations on the I/O relationship in the bladder cancer gene regulation network by simulation analysis and classified them into 4 types. (Figure A) Through this, it was found that the distorted input-output relationship between bladder cancer cell lines KU-1919 and HCT-1197 could be restored to normal. (Figure B) < Image 3. Analysis of survival of bladder cancer patients according to reversible gene mutation and I/O recovery experiment of bladder cancer cells. > As predicted through network simulation analysis, Professor Kwang-Hyun Cho's research team confirmed through molecular cell experiments that the response to TGF-b was normally restored when AKT and MAP3K1 were inhibited in the bladder cancer cell line KU-1919. 
(Figure A-G) In addition, it was confirmed that there is a difference in the survival rate of bladder cancer patients depending on the presence or absence of a reversible gene mutation. (Figure H) The results of this research show that the reversion of real cancer cells does not happen by chance, and that it is possible to systematically explore targets that can induce this phenomenon, thereby creating the potential for the development of innovative anticancer drugs that can control such target genes. < Image 4. Cancer cell reversibility principle > The research team analyzed the reversibility, redundancy, and robustness of various networks and found that there was a positive correlation between them. From this, it was found that reversibility was additionally inherent in the process of evolution in which the gene regulatory network acquired redundancy and consistency. Professor Cho said, “By uncovering the fundamental principles of a new cancer reversion treatment strategy that may overcome the unresolved limitations of existing chemotherapy, we have increased the possibility of developing new and innovative drugs that can improve both the prognosis and quality of life of cancer patients.” < Image 5. Conceptual diagram of research results > The research team identified the fundamental control principle of cancer cell reversibility through systems biology research. When the I/O relationship of the intracellular gene regulatory network is distorted by mutation, the distorted I/O relationship can be restored to a normal state by identifying and adjusting the reversible gene target based on the redundancy of the molecular circuit inherent in the complex network. After Professor Cho’s team first suggested the concept of reversion treatment, they published their results for reverting colorectal cancer in January 2020, and in January 2022 they successfully re-programmed malignant breast cancer cells back into hormone-treatable ones. 
In January 2023, the team successfully removed the metastasis ability from lung cancer cells and reverted them back to a state that allowed improved drug reactivity. However, these results were case studies of specific types of cancer and did not reveal what common principle allowed cancer reversion across all cancer types, making this the first revelation of the general principle of cancer reversion and its evolutionary origins. This research was funded by the Ministry of Science and ICT of the Republic of Korea and the National Research Foundation of Korea.
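
The idea of a distorted and then restored input-output (I/O) relationship can be illustrated with a deliberately tiny Boolean network. This is a toy sketch and not the team's model: the node names (`receptor`, `kinase`, `blocker`), the wiring, and the function names are hypothetical, chosen only to show how a mutation can make a cell ignore its stimulus and how pinning one downstream node can bring the normal response back.

```python
def response(stimulus: bool, mutated: bool = False, inhibit_blocker: bool = False) -> bool:
    """Return the network output for one stimulus value (toy model, hypothetical wiring)."""
    receptor = stimulus                      # the receptor simply follows the stimulus
    kinase = mutated                         # a mutated kinase is constitutively active
    blocker = kinase and not inhibit_blocker # the control input pins the blocker to "off"
    return receptor and not blocker          # the output responds only if it is not blocked


def io_table(**settings) -> dict:
    """Tabulate the output for both possible stimulus values."""
    return {stimulus: response(stimulus, **settings) for stimulus in (False, True)}


normal = io_table()
mutant = io_table(mutated=True)
controlled = io_table(mutated=True, inhibit_blocker=True)

print("normal:    ", normal)
print("mutant:    ", mutant)
print("controlled:", controlled)
```

In this toy network the mutant output stays off whatever the stimulus is, while the controlled network reproduces the normal table. Real gene regulatory networks are far larger, and finding which nodes to control is the hard part that the study addresses through simulation and experiment.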

-

Synthetic sRNAs to knockdown genes in medical and ..

Bacteria are intimately involved in our daily lives. These microorganisms have been used throughout human history to make foods such as cheese, yogurt, and wine. In more recent years, through metabolic engineering, microorganisms have been used extensively as microbial cell factories to manufacture plastics, feed for livestock, dietary supplements, and drugs. However, in addition to these bacteria that are beneficial to human lives, pathogens such as pneumonia-causing bacteria, Salmonella, and Staphylococcus that cause various infectious diseases are also ubiquitously present. It is important to be able to metabolically control beneficial industrial bacteria for the production of high value-added chemicals and to manipulate harmful pathogens to suppress their pathogenic traits. KAIST (President Kwang Hyung Lee) announced on the 10th that a research team led by Distinguished Professor Sang Yup Lee of the Department of Chemical and Biomolecular Engineering has developed a new sRNA tool that can effectively inhibit target genes in various bacteria, including both Gram-negative and Gram-positive bacteria. The research results were published online on April 24 in Nature Communications. ※ Paper title: Targeted and high-throughput gene knockdown in diverse bacteria using synthetic sRNAs ※ Author information: Jae Sung Cho (co-1st), Dongsoo Yang (co-1st), Cindy Pricilia Surya Prabowo (co-author), Mohammad Rifqi Ghiffary (co-author), Taehee Han (co-author), Kyeong Rok Choi (co-author), Cheon Woo Moon (co-author), Hengrui Zhou (co-author), Jae Yong Ryu (co-author), Hyun Uk Kim (co-author) and Sang Yup Lee (corresponding author). Synthetic sRNA is an effective tool for regulating target genes in E. coli, but it has been difficult to apply beyond Gram-negative bacteria such as E. coli to industrially useful Gram-positive bacteria such as Bacillus subtilis and Corynebacterium. 
To address this issue, a research team led by Distinguished Professor Sang Yup Lee of the Department of Chemical and Biomolecular Engineering at KAIST developed a new sRNA platform that can effectively suppress target genes in various bacteria, including both Gram-negative and Gram-positive bacteria. The research team surveyed thousands of microbial-derived sRNA systems in the microbial database, and eventually selected the sRNA system derived from 'Bacillus subtilis' that showed the highest gene knockdown efficiency, designating it “Broad-Host-Range sRNA”, or BHR-sRNA. A similar well-known system is the CRISPR interference (CRISPRi) system, which is a modified CRISPR system that knocks down gene expression by suppressing the gene transcription process. However, the Cas9 protein in the CRISPRi system has a very high molecular weight, and there have been reports of growth inhibition in bacteria. The BHR-sRNA system developed in this study did not affect bacterial growth while showing similar gene knockdown efficiencies to CRISPRi. < Figure 1. a) Schematic illustration demonstrating the mechanism of synthetic sRNA b) Phylogenetic tree of the 16 Gram-negative and Gram-positive bacterial species tested for gene knockdown by the BHR-sRNA system. > To validate the versatility of the BHR-sRNA system, 16 different Gram-negative and Gram-positive bacteria were selected and tested, and the BHR-sRNA system worked successfully in 15 of them. In addition, it was demonstrated that the gene knockdown capability was more effective than that of the existing E. coli-based sRNA system in 10 bacteria. The BHR-sRNA system proved to be a universal tool capable of effectively inhibiting gene expression in various bacteria. To address the increasingly serious problem of antibiotic-resistant pathogens, the BHR-sRNA was shown to reduce pathogenicity by suppressing the genes that produce virulence factors. 
By using BHR-sRNA, biofilm formation, one of the factors resulting in antibiotic resistance, was inhibited by 73% in Staphylococcus epidermidis, a pathogen that can cause hospital-acquired infections. Antibiotic resistance was also weakened by 58% in the pneumonia-causing bacterium Klebsiella pneumoniae. In addition, BHR-sRNA was applied to industrial bacteria to develop microbial cell factories that produce high value-added chemicals with better production performance. Notably, superior industrial strains were constructed with the aid of BHR-sRNA to produce the following chemicals: valerolactam, a raw material for polyamide polymers; methyl anthranilate, a grape-flavor food additive; and indigoidine, a blue-toned natural dye. The BHR-sRNA developed through this study will help expedite the commercialization of bioprocesses to produce high value-added compounds and materials such as artificial meat, jet fuel, health supplements, pharmaceuticals, and plastics. It is also anticipated to help eradicate antibiotic-resistant pathogens in preparation for another upcoming pandemic. “In the past, we could only develop new tools for gene knockdown for each bacterium, but now we have developed a tool that works for a variety of bacteria,” said Distinguished Professor Sang Yup Lee. This work was supported by the Development of Next-generation Biorefinery Platform Technologies for Leading Bio-based Chemicals Industry Project and the Development of Platform Technologies of Microbial Cell Factories for the Next-generation Biorefineries Project from NRF supported by the Korean MSIT.

-

A biohybrid system to extract 20 times more biopla..

As the issues surrounding global climate change intensify, more attention and determined efforts are required to recognize the issue as a “crisis” and respond to it properly. Among the various methods of recycling CO2, electrochemical CO2 conversion is a technology that can convert CO2 into useful chemical substances using electrical energy. Since its facilities are easy to operate and it can use electricity from renewable sources such as solar cells or wind power, it has received a lot of attention as an eco-friendly technology that can contribute to reducing greenhouse gases and achieving carbon neutrality. KAIST (President Kwang Hyung Lee) announced on the 30th that a joint research team led by Professor Hyunjoo Lee and Distinguished Professor Sang Yup Lee of the Department of Chemical and Biomolecular Engineering succeeded in developing a technology that produces bioplastics from CO2 with high efficiency by developing a hybrid system that links electrochemical CO2 conversion with microbial bioconversion. The results of the research, which showed the world's highest productivity, more than 20 times that of similar systems, were published online on March 27th in the "Proceedings of the National Academy of Sciences (PNAS)". ※ Paper title: Biohybrid CO2 electrolysis for the direct synthesis of polyesters from CO2 ※ Author information: Jinkyu Lim (currently at Stanford Linear Accelerator Center, co-first author), So Young Choi (KAIST, co-first author), Jae Won Lee (KAIST, co-first author), Hyunjoo Lee (KAIST, corresponding author), Sang Yup Lee (KAIST, corresponding author) For the efficient conversion of CO2, high-efficiency electrode catalysts and systems are actively being developed. As conversion products, only compounds containing one to three carbon atoms are produced, and only on a limited basis. Small compounds such as CO, formic acid, and ethylene are produced with relatively high efficiency. 
Liquid compounds of several carbons, such as ethanol, acetic acid, and propanol, can also be produced by these systems, but because the chemical reactions involved require more electrons, conversion efficiency and product selectivity are limited. Accordingly, a joint research team led by Professor Hyunjoo Lee and Distinguished Professor Sang Yup Lee of the Department of Chemical and Biomolecular Engineering at KAIST developed a technology to produce bioplastics from CO2 by linking electrochemical conversion technology with a bioconversion method that uses microorganisms. This electrochemical-bio hybrid system consists of an electrolyzer, in which electrochemical conversion reactions occur, connected to a fermenter, in which microorganisms are cultured. When CO2 is converted to formic acid in the electrolyzer, it is fed into the fermenter, in which microbes, Cupriavidus necator in this case, consume the carbon source to produce polyhydroxyalkanoate (PHA), a microbial-derived bioplastic. Existing hybrid concepts suffered from low productivity or were limited to non-continuous processes, due to the low efficiency of the electrolysis and irregular results arising from the culturing conditions of the microbes. In order to overcome these problems, the joint research team made formic acid with a gas diffusion electrode using gaseous CO2. In addition, the team developed a 'physiologically compatible catholyte' that can be used both as a culture medium for microorganisms and as an electrolyte, allowing the electrolysis to proceed sufficiently without inhibiting the growth of microorganisms. This made it possible to supply the formic acid directly to the microorganisms without an additional separation and purification process. 
Through this, the electrolyte solution containing formic acid made from CO2 enters the fermentation tank, is used for microbial culture, and then returns to the electrolyzer to be circulated, maximizing the utilization of the electrolyte solution and the remaining formic acid. In addition, a filter was installed so that only electrolyte solution from which the microorganisms that could affect the electrolysis have been filtered out is supplied back to the electrolyzer, and so that the microorganisms exist only in the fermenter, allowing the two systems to work together efficiently. Through the developed hybrid system, the bioplastic poly-3-hydroxybutyrate (PHB) was produced from CO2 at up to 83% of the cell dry weight, yielding 1.38 g of PHB from a 4 cm2 electrode, which is the world's first gram-level production and is more than 20 times more productive than previous research. In addition, the hybrid system is expected to be applied to various industrial processes in the future, as it shows promise as a continuous culture system. The corresponding authors, Professor Hyunjoo Lee and Distinguished Professor Sang Yup Lee, noted that “The results of this research are technologies that can be applied to the production of various chemical substances as well as bioplastics, and are expected to be used as key parts needed in achieving carbon neutrality in the future.” This research was supported by the CO2 Reduction Catalyst and Energy Device Technology Development Project, the Heterogeneous Atomic Catalyst Control Project, and the Next-generation Biorefinery Source Technology Development Project to lead the Biochemical Industry of the Oil-replacement Eco-friendly Chemical Technology Development Program by the Ministry of Science and ICT. Figure 1. Schematic diagram and photo of the biohybrid CO2 electrolysis system. (A) A conceptual scheme and (B) a photograph of the biohybrid CO2 electrolysis system. 
(C) A detailed scheme of reaction inside the system. Gaseous CO2 was converted to formate in the electrolyzer, and the formate was converted to PHB by the cells in the fermenter. The catholyte was developed so that it is compatible with both CO2 electrolysis and fermentation and was continuously circulated.

-

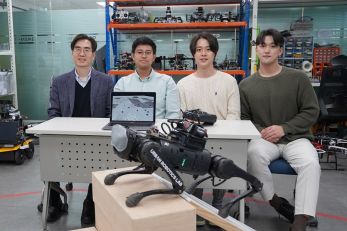

KAIST debuts “DreamWaQer” - a quadrupedal robot th..

- The team led by Professor Hyun Myung of the School of Electrical Engineering developed “DreamWaQ”, a deep reinforcement learning-based control technology that lets a walking robot walk in atypical environments without visual and/or tactile information - Utilization of “DreamWaQ” technology can enable mass production of various types of “DreamWaQers” - Expected to be used in the exploration of atypical environments involving unique circumstances such as fire disasters. A team of Korean engineering researchers has developed a quadrupedal robot technology that can climb up and down steps and move without falling over on uneven terrain such as tree roots, without the help of visual or tactile sensors, even in disaster situations in which visual confirmation is impeded by darkness or thick smoke from flames. KAIST (President Kwang Hyung Lee) announced on the 29th of March that Professor Hyun Myung's research team at the Urban Robotics Lab in the School of Electrical Engineering developed a walking robot control technology that enables robust 'blind locomotion' in various atypical environments. < (From left) Prof. Hyun Myung, Doctoral Candidates I Made Aswin Nahrendra, Byeongho Yu, and Minho Oh. In the foreground is the DreamWaQer, a quadrupedal robot equipped with DreamWaQ technology. > The KAIST research team developed the "DreamWaQ" technology, so named because it enables walking robots to move about even in the dark, just as a person fresh out of bed can walk to the bathroom in the dark without visual help. With this technology installed on any legged robot, it will be possible to create various types of "DreamWaQers". Existing walking robot controllers are based on kinematics and/or dynamics models. This is referred to as a model-based control method. 
In particular, on atypical environments like the open, uneven fields, it is necessary to obtain the feature information of the terrain more quickly in order to maintain stability as it walks. However, it has been shown to depend heavily on the cognitive ability to survey the surrounding environment. In contrast, the controller developed by Professor Hyun Myung's research team based on deep reinforcement learning (RL) methods can quickly calculate appropriate control commands for each motor of the walking robot through data of various environments obtained from the simulator. Whereas the existing controllers that learned from simulations required a separate re-orchestration to make it work with an actual robot, this controller developed by the research team is expected to be easily applied to various walking robots because it does not require an additional tuning process. DreamWaQ, the controller developed by the research team, is largely composed of a context estimation network that estimates the ground and robot information and a policy network that computes control commands. The context-aided estimator network estimates the ground information implicitly and the robot’s status explicitly through inertial information and joint information. This information is fed into the policy network to be used to generate optimal control commands. Both networks are learned together in the simulation. While the context-aided estimator network is learned through supervised learning, the policy network is learned through an actor-critic architecture, a deep RL methodology. The actor network can only implicitly infer surrounding terrain information. In the simulation, the surrounding terrain information is known, and the critic, or the value network, that has the exact terrain information evaluates the policy of the actor network. This whole learning process takes only about an hour in a GPU-enabled PC, and the actual robot is equipped with only the network of learned actors. 
Without looking at the surrounding terrain, the robot goes through a process of imagining which of the various environments learned in the simulation its current environment resembles, using only the inertial sensor (IMU) inside the robot and the measurement of joint angles. If it suddenly encounters an offset, such as a staircase, it will not know until its foot touches the step, but it will quickly draw up terrain information the moment its foot touches the surface. Then the control command suitable for the estimated terrain information is transmitted to each motor, enabling rapidly adapted walking. The DreamWaQer robot walked not only in the laboratory environment, but also in an outdoor environment around the campus with many curbs and speed bumps, and over a field with many tree roots and gravel, demonstrating its abilities by overcoming stairs with a height difference of two-thirds the height of its body. In addition, the research team confirmed that, regardless of the environment, it was capable of stable walking at speeds ranging from a slow 0.3 m/s to a rather fast 1.0 m/s. The results of this study were produced by doctoral student I Made Aswin Nahrendra as the first author and his colleague Byeongho Yu as a co-author. The paper has been accepted for presentation at the upcoming IEEE International Conference on Robotics and Automation (ICRA), scheduled to be held in London at the end of May. (Paper title: DreamWaQ: Learning Robust Quadrupedal Locomotion With Implicit Terrain Imagination via Deep Reinforcement Learning) Videos of the walking robot DreamWaQer equipped with the developed DreamWaQ can be found at the addresses below. Main Introduction: https://youtu.be/JC1_bnTxPiQ Experiment Sketches: https://youtu.be/mhUUZVbeDA0 Meanwhile, this research was carried out with support from the Robot Industry Core Technology Development Program of the Ministry of Trade, Industry and Energy (MOTIE). 
(Task title: Development of Mobile Intelligence SW for Autonomous Navigation of Legged Robots in Dynamic and Atypical Environments for Real Application) < Figure 1. Overview of DreamWaQ, a controller developed by this research team. This network consists of an estimator network that learns implicit and explicit estimates together, a policy network that acts as a controller, and a value network that provides guides to the policies during training. When implemented in a real robot, only the estimator and policy network are used. Both networks run in less than 1 ms on the robot's on-board computer. > < Figure 2. Since the estimator can implicitly estimate the ground information as the foot touches the surface, it is possible to adapt quickly to rapidly changing ground conditions. > < Figure 3. Results showing that even a small walking robot was able to overcome steps with height differences of about 20cm. >
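
The deployment-time data flow described in this article, in which an estimator network feeds a policy network and the value network is used only during training, can be sketched roughly as follows. This is a structural illustration under stated assumptions, not the DreamWaQ implementation: the layer sizes, the observation and latent dimensions, the choice of a three-value explicit state estimate, and the random untrained weights are all hypothetical.

```python
import numpy as np

# All dimensions below are assumptions chosen for illustration.
OBS_DIM = 45        # one proprioceptive reading (IMU plus joint measurements)
HISTORY = 5         # number of recent readings given to the estimator
EXPLICIT_DIM = 3    # explicit estimate of the robot's state
LATENT_DIM = 16     # implicit (latent) terrain estimate
NUM_JOINTS = 12     # one command per motor of a quadruped


def make_mlp(sizes, rng):
    """Create random (untrained) weights and zero biases for a small MLP."""
    return [(rng.normal(scale=0.1, size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]


def forward(layers, x):
    """Run an MLP with tanh activations on every layer except the last."""
    for index, (weights, bias) in enumerate(layers):
        x = x @ weights + bias
        if index < len(layers) - 1:
            x = np.tanh(x)
    return x


rng = np.random.default_rng(0)
estimator = make_mlp([OBS_DIM * HISTORY, 128, EXPLICIT_DIM + LATENT_DIM], rng)
policy = make_mlp([OBS_DIM + EXPLICIT_DIM + LATENT_DIM, 128, NUM_JOINTS], rng)


def control_step(obs_history: np.ndarray) -> np.ndarray:
    """Map recent proprioceptive readings to one command per joint."""
    estimate = forward(estimator, obs_history.reshape(-1))
    explicit_state, terrain_latent = estimate[:EXPLICIT_DIM], estimate[EXPLICIT_DIM:]
    # The policy sees the latest reading together with both estimates.
    policy_input = np.concatenate([obs_history[-1], explicit_state, terrain_latent])
    return forward(policy, policy_input)


command = control_step(rng.normal(size=(HISTORY, OBS_DIM)))
print(command.shape)
```

No camera or tactile input appears anywhere in `control_step`, which mirrors the "blind locomotion" setting: terrain information reaches the policy only through the latent estimate derived from inertial and joint measurements.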