Faculty News

-

Professor Kang’s Team Receives the IEEE Jack Newba..

< Professor Kang (far left), Professor Sung-Ah Chung at KNU, and Professor Osvaldo Simeone of KCL >

Professor Joonhyuk Kang of the School of Electrical Engineering received the IEEE Vehicular Technology Society’s 2021 Jack Neubauer Memorial Award for his team’s paper published in IEEE Transactions on Vehicular Technology. The Jack Neubauer Memorial Award recognizes the best paper published in IEEE Transactions on Vehicular Technology in the last five years.

The authors, Professor Kang, Professor Sung-Ah Chung of Kyungpook National University, and Professor Osvaldo Simeone of King's College London, reported their research, titled “Mobile Edge Computing via a UAV-Mounted Cloudlet: Optimization of Bit Allocation and Path Planning,” in IEEE Transactions on Vehicular Technology, Vol. 67, No. 3, pp. 2049-2063, in March 2018. The paper shows how to optimize the trajectory of an unmanned aerial vehicle and allocate resources when the vehicle performs edge computing to assist the computations of mobile devices. The paper has been cited nearly 400 times, according to Google Scholar.

"We are very happy to see the results of proposing edge computing using unmanned aerial vehicles by applying optimization theory, and conducting research on trajectory and resource utilization of unmanned aerial vehicles that minimize power consumption," said Professor Kang.

-

Hydrogel-Based Flexible Brain-Machine Interface

The interface is easy to insert into the body when dry, but behaves ‘stealthily’ inside the brain when wet

< Figure 1. Schematic of Hydrogel Hybrid Brain-Machine Interfaces >

Professor Seongjun Park’s research team and collaborators revealed a newly developed hydrogel-based flexible brain-machine interface. To study the structure of the brain or to identify and treat neurological diseases, it is crucial to develop an interface that can stimulate the brain and detect its signals in real time. However, existing neural interfaces are mechanically and chemically different from real brain tissue. This causes a foreign body response and the formation of an insulating layer (glial scar) around the interface, which shortens its lifespan.

To solve this problem, the research team developed a ‘brain-mimicking interface’ by inserting a custom-made multifunctional fiber bundle into the hydrogel body. The device contains not only an optical fiber that controls specific nerve cells with light for optogenetic procedures, but also an electrode bundle to read brain signals and a microfluidic channel to deliver drugs to the brain. The interface is easy to insert into the body when dry, because dried hydrogels become solid. Once in the body, however, the hydrogel quickly absorbs body fluids and takes on properties resembling those of its surrounding tissues, thereby minimizing the foreign body response.

The research team applied the device to animal models and showed that it was possible to detect neural signals for up to six months, which is far beyond what had been previously recorded. It was also possible to conduct long-term optogenetic and behavioral experiments on freely moving mice with a significant reduction in foreign body responses such as glial and immunological activation compared to existing devices.
“This research is significant in that it was the first to utilize a hydrogel as part of a multifunctional neural interface probe, which increased its lifespan dramatically,” said Professor Park. “With our discovery, we look forward to advancements in research on neurological disorders like Alzheimer’s or Parkinson’s disease that require long-term observation.”

The research was published in Nature Communications on June 8, 2021. (Title: Adaptive and multifunctional hydrogel hybrid probes for long-term sensing and modulation of neural activity) The study was conducted jointly with an MIT research team composed of Professor Polina Anikeeva, Professor Xuanhe Zhao, and Dr. Hyunwoo Yook. This research was supported by the National Research Foundation (NRF) grant for emerging research, Korea Medical Device Development Fund, KK-JRC Smart Project, KAIST Global Initiative Program, and Post-AI Project.

< Figure 2. Design and Fabrication of Multifunctional Hydrogel Hybrid Probes >

-Publication Park, S., Yuk, H., Zhao, R. et al. Adaptive and multifunctional hydrogel hybrid probes for long-term sensing and modulation of neural activity. Nat Commun 12, 3435 (2021). https://doi.org/10.1038/s41467-021-23802-9

-Profile Professor Seongjun Park, Bio and Neural Interfaces Laboratory, Department of Bio and Brain Engineering, KAIST

-

Biomimetic Resonant Acoustic Sensor Detecting Far-..

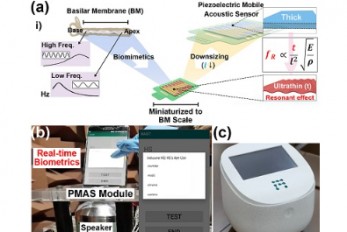

A KAIST research team led by Professor Keon Jae Lee from the Department of Materials Science and Engineering has developed a bioinspired flexible piezoelectric acoustic sensor with a multi-resonant ultrathin piezoelectric membrane that mimics the basilar membrane of the human cochlea. The flexible acoustic sensor has been miniaturized for embedding into smartphones, and the first commercial prototype is ready for accurate and far-distant voice detection.

In 2018, Professor Lee presented the first concept of a flexible piezoelectric acoustic sensor, inspired by the fact that humans can accurately detect far-distant voices using a multi-resonant trapezoidal membrane with 20,000 hair cells. However, previous acoustic sensors could not be integrated into commercial products like smartphones and AI speakers due to their large device size.

In this work, the research team fabricated a mobile-sized acoustic sensor by adopting ultrathin piezoelectric membranes with high sensitivity. Simulation studies proved that the ultrathin polymer underneath the inorganic piezoelectric thin film can broaden the resonant bandwidth to cover the entire voice frequency range using seven channels. Based on this theory, the research team successfully demonstrated the miniaturized acoustic sensor mounted in commercial smartphones and AI speakers for machine learning-based biometric authentication and voice processing. (Please refer to the explanatory movie KAIST Flexible Piezoelectric Mobile Acoustic Sensor).

The resonant mobile acoustic sensor has superior sensitivity and multi-channel signals compared to conventional condenser microphones with a single channel, and it has shown highly accurate and far-distant speaker identification with a small amount of voice training data. The error rate of speaker identification was significantly reduced by 56% (with 150 training datasets) and 75% (with 2,800 training datasets) compared to that of a MEMS condenser device.
Professor Lee said, “Recently, Google has been targeting the ‘Wolverine Project’ on far-distant voice separation from multi-users for next-generation AI user interfaces. I expect that our multi-channel resonant acoustic sensor with abundant voice information is the best fit for this application. Currently, the mass production process is on the verge of completion, so we hope that this will be used in our daily lives very soon.”

Professor Lee also established a startup company called Fronics Inc., located in both Korea and the U.S. (branch office), to commercialize this flexible acoustic sensor and is seeking collaborations with global AI companies. These research results, entitled “Biomimetic and Flexible Piezoelectric Mobile Acoustic Sensors with Multi-Resonant Ultrathin Structures for Machine Learning Biometrics,” were published in Science Advances in 2021 (7, eabe5683).

< Figure: (a) Schematic illustration of the basilar membrane-inspired flexible piezoelectric mobile acoustic sensor (b) Real-time voice biometrics based on machine learning algorithms (c) The world’s first commercial production of a mobile-sized acoustic sensor. >

-Publication “Biomimetic and flexible piezoelectric mobile acoustic sensors with multiresonant ultrathin structures for machine learning biometrics,” Science Advances (DOI: 10.1126/sciadv.abe5683)

-Profile Professor Keon Jae Lee, Department of Materials Science and Engineering, Flexible and Nanobio Device Lab (http://fand.kaist.ac.kr/), KAIST

-

Natural Rainbow Colorants Microbially Produced

Integrated strategies of systems metabolic engineering and membrane engineering led to the production of natural rainbow colorants comprising seven natural colorants from bacteria for the first time

< Systems metabolic engineering was employed to construct and optimize the metabolic pathways and membrane engineering was employed to increase the production of the target colorants, successfully producing the seven natural colorants covering the complete rainbow spectrum. >

A research group at KAIST has engineered bacterial strains capable of producing three carotenoids and four violacein derivatives, completing the seven colors in the rainbow spectrum. The research team integrated systems metabolic engineering and membrane engineering strategies for the production of seven natural rainbow colorants in engineered Escherichia coli strains. The strategies will also be useful for the efficient production of other industrially important natural products used in the food, pharmaceutical, and cosmetic industries.

Colorants are widely used in our lives and are directly related to human health when we eat food additives and wear cosmetics. However, most of these colorants are made from petroleum, causing unexpected side effects and health problems. Furthermore, they raise environmental concerns such as water pollution from dyeing fabric in the textiles industry. For these reasons, the demand for the production of natural colorants using microorganisms has increased, but this could not be readily realized due to the high cost and low yield of the bioprocesses.

These challenges inspired the metabolic engineers at KAIST, including researchers Dr. Dongsoo Yang and Dr. Seon Young Park, and Distinguished Professor Sang Yup Lee from the Department of Chemical and Biomolecular Engineering. The team reported the study entitled “Production of rainbow colorants by metabolically engineered Escherichia coli” in Advanced Science online on May 5.
It was selected as the journal cover of the July 7 issue. This research reports for the first time the production of rainbow colorants comprising three carotenoids and four violacein derivatives from glucose or glycerol via systems metabolic engineering and membrane engineering.

The research group focused on the production of hydrophobic natural colorants useful for lipophilic food and dyeing garments. First, using systems metabolic engineering, which is an integrated technology to engineer the metabolism of a microorganism, three carotenoids comprising astaxanthin (red), β-carotene (orange), and zeaxanthin (yellow), and four violacein derivatives comprising proviolacein (green), prodeoxyviolacein (blue), violacein (navy), and deoxyviolacein (purple) could be produced. Thus, the production of natural colorants covering the complete rainbow spectrum was achieved.

When hydrophobic colorants are produced by microorganisms, the colorants accumulate inside the cell. As the accumulation capacity is limited, the hydrophobic colorants could not be produced at concentrations higher than this limit. In this regard, the researchers engineered the cell morphology and generated inner-membrane vesicles (spherical membranous structures) to increase the intracellular capacity for accumulating the natural colorants. To further promote production, the researchers generated outer-membrane vesicles to secrete the natural colorants, thus succeeding in efficiently producing all seven rainbow colorants. It was even more impressive that the production of natural green and navy colorants was achieved for the first time.

“The production of the seven natural rainbow colorants that can replace the current petroleum-based synthetic colorants was achieved for the first time,” said Dr. Dongsoo Yang.
He explained that another important point of the research is that the integrated metabolic engineering strategies developed in this study are generally applicable to the efficient production of other natural products useful as pharmaceuticals or nutraceuticals. “As maintaining good health in an aging society is becoming increasingly important, we expect that the technology and strategies developed here will play pivotal roles in producing other valuable natural products of medical or nutritional importance,” explained Distinguished Professor Sang Yup Lee.

This work was supported by the "Cooperative Research Program for Agriculture Science & Technology Development (Project No. PJ01550602)" Rural Development Administration, Republic of Korea.

-Publication: Dongsoo Yang, Seon Young Park, and Sang Yup Lee. Production of rainbow colorants by metabolically engineered Escherichia coli. Advanced Science, 2100743.

-Profile Distinguished Professor Sang Yup Lee, Metabolic & Biomolecular Engineering National Research Laboratory (http://mbel.kaist.ac.kr), Department of Chemical and Biomolecular Engineering, KAIST

-

‘Urban Green Space Affects Citizens’ Happiness’

Study finds the relationship between green space, the economy, and happiness

< Figure 1. (a) The map of urban green space and happiness in 60 developed countries. The size and color of circles represent the level of happiness and urban green space in a country, respectively. The markers are placed on the most populated cities of each country. (b) Urban green space is measured by the UGS in four world cities. The green areas indicate the adjusted NDVI per capita (i.e., UGS) for every 10m by 10m pixel. >

A recent study revealed that as a city becomes more economically developed, its citizens’ happiness becomes more directly related to the area of urban green space. A joint research project by Professor Meeyoung Cha of the School of Computing and her collaborators studied the relationship between green space and citizen happiness by analyzing big data from satellite images of 60 different countries.

Urban green space, including parks, gardens, and riversides, not only provides aesthetic pleasure, but also positively affects our health by promoting physical activity and social interactions. Most of the previous research attempting to verify the correlation between urban green space and citizen happiness was based on a few developed countries. Therefore, it was difficult to identify whether the positive effects of green space are global, or merely phenomena that depend on the economic state of the country. There have also been limitations in data collection, as it is difficult to visit each location or carry out investigations on a large scale based on aerial photographs.

The research team used data collected by Sentinel-2, a high-resolution satellite operated by the European Space Agency (ESA), to investigate 90 green spaces from 60 different countries around the world.
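The Figure 1 caption describes the green space measure (UGS) as an adjusted NDVI per capita. NDVI, the Normalized Difference Vegetation Index, is a standard quantity computed per pixel from near-infrared and red reflectance as (NIR - Red) / (NIR + Red). The following is a minimal sketch of that idea only. The function `green_score_per_capita`, its `threshold` parameter, and the sample reflectance values are hypothetical, and the article does not specify how the study's adjustment is actually performed.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index for a single pixel."""
    total = nir + red
    if total == 0:
        return 0.0  # avoid division by zero on empty pixels
    return (nir - red) / total


def green_score_per_capita(nir_band, red_band, population, threshold=0.0):
    """Sum NDVI over pixels above `threshold`, divided by population.

    The threshold and the per-capita normalization are illustrative
    assumptions, not the adjustment used in the study.
    """
    total = 0.0
    for nir_row, red_row in zip(nir_band, red_band):
        for nir, red in zip(nir_row, red_row):
            value = ndvi(nir, red)
            if value > threshold:
                total += value
    return total / population


# Hypothetical 2x2 reflectance tiles (made-up values for illustration).
nir_tile = [[0.5, 0.4], [0.1, 0.3]]
red_tile = [[0.1, 0.1], [0.1, 0.3]]
score = green_score_per_capita(nir_tile, red_tile, population=2)
print(f"illustrative green score per capita: {score:.3f}")
```

Pixels with dense vegetation reflect strongly in the near-infrared and weakly in red, so their NDVI approaches 1, while bare or built-up surfaces sit near 0.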
The subjects of analysis were cities with the highest population densities (cities that contain at least 10% of the national population), and the images were obtained during the summer of each region for clarity. Images from the northern hemisphere were obtained between June and September of 2018, and those from the southern hemisphere were obtained between December of 2017 and February of 2018.

The areas of urban green space were then quantified and cross-referenced with data from the World Happiness Report and GDP by country reported by the United Nations in 2018. Using these data, the relationships between green space, the economy, and citizen happiness were analyzed.

The results showed that in all cities, citizen happiness was positively correlated with the area of urban green space regardless of the country’s economic state. However, out of the 60 countries studied, the happiness index of the bottom 30 by GDP showed a stronger correlation with economic growth. In countries whose gross national income (GDP per capita) was higher than 38,000 USD, the area of green space acted as a more important factor affecting happiness than economic growth. Data from Seoul was analyzed to represent South Korea, and showed an increased happiness index with increased green areas compared to the past.

< Figure 2. The relations of (a) log-GDP and happiness, and (b) urban green space (i.e., UGS) and happiness across 60 developed countries. The top 30 and the lowest 30 countries ranked by GDP are sized by population and colored red and black. The dotted lines are the linear fit for each GDP group. (c) Changes of coefficients between urban green space and happiness for different sets of GDP rank with increasing window size from top 10 to 60. (d) The rank correlations between UGS and happiness for the groups of countries in the increasing GDP rank order. >

The authors point out their work has several policy-level implications.
First, public green space should be made accessible to urban dwellers to enhance social support. If public safety in urban parks is not guaranteed, its positive role in social support and happiness may diminish. Also, the meaning of public safety may change; for example, ensuring biological safety will be a priority in keeping urban parks accessible during the COVID-19 pandemic.

Second, urban planning for public green space is needed for both developed and developing countries. As it is challenging or nearly impossible to secure land for green space after the area is developed, urban planning for parks and green space should be considered in developing economies where new cities and suburban areas are rapidly expanding.

Third, recent climate changes can present substantial difficulty in sustaining urban green space. Extreme events such as wildfires, floods, droughts, and cold waves could endanger urban forests while global warming could conversely accelerate tree growth in cities due to the urban heat island effect. Thus, more attention must be paid to predicting climate changes and discovering their impact on the maintenance of urban green space.

“There has recently been an increase in the number of studies using big data from satellite images to solve social conundrums,” said Professor Cha. “The tool developed for this investigation can also be used to quantify the area of aquatic environments like lakes and the seaside, and it will now be possible to analyze the relationship between citizen happiness and aquatic environments in future studies,” she added.

Professor Woo Sung Jung from POSTECH and Professor Donghee Wohn from the New Jersey Institute of Technology also joined this research. It was reported in the online issue of EPJ Data Science on May 30.

-Publication Oh-Hyun Kwon, Inho Hong, Jeasurk Yang, Donghee Y. Wohn, Woo-Sung Jung, and Meeyoung Cha, 2021. Urban green space and happiness in developed countries. EPJ Data Science. DOI: https://doi.org/10.1140/epjds/s13688-021-00278-7

-Profile Professor Meeyoung Cha, Data Science Lab (https://ds.ibs.re.kr/), School of Computing, KAIST

-

Ultrafast, on-Chip PCR Could Speed Up Diagnoses du..

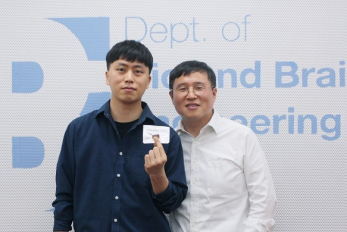

A rapid point-of-care diagnostic plasmofluidic chip can deliver results in only 8 minutes

< Professor Ki-Hun Jeong (right) and PhD candidate Byoung-Hoon Kang pose with their vacuum-charged plasmofluidic PCR chip. >

Reverse transcription-polymerase chain reaction (RT-PCR) has been the gold standard for diagnosis during the COVID-19 pandemic. However, the PCR portion of the test requires bulky, expensive machines and takes about an hour to complete, making it difficult to quickly diagnose someone at a testing site. Now, researchers at KAIST have developed a plasmofluidic chip that can perform PCR in only about 8 minutes, which could speed up diagnoses during current and future pandemics.

The rapid diagnosis of COVID-19 and other highly contagious viral diseases is important for timely medical care, quarantining, and contact tracing. Currently, RT-PCR uses enzymes to reverse transcribe tiny amounts of viral RNA to DNA, and then amplifies the DNA so that it can be detected by a fluorescent probe. It is the most sensitive and reliable diagnostic method. But because the PCR portion of the test requires 30-40 cycles of heating and cooling in special machines, it takes about an hour to perform, and samples must typically be sent away to a lab, meaning that a patient usually has to wait a day or two to receive their diagnosis.

Professor Ki-Hun Jeong at the Department of Bio and Brain Engineering and his colleagues wanted to develop a plasmofluidic PCR chip that could quickly heat and cool minuscule volumes of liquids, allowing accurate point-of-care diagnoses in a fraction of the time. The research was reported in ACS Nano on May 19.

The researchers devised a postage stamp-sized polydimethylsiloxane chip with a microchamber array for the PCR reactions. When a drop of a sample is added to the chip, a vacuum pulls the liquid into the microchambers, which are positioned above glass nanopillars with gold nanoislands.
Any microbubbles, which could interfere with the PCR reaction, diffuse out through an air-permeable wall. When a white LED is turned on beneath the chip, the gold nanoislands on the nanopillars quickly convert light to heat, and then rapidly cool when the light is switched off.

The researchers tested the device on a piece of DNA containing a SARS-CoV-2 gene, accomplishing 40 heating and cooling cycles and fluorescence detection in only 5 minutes, with an additional 3 minutes for sample loading. The amplification efficiency was 91%, whereas a comparable conventional PCR process has an efficiency of 98%. With the reverse transcriptase step added prior to sample loading, the entire testing time with the new method could take 10-13 minutes, as opposed to about an hour for typical RT-PCR testing. The new device could provide many opportunities for rapid point-of-care diagnostics during a pandemic, the researchers say.

< Vacuum-charged plasmofluidic PCR chip for real-time nanoplasmonic on-chip PCR (left) and ultrafast thermal cycling with amplification curve of plasmids expressing SARS-CoV-2 envelope protein (right). >

-Sources Ultrafast and Real-Time Nanoplasmonic On-Chip Polymerase Chain Reaction for Rapid and Quantitative Molecular Diagnostics, ACS Nano (https://doi.org/10.1021/acsnano.1c02154)

-Professor Ki-Hun Jeong, Biophotonics Laboratory (https://biophotonics.kaist.ac.kr/), Department of Bio and Brain Engineering, KAIST
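To put the two amplification efficiencies quoted in this article (91% for the chip, 98% for a conventional process) in perspective, the sketch below uses the common convention that an efficiency E multiplies the amount of target DNA by (1 + E) per cycle, so that n cycles give a fold amplification of (1 + E)^n. Whether the paper defines efficiency in exactly this way is an assumption here, and the function names are hypothetical.

```python
import math


def fold_amplification(efficiency: float, cycles: int) -> float:
    """Fold increase in target DNA after `cycles`, assuming each cycle
    multiplies the amount by (1 + efficiency)."""
    return (1.0 + efficiency) ** cycles


def cycles_to_match(efficiency: float, ref_efficiency: float,
                    ref_cycles: int) -> float:
    """Cycles needed at `efficiency` to reach the same fold amplification
    as `ref_cycles` cycles at `ref_efficiency`."""
    return ref_cycles * math.log(1.0 + ref_efficiency) / math.log(1.0 + efficiency)


chip = fold_amplification(0.91, 40)          # efficiency quoted for the chip
conventional = fold_amplification(0.98, 40)  # efficiency quoted for conventional PCR
extra = cycles_to_match(0.91, 0.98, 40) - 40

print(f"chip: {chip:.3e}-fold, conventional: {conventional:.3e}-fold")
print(f"extra cycles needed to match: {extra:.1f}")
```

Under this convention, the lower efficiency can be offset by a few additional cycles, which matters little when each cycle takes only seconds.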

-

Wearable Device to Monitor Sweat in Real Time

An on-skin platform for the wireless monitoring of flow rate, cumulative loss, and temperature of sweat in real time

< Sweat as a source of biomarkers. A wireless electronic patch (left) measures sweat’s volumetric flow and cumulative loss. It can be combined with the microfluidic system (right) that provides pH measurements and determines the concentration of chloride, creatinine, and glucose in a user’s sweat. These indicators could be used to diagnose cystic fibrosis, diabetes, kidney dysfunction, and metabolic alkalosis. >

An electronic patch can monitor your sweating and check your health status. What is more, the soft microfluidic device adheres to the surface of the skin and captures, stores, and performs biomarker analysis of sweat as it is released through the eccrine glands.

This wearable and wireless electronic device, developed by Professor Kyeongha Kwon and her collaborators, is a digital platform that could help track the so-called ‘filling process’ of sweat without having to visually examine the device. The platform was integrated with microfluidic systems to analyze the sweat’s components.

To monitor the sweat release rate in real time, the researchers created a ‘thermal flow sensing module.’ They designed a sophisticated microfluidic channel that lets the collected sweat flow through a narrow passage, and placed a heat source on the outer surface of the channel to induce a heat exchange between the sweat and the heated channel. As a result, the researchers developed a wireless electronic patch that measures the temperature difference between specific locations upstream and downstream of the heat source with an electronic circuit and converts it into a digital signal to measure the sweat release rate in real time. The patch accurately measured the perspiration rate in the range of 0-5 microliters/minute (μl/min), which was considered physiologically significant.
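Once a flow rate is available as a time series, the cumulative loss named in the headline follows by integrating that rate over time. The sketch below does this with the trapezoidal rule. It is only an illustration of the arithmetic: the function name and the sample readings are hypothetical, and the article does not describe how the patch performs this step internally.

```python
def cumulative_loss(times_min, flow_ul_per_min):
    """Running sweat loss in microliters from sampled flow rates.

    times_min: sample times in minutes (increasing).
    flow_ul_per_min: flow rate at each sample time, in microliters/minute.
    Uses the trapezoidal rule between consecutive samples.
    """
    if len(times_min) != len(flow_ul_per_min):
        raise ValueError("times and flow rates must have the same length")
    if not times_min:
        return []
    total = 0.0
    running = [0.0]
    for i in range(1, len(times_min)):
        dt = times_min[i] - times_min[i - 1]
        total += 0.5 * (flow_ul_per_min[i] + flow_ul_per_min[i - 1]) * dt
        running.append(total)
    return running


# Hypothetical readings within the 0-5 microliters/minute range.
times = [0, 1, 2, 3]
flows = [0.0, 2.0, 4.0, 4.0]
print(cumulative_loss(times, flows))  # [0.0, 1.0, 4.0, 8.0]
```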
The sensor can measure the flow of sweat directly and then use the information it collects to quantify total sweat loss. Moreover, the device features advanced microfluidic systems and colorimetric chemical reagents to gather pH measurements and determine the concentration of chloride, creatinine, and glucose in a user's sweat.

Professor Kwon said that these indicators could be used to diagnose various diseases related to sweating such as cystic fibrosis, diabetes, kidney dysfunction, and metabolic alkalosis. “As the sweat flowing in the microfluidic channel is completely separated from the electronic circuit, the new patch overcame the shortcomings of existing flow rate measuring devices, which were vulnerable to corrosion and aging,” she explained.

The patch can be easily attached to the skin with flexible circuit board printing technology and silicone sealing technology. It has an additional sensor that detects changes in skin temperature. Using a smartphone app, a user can check the data measured by the wearable patch in real time.

Professor Kwon added, “This patch can be widely used for personal hydration strategies, the detection of dehydration symptoms, and other health management purposes. It can also be used in a systematic drug delivery system, such as for measuring the blood flow rate in blood vessels near the skin’s surface or measuring a drug’s release rate in real time to calculate the exact dosage.”

-Publication Kyeongha Kwon, Jong Uk Kim, John A. Rogers, et al. “An on-skin platform for wireless monitoring of flow rate, cumulative loss and temperature of sweat in real time.” Nature Electronics (doi.org/10.1038/s41928-021-00556-2)

-Profile Professor Kyeongha Kwon, School of Electrical Engineering, KAIST

-

Distinguished Professor Sang Yup Lee Honored with ..

< Distinguished Professor Sang Yup Lee > Vice President for Research Sang Yup Lee received the 2021 Charles D. Scott Award from the Society for Industrial Microbiology and Biotechnology. Distinguished Professor Lee from the Department of Chemical and Biomolecular Engineering at KAIST is the first Asian awardee. The Charles D. Scott Award, initiated in 1995, recognizes individuals who have made significant contributions to enable and further the use of biotechnology to produce fuels and chemicals. The award is named in honor of Dr. Charles D. Scott, who founded the Symposium on Biomaterials, Fuels, and Chemicals and chaired the conference for its first ten years. Professor Lee has pioneered systems metabolic engineering and developed various micro-organisms capable of producing a wide range of fuels, chemicals, materials, and natural compounds, many of them for the first time. Some of the breakthroughs include the microbial production of gasoline, diacids, diamines, PLA and PLGA polymers, and several natural products. More recently, his team has developed a microbial strain capable of the mass production of succinic acid, a monomer for manufacturing polyester, with the highest production efficiency to date, as well as a Corynebacterium glutamicum strain capable of producing high-level glutaric acid. They also engineered for the first time a bacterium capable of producing carminic acid, a natural red colorant that is widely used for food and cosmetics. Professor Lee is one of the Highly Cited Researchers (HCR), ranked in the top 1% by citations in their field by Clarivate Analytics for four consecutive years from 2017. He is the first Korean fellow ever elected into the National Academy of Inventors in the US and one of 13 scholars elected as an International Member of both the National Academy of Sciences and the National Academy of Engineering in the USA. 
The awards ceremony will take place during the Symposium on Biomaterials, Fuels, and Chemicals held online from April 26.

-

T-GPS Processes a Graph with Trillion Edges on a S..

Trillion-scale graph processing simulation on a single computer presents a new concept of graph processing

A KAIST research team has developed a new technology that makes it possible to run a large-scale graph algorithm without storing the graph in main memory or on disks. Named T-GPS (Trillion-scale Graph Processing Simulation) by its developer, Professor Min-Soo Kim from the School of Computing at KAIST, it can process a graph with one trillion edges using a single computer.

Graphs are widely used to represent and analyze real-world objects in many domains such as social networks, business intelligence, biology, and neuroscience. As the number of graph applications increases rapidly, developing and testing new graph algorithms is becoming more important than ever before. Nowadays, many industrial applications require a graph algorithm to process a large-scale graph (e.g., one trillion edges). So, when developing and testing graph algorithms for such a large-scale graph, a synthetic graph is usually used instead of a real graph. This is because sharing and utilizing large-scale real graphs is very limited, as they are proprietary or practically impossible to collect.

Conventionally, developing and testing graph algorithms is done via the following two-step approach: generating and storing a graph, and then executing an algorithm on the graph using a graph processing engine. The first step generates a synthetic graph and stores it on disks. The synthetic graph is usually generated by either parameter-based generation methods or graph upscaling methods. The former extracts a small number of parameters that can capture some properties of a given real graph and generates the synthetic graph with the parameters. The latter upscales a given real graph to a larger one so as to preserve the properties of the original real graph as much as possible.
The second step loads the stored graph into the main memory of a graph processing engine such as Apache GraphX and executes a given graph algorithm on the engine. Since the graph is too large to fit in the main memory of a single computer, the graph engine typically runs on a cluster of several tens or hundreds of computers. Therefore, the cost of the conventional two-step approach is very high.

The research team solved the problem of the conventional two-step approach. T-GPS does not generate and store a large-scale synthetic graph. Instead, it just loads the initial small real graph into main memory. Then, T-GPS processes a graph algorithm on the small real graph as if the large-scale synthetic graph that should be generated from the real graph existed in main memory. After the algorithm is done, T-GPS returns exactly the same result as the conventional two-step approach.

The key idea of T-GPS is to generate, on the fly, only the part of the synthetic graph that the algorithm needs to access, and to modify the graph processing engine so that it treats the part generated on the fly as part of an actually generated synthetic graph.

The research team showed that T-GPS can process a graph of 1 trillion edges using a single computer, while the conventional two-step approach can only process a graph of 1 billion edges using a cluster of eleven computers of the same specification. Thus, T-GPS outperforms the conventional approach by 10,000 times in terms of computing resources. The team also showed that the speed of processing an algorithm in T-GPS is up to 43 times faster than the conventional approach. This is because T-GPS has no network communication overhead, while the conventional approach has a lot of communication overhead among computers.
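The general idea of generating only the accessed part of a graph on demand can be illustrated with a toy example. The sketch below is not T-GPS and does not implement its top-down graph upscaling: it simply defines a hypothetical `ImplicitGraph` whose neighbor lists are recomputed deterministically from a seed every time they are requested, and runs a depth-limited breadth-first search over it. No edge is ever stored, yet repeated queries see a consistent graph.

```python
import random
from collections import deque


class ImplicitGraph:
    """Toy graph whose edges are computed on demand, never stored.

    Hypothetical illustration only: neighbors come from a seeded
    pseudo-random generator, not from upscaling a real graph.
    """

    def __init__(self, num_vertices: int, out_degree: int, seed: int = 0):
        self.num_vertices = num_vertices
        self.out_degree = out_degree
        self.seed = seed

    def neighbors(self, v: int):
        # A per-vertex seed makes the result reproducible across calls.
        rng = random.Random(self.seed * self.num_vertices + v)
        return [rng.randrange(self.num_vertices) for _ in range(self.out_degree)]


def bfs_levels(graph: ImplicitGraph, source: int, max_depth: int):
    """Breadth-first search up to `max_depth`, touching only needed edges."""
    levels = {source: 0}
    queue = deque([source])
    while queue:
        v = queue.popleft()
        if levels[v] == max_depth:
            continue
        for w in graph.neighbors(v):
            if w not in levels:
                levels[w] = levels[v] + 1
                queue.append(w)
    return levels


# 10**12 vertices with 4 out-edges each: far too many edges to store.
graph = ImplicitGraph(num_vertices=10**12, out_degree=4, seed=42)
levels = bfs_levels(graph, source=0, max_depth=3)
print(f"visited {len(levels)} vertices without storing any edges")
```

Memory use here depends on how many vertices the algorithm visits, not on the size of the implicit graph, which is the property the article attributes to on-the-fly generation.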
Professor Kim believes that this work will have a large impact on the IT industry, where almost every area uses graph data, adding, “T-GPS can significantly increase both the scale and efficiency of developing a new graph algorithm.” This work was supported by the National Research Foundation (NRF) of Korea and the Institute of Information & communications Technology Planning & Evaluation (IITP). -Publication: Park, H., et al. (2021) “Trillion-scale Graph Processing Simulation based on Top-Down Graph Upscaling,” IEEE ICDE 2021, Chania, Greece, Apr. 19-22, 2021. Available online at https://conferences.computer.org/icdepub -Profile: Min-Soo Kim Associate Professor http://infolab.kaist.ac.kr School of Computing KAIST

-

Mobile Clinic Module Wins Red Dot and iF Design Aw..

< Mobile Clinic Module developed by the Korea Aid for Respiratory Epidemic initiative. > The Mobile Clinic Module (MCM), an inflatable negative pressure ward building system developed by the Korea Aid for Respiratory Epidemic (KARE) initiative at KAIST, gained international acclaim by winning the prestigious Red Dot Design Award and iF Design Award. The MCM was recognized as one of the Red Dot Product Designs of the Year. It also won four iF Design Awards in communication design, interior architecture, user interface, and user experience. Winning the two most influential design awards demonstrates how product design can make a valuable contribution to containing pandemics and reflects new consumer trends for dealing with pandemics. Designed to be patient friendly even in extreme medical situations such as pandemics or triage, the MCM is the result of collaboration among researchers from a variety of fields, including mechanical engineering, computing, and industrial and systems engineering, together with hospitals and engineering companies. The research team was led by Professor Tek-Jin Nam from the Department of Industrial Design. The MCM is expandable, moveable, and easy to store through a combination of negative pressure frames, air tents, and multi-functional panels. Positive air pressure devices supply fresh air from outside the tent. An air pump and controller maintain air beam pressure, while filtering exhausted air from inside. An internal air information monitoring system efficiently controls inside air pressure and purifies the air. The MCM requires only one-fourth of the volume of existing wards and weighs approximately 40% as much. The unit can be transported in a 40-foot container truck. MCMs are now located at the Korea Institute of Radiological & Medical Sciences and Jeju Vaccine Center and are expected to be used at many other facilities. 
KARE is developing antiviral solutions and devices such as protective gear, sterilizers, and test kits to promptly respond to the pandemic. More than 100 researchers at KAIST are collaborating with industry and clinical hospitals to develop antiviral technologies that will improve preventive measures, diagnoses, and treatments. Professor Nam said, “Our designers will continue to identify the most challenging issues, and try to resolve them by realizing user-friendly functions. We believe this will significantly contribute to relieving the drastic need for negative pressure beds and provide a place for monitoring patients with moderate symptoms. We look forward to the MCM upgrading epidemic management resources around the globe.”

-

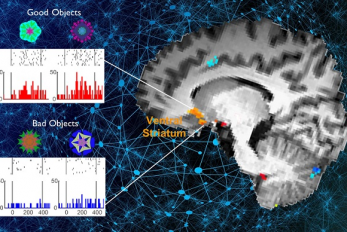

What Guides Habitual Seeking Behavior Explained

A new role of the ventral striatum explains habitual seeking behavior Researchers have been investigating how the brain controls habitual seeking behaviors such as addiction. A recent study by Professor Sue-Hyun Lee from the Department of Bio and Brain Engineering revealed that a long-term value memory maintained in the ventral striatum is a neural basis of our habitual seeking behavior. This research was conducted in collaboration with the research team led by Professor Hyoung F. Kim from Seoul National University. Given that addictive behavior is deemed a habitual one, this research provides new insights for developing therapeutic interventions for addiction. Habitual seeking behavior involves strong stimulus responses, mostly rapid and automatic ones. The ventral striatum has been thought to be important for value learning and addictive behaviors. However, it was unclear whether the ventral striatum processes and retains long-term memories that guide habitual seeking. Professor Lee’s team reported a new role of the human ventral striatum, in which long-term memories of high-valued objects are retained as a single representation and may be used to evaluate visual stimuli automatically to guide habitual behavior. < The ventral striatum shows increased responses to high-valued objects (good objects) after habitual seeking training. > “Our findings propose a role of the ventral striatum as a director that guides habitual behavior with the script of value information written in the past,” said Professor Lee. The research team investigated whether learned values were retained in the ventral striatum while the subjects passively viewed previously learned objects in the absence of any immediate outcome. Neural responses in the ventral striatum during the incidental perception of learned objects were examined using fMRI and single-unit recording. 
The study found significant value discrimination responses in the ventral striatum after learning and a retention period of several days. Moreover, the similarity of neural representations for good objects increased after learning, an outcome positively correlated with the habitual seeking response for good objects. “These findings suggest that the ventral striatum plays a role in automatic evaluations of objects based on the neural representation of positive values retained since learning, to guide habitual seeking behaviors,” explained Professor Lee. “We will fully investigate the function of different parts of the entire basal ganglia including the ventral striatum. We also expect that this understanding may lead to the development of better treatment for mental illnesses related to habitual behaviors or addiction problems.” This study, supported by the National Research Foundation of Korea, was reported in Nature Communications (https://doi.org/10.1038/s41467-021-22335-5). -Profile: Professor Sue-Hyun Lee Department of Bio and Brain Engineering Memory and Cognition Laboratory http://memory.kaist.ac.kr/lecture KAIST

-

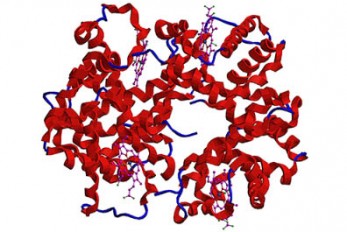

Microbial Production of a Natural Red Colorant Car..

Metabolic engineering and computer-simulated enzyme engineering led to the production of carminic acid, a natural red colorant, from bacteria for the first time < Figure: A schematic biosynthetic pathway for the production of carminic acid from glucose. Biochemical reaction analysis and computer simulation-assisted enzyme engineering were employed to identify and improve the enzymes (DnrF P217K and GtCGT V93Q/Y193F) responsible for the latter two reactions. > A research group at KAIST has engineered a bacterium capable of producing a natural red colorant, carminic acid, which is widely used in food and cosmetics. The research team reported the complete biosynthesis of carminic acid from glucose in engineered Escherichia coli. The strategies will be useful for the design and construction of biosynthetic pathways involving unknown enzymes and, consequently, for the production of diverse industrially important natural products for the food, pharmaceutical, and cosmetic industries. Carminic acid is a natural red colorant widely used in products such as strawberry milk and lipstick. However, carminic acid has been produced by farming cochineal, a scale insect that grows only in regions around Peru and the Canary Islands, followed by complicated multi-step purification processes. Moreover, carminic acid often contains protein contaminants that cause allergies, so many people are unwilling to consume products made with insect-derived colorants. On that account, manufacturers around the world are using alternative red colorants despite the fact that carminic acid is one of the most stable natural red colorants. These challenges inspired the metabolic engineering research group at KAIST to address this issue. Its members include postdoctoral researchers Dongsoo Yang and Woo Dae Jang, and Distinguished Professor Sang Yup Lee of the Department of Chemical and Biomolecular Engineering. 
This study, entitled “Production of carminic acid by metabolically engineered Escherichia coli,” was published online in the Journal of the American Chemical Society (JACS) on April 2. This research reports for the first time the development of a bacterial strain capable of producing carminic acid from glucose via metabolic engineering and computer simulation-assisted enzyme engineering. The research group optimized the type II polyketide synthase machinery to efficiently produce the precursor of carminic acid, flavokermesic acid. Since the enzymes responsible for the remaining two reactions had either not been discovered or were not functional, biochemical reaction analysis was performed to identify enzymes that can convert flavokermesic acid into carminic acid. Then, homology modeling and docking simulations were performed to enhance the activities of the two identified enzymes. The team confirmed that the final engineered strain could produce carminic acid directly from glucose. The C-glucosyltransferase developed in this study was found to be generally applicable to other natural products, as showcased by the successful production of an additional product, aloesin, which is found in aloe leaves. “The most important part of this research is that unknown enzymes for the production of target natural products were identified and improved by biochemical reaction analyses and computer simulation-assisted enzyme engineering,” says Dr. Dongsoo Yang. He explained that the development of a generally applicable C-glucosyltransferase is also useful since C-glucosylation is a relatively unexplored reaction in bacteria including Escherichia coli. Using the C-glucosyltransferase developed in this study, both carminic acid and aloesin were successfully produced from glucose. “A sustainable and insect-free method of producing carminic acid was achieved for the first time in this study. 
Unknown or inefficient enzymes have always been a major problem in natural product biosynthesis, and here we suggest one effective solution for solving this problem. As maintaining good health in the aging society is becoming increasingly important, we expect that the technology and strategies developed here will play pivotal roles in producing other valuable natural products of medical or nutritional importance,” said Distinguished Professor Sang Yup Lee. This work was supported by the Technology Development Program to Solve Climate Changes on Systems Metabolic Engineering for Biorefineries of the Ministry of Science and ICT (MSIT) through the National Research Foundation (NRF) of Korea and the KAIST Cross-Generation Collaborative Lab project; Sang Yup Lee and Dongsoo Yang were also supported by the Novo Nordisk Foundation in Denmark. Publication: Dongsoo Yang, Woo Dae Jang, and Sang Yup Lee. Production of carminic acid by metabolically engineered Escherichia coli. Journal of the American Chemical Society. https://doi.org/10.1021/jacs.0c12406 Profile: Sang Yup Lee, PhD Distinguished Professor leesy@kaist.ac.kr http://mbel.kaist.ac.kr Metabolic & Biomolecular Engineering National Research Laboratory Department of Chemical and Biomolecular Engineering KAIST