Kaist


Students News

-

Technology Detecting RNase Activity

< Ph.D. candidate Chang Yeol Lee > A KAIST research team led by Professor Hyun Gyu Park of the Department of Chemical and Biomolecular Engineering has developed a new technology to detect the activity of RNase H, an RNA-degrading enzyme. The team used a highly efficient signal amplification reaction termed catalytic hairpin assembly (CHA) to analyze RNase H activity effectively. Because RNase H is required for the proliferation of retroviruses such as HIV, the researchers say this finding could contribute to AIDS treatments in the future. The study, led by Ph.D. candidates Chang Yeol Lee and Hyowon Jang, was chosen as the cover of Nanoscale (Issue 42, 2017), published on 14 November. Existing techniques for detecting RNase H require an expensive fluorophore and quencher and are complex to implement. Furthermore, they offer no way to amplify the signal, which results in low overall detection efficiency. The team used CHA to overcome these limitations: because CHA amplifies the detection signal, it allows a more sensitive RNase H activity assay. The team designed the reaction system so that the CHA product contains G-quadruplex structures, which are well suited to generating fluorescence. By using fluorescent molecules that bind to G-quadruplexes and emit strong fluorescence, the team developed a high-performance RNase H detection method that overcomes the limitations of existing techniques. The technology can also be used to screen for inhibitors of RNase H activity, and the team expects this to contribute to AIDS treatment. AIDS is a disease caused by HIV, a retrovirus that relies on reverse transcription, the process in which RNA is converted to DNA. RNase H is essential for reverse transcription in HIV, so inhibiting RNase H could in turn block the synthesis of HIV DNA.
Professor Park said, “This technology can be applied to detecting various enzyme activities, not only RNase H activity.” He continued, “I hope this technology will be widely used in research on enzyme-related diseases.” This study was funded by the Global Frontier Project and the Mid-career Researcher Support Project of the Ministry of Science and ICT.
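Why catalytic amplification raises the signal can be shown with a toy model. This is not the team's kinetic model: the sketch assumes, hypothetically, that RNase H activity releases a DNA strand that acts as the CHA catalyst, that each catalyst strand is recycled, and that product formation follows pseudo-first-order kinetics capped by the hairpin supply. All parameter values (100 nM hairpins, a rate constant of 0.01 per nM per minute, 60 minutes) are made up for illustration.

```python
import math


def cha_product_nM(catalyst_nM, hairpin_nM, k_per_nM_min, minutes):
    """Toy model of hairpin-assembly product formed by a recycled catalyst.

    Assumption (not from the article): pseudo-first-order consumption of the
    hairpin supply, so P(t) = H0 * (1 - exp(-k * C * t)).
    """
    return hairpin_nM * (1.0 - math.exp(-k_per_nM_min * catalyst_nM * minutes))


def amplification(catalyst_nM, hairpin_nM=100.0, k_per_nM_min=0.01, minutes=60.0):
    """Product molecules formed per catalyst strand.

    A non-amplified assay yields at most one signal molecule per released
    strand, i.e. an amplification of 1.
    """
    product = cha_product_nM(catalyst_nM, hairpin_nM, k_per_nM_min, minutes)
    return product / catalyst_nM


if __name__ == "__main__":
    # Hypothetical inhibitor screen: halving RNase H activity halves the
    # released catalyst, which lowers the fluorescent product.
    for label, released in [("no inhibitor", 1.0), ("50% inhibition", 0.5)]:
        product = cha_product_nM(released, 100.0, 0.01, 60.0)
        print(f"{label}: {product:.1f} nM product, {amplification(released):.1f}x per strand")
```

With these invented numbers, one released strand yields about 45 product molecules instead of one, and halving the released catalyst drops the product from about 45.1 nM to about 25.9 nM, the kind of signal difference an inhibitor screen would read out.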

-

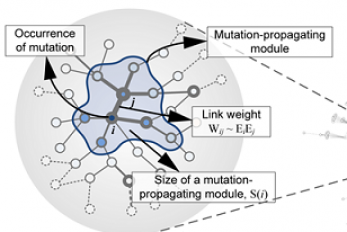

Mutant Gene Network in Colon Cancer Identified

The principles of the gene network underlying colon tumorigenesis have been identified by a KAIST research team. The principles could be used to find molecular targets for effective anti-cancer drugs in the future. The research has also attracted attention for its systems biology approach, an integrated research area combining IT and BT. The team was led by Professor Kwang-Hyun Cho of the Department of Bio and Brain Engineering, and the study, conducted by Dr. Dongkwan Shin and student researchers Jonghoon Lee and Jeong-Ryeol Gong, was published online in Nature Communications on November 2. Human cancer is caused by genetic mutations. The number of mutations differs by cancer type: only around 10 mutations are found in leukemia and childhood cancers, whereas adult solid cancers carry an average of 50, and cancers caused by external factors, such as lung cancer, can carry hundreds. Cancer researchers around the world are working to identify the genetic mutations most frequently found in patients, and in turn the important cancer-inducing genes (called ‘driver genes’), to develop targets for anti-cancer drugs. However, a mutation affects not only the function of the mutated gene itself but also other genes through their interactions. Current treatments that target a few cancer-inducing genes without further knowledge of gene networks are therefore limited: they are effective in only a few patients and often induce drug resistance. Professor Cho’s team used large-scale genomic data from cancer patients to construct a mathematical model of the cooperative effects of multiple genetic mutations in gene interaction networks. The model was built on The Cancer Genome Atlas (TCGA) presented at the International Cancer Genome Consortium.
The team quantified the effects of mutations in gene networks and used them to group colon cancer patients by clinical characteristics. Using large-scale computer simulation analysis, the team also identified the critical transition phenomenon that occurs in tumorigenesis, the first hidden gene network principle of this kind to be identified. A critical transition is a phenomenon in which the state of a system changes abruptly, as matter does in a phase transition. In the past, it was not possible to detect such a transition because it was difficult to track the sequence of gene mutations during tumorigenesis. Using a systems biology-based method, the research team found that colon cancer tumorigenesis shows a critical transition when the known driver gene mutations occur in sequence. The mathematical model may make it possible to develop new targeted anti-cancer drugs that most effectively inhibit the effects of the many gene mutations found in cancer patients. In particular, not only driver genes but also passenger genes affected by the mutations could be evaluated to find the most effective drug targets. Professor Cho said, “Little was known about the contribution of many gene mutations during tumorigenesis.” He continued, “In this research, a systems biology approach identified the principle of gene networks for the first time, suggesting the possibility of identifying anti-cancer drug targets from a new perspective.” This research was funded by the Ministry of Science and ICT and the National Research Foundation of Korea. < Figure 1. Formation of giant clusters via mutation propagation > < Figure 2. Critical transition phenomenon by cooperative effect of mutations in tumorigenesis >
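The ‘giant cluster’ and ‘critical transition’ ideas can be illustrated with a generic site-percolation toy on a random network. This is not the team's TCGA-based model: it assumes a hypothetical Erdos-Renyi gene network with a mean of four interactions per gene and treats each gene as independently ‘affected’ with probability q. The largest connected cluster of affected genes stays tiny at low q and then grows abruptly once q passes roughly 1/(mean degree), here about 0.25.

```python
import random
from collections import deque


def random_network(n, mean_degree, rng):
    """Erdos-Renyi random graph as an adjacency list (a hypothetical gene network)."""
    p = mean_degree / (n - 1)
    adj = [[] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if rng.random() < p:
                adj[i].append(j)
                adj[j].append(i)
    return adj


def largest_affected_cluster(adj, affected):
    """Fraction of all genes in the largest connected cluster of affected genes."""
    seen = set()
    best = 0
    for start in affected:
        if start in seen:
            continue
        seen.add(start)
        queue = deque([start])
        size = 0
        while queue:  # breadth-first search restricted to affected genes
            node = queue.popleft()
            size += 1
            for neighbour in adj[node]:
                if neighbour in affected and neighbour not in seen:
                    seen.add(neighbour)
                    queue.append(neighbour)
        best = max(best, size)
    return best / len(adj)


def sweep(n=1500, mean_degree=4.0, fractions=(0.05, 0.15, 0.25, 0.35, 0.5, 0.8), seed=1):
    """Largest-cluster fraction as the affected fraction q increases."""
    rng = random.Random(seed)
    adj = random_network(n, mean_degree, rng)
    results = []
    for q in fractions:
        affected = {v for v in range(n) if rng.random() < q}
        results.append((q, largest_affected_cluster(adj, affected)))
    return results


if __name__ == "__main__":
    for q, frac in sweep():
        print(f"q = {q:.2f}: largest cluster holds {frac:.1%} of genes")
```

The exact percentages depend on the random seed and network size, but the qualitative picture, small isolated clusters below the threshold and one network-spanning cluster above it, is robust.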

-

In Jin Cho Earned the Best Poster Prize at ME Summ..

In Jin Cho, a Ph.D. student in the Department of Chemical and Biomolecular Engineering at KAIST, received the best poster prize at the International Metabolic Engineering Summit 2017, held on October 24 in Beijing, China. The International Metabolic Engineering Summit is a global conference where scientists and corporate researchers in metabolic engineering present their latest research outcomes and build networks. At this year’s summit, about 500 researchers from around the world took part in active academic exchanges, including keynote speeches and poster presentations. From the posters presented by graduate students, postdoctoral fellows, and researchers, the summit selects one winner of the KeAi-synthetic and Systems Biotechnology Poster Award, two winners of the Microbial Cell Factories Poster Award, and three winners of the Biotechnology Journal Poster Award. Cho received the KeAi-synthetic and Systems Biotechnology Poster Award. Her winning poster described the biotransformation of p-xylene to terephthalic acid using engineered Escherichia coli. Terephthalic acid is generally produced by p-xylene oxidation; however, this process requires high temperature and pressure as well as a toxic catalyst. Cho and Ziwei Luo, a Ph.D. student at KAIST, co-conducted the research and developed a successful biological conversion process. Unlike the existing chemical process, it does not require high temperature and pressure, and it is environmentally friendly, with a relatively high conversion rate of approximately 97%. Cho’s advisor, Distinguished Professor Sang Yup Lee, said, “Further research on glucose-derived terephthalic acid will enable us to produce eco-friendly, biomass-based terephthalic acid through engineered Escherichia coli.”
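To put the roughly 97% figure in mass terms, the sketch below assumes (the article does not define the term) that ‘conversion rate’ means molar yield of terephthalic acid per mole of p-xylene, with the 1:1 stoichiometry of C8H10 to C8H6O4. It converts that yield into grams of product per gram of feed using standard atomic masses.

```python
# Standard atomic masses (g/mol).
ATOMIC_MASS = {"C": 12.011, "H": 1.008, "O": 15.999}

P_XYLENE = {"C": 8, "H": 10}                  # C8H10
TEREPHTHALIC_ACID = {"C": 8, "H": 6, "O": 4}  # C8H6O4


def molar_mass(formula):
    """Molar mass (g/mol) from an element -> atom count mapping."""
    return sum(ATOMIC_MASS[element] * count for element, count in formula.items())


def product_mass_g(feed_g, molar_yield):
    """Grams of terephthalic acid from feed_g grams of p-xylene at a given molar yield.

    Assumes one mole of p-xylene gives at most one mole of terephthalic acid.
    """
    moles_feed = feed_g / molar_mass(P_XYLENE)
    return moles_feed * molar_yield * molar_mass(TEREPHTHALIC_ACID)


if __name__ == "__main__":
    print(f"{product_mass_g(1.0, 0.97):.3f} g terephthalic acid per g p-xylene")
```

Because both methyl groups of p-xylene are oxidized to carboxylic acid groups, the product is heavier than the feed: under this assumption, 1 g of p-xylene at 97% molar yield gives about 1.518 g of terephthalic acid.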

-

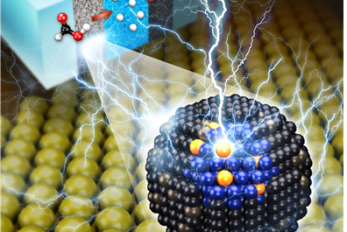

Platinum Single Atom Catalysts for ‘Direct Formic ..

〈 Professor Hyunjoo Lee (left) and Ph.D. candidate Jiwhan Kim 〉 A research team co-led by Professor Hyunjoo Lee of the Department of Chemical and Biomolecular Engineering at KAIST and Professor Jeong Woo Han of the University of Seoul has synthesized highly stable, high-Pt-content single atom catalysts for direct formic acid fuel cells. With these catalysts, the amount of platinum can be reduced to 1/10 of that needed with conventional platinum nanoparticle catalysts. Platinum (Pt) catalysts have been used in various catalytic reactions because of their high activity and stability. However, because Pt is rare and expensive, it is important to reduce the amount used. In a single atom catalyst, Pt is dispersed as isolated atoms rather than as particles, so every Pt atom can participate in the catalytic reaction and the cost of the Pt catalyst can be minimized. Additionally, single atom catalysts have no ensemble sites consisting of two or more adjacent atoms, so their reaction selectivity differs from that of nanoparticle catalysts. Despite these advantages, single atoms aggregate easily and are less stable because of their low coordination number and high surface free energy. It is difficult to develop a single atom catalyst with both high content and high stability, which has limited their application in practical devices. Direct formic acid fuel cells could power next-generation portable devices, because liquid formic acid fuel is safer and easier to store and transport than high-pressure hydrogen gas. To improve the stability of Pt single atom catalysts, Professor Lee’s group developed a Pt-Sn single atom alloy structure on an antimony-doped tin oxide (ATO) support. Computational calculations support this structure, showing that Pt single atoms substitute for antimony sites in the antimony-tin alloy structure and are thermodynamically stable.
In formic acid oxidation, the catalyst showed up to 50 times higher activity per unit weight of Pt than the commercial Pt/C catalyst, and its stability was also remarkably high. Professor Lee’s group also used the single atom catalyst in a ‘direct formic acid fuel cell’ consisting of membranes and electrodes, the first attempt to apply a single atom catalyst to a full cell. In this full cell, an output similar to that of the commercial Pt/C catalyst was obtained using 1/10 of the platinum. Ph.D. candidate Jiwhan Kim of KAIST was the first author of the research, which was published online on September 11 in Advanced Energy Materials. This research was supported by the Samsung Electronics Future Technology Development Center. < Figure 1. Concept photograph for Pt single atom catalysts. > < Figure 2. Pt single atom catalysts by HAADF-STEM analysis (bright white circles) >
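The claim that every Pt atom in a single atom catalyst can take part in the reaction can be quantified by dispersion, the fraction of metal atoms exposed at the surface. The sketch below uses the standard closed-shell atom counts for an idealized cuboctahedral cluster (an assumed particle shape, not one reported in the study) to show how quickly dispersion falls as a particle grows, compared with a dispersion of 1 for an isolated atom.

```python
def cuboctahedral_cluster(shells):
    """Total and surface atom counts for a closed-shell cuboctahedral cluster.

    shells = 1 is a single atom; each extra shell adds a complete outer layer
    (1, 13, 55, 147, 309, ... atoms in total).
    """
    if shells < 1:
        raise ValueError("shells must be >= 1")
    if shells == 1:
        return 1, 1  # an isolated atom is entirely exposed
    total = (10 * shells**3 - 15 * shells**2 + 11 * shells - 3) // 3
    surface = 10 * shells**2 - 20 * shells + 12
    return total, surface


def dispersion(shells):
    """Fraction of atoms on the surface, i.e. available for reaction."""
    total, surface = cuboctahedral_cluster(shells)
    return surface / total


if __name__ == "__main__":
    for n in (1, 2, 3, 5, 8):
        total, surface = cuboctahedral_cluster(n)
        print(f"{total:5d} atoms, {surface:4d} on surface, dispersion {dispersion(n):.1%}")
```

Already at 309 atoms only about 52% of the atoms are on the surface, and at 1,415 atoms about 35%, so the interior Pt in a nanoparticle is paid for but never used. These idealized counts ignore real particle shapes and support effects.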

-

Ultra-Fast and Ultra-Sensitive Hydrogen Sensor

A KAIST team has made an ultra-fast hydrogen sensor that can detect hydrogen gas at concentrations below 1% in less than seven seconds. The sensor can also detect hydrogen at levels of hundreds of parts per million within 60 seconds at room temperature. A research group led by Professor Il-Doo Kim of the Department of Materials Science and Engineering at KAIST, in collaboration with Professor Reginald M. Penner of the University of California, Irvine, has developed an ultra-fast hydrogen gas detection system based on a palladium (Pd) nanowire array coated with a metal-organic framework (MOF). Hydrogen has been regarded as an eco-friendly, next-generation energy source. However, it is a flammable gas that can explode with even a small spark. Its lower explosion limit in air is 4 vol%, so for safety, sensors must be able to detect the colorless and odorless hydrogen molecule quickly. The importance of such rapid detection has been emphasized in recent guidelines issued by the U.S. Department of Energy, according to which hydrogen sensors should detect 1 vol% of hydrogen in air in less than 60 seconds to ensure adequate response and recovery times. To overcome the slow response and recovery of Pd-based hydrogen sensors, the research team introduced a MOF layer on top of a Pd nanowire array. Lithographically patterned Pd nanowires were simply overcoated with a layer of ZIF-8, a Zn-based zeolitic imidazolate framework composed of Zn ions and organic ligands. The ZIF-8 film is easily coated onto the Pd nanowires by simple dipping (for 2 to 6 hours) in a methanol solution containing Zn(NO3)2·6H2O and 2-methylimidazole. < This cover image depicts lithographically patterned Pd nanowires overcoated with a Zn-based zeolitic imidazolate framework (ZIF-8) layer. >
Because as-synthesized ZIF-8 is a highly porous material with 0.34 nm pore apertures and 1.16 nm cavities, hydrogen gas, with a kinetic diameter of 0.289 nm, can easily penetrate the ZIF-8 membrane, while larger molecules (> 0.34 nm) are effectively screened out by the MOF filter. The ZIF-8 filter on the Pd nanowires thus lets hydrogen molecules penetrate preferentially, giving the Pd-based H2 sensors 20-fold faster response and recovery than pristine Pd nanowires at room temperature. Professor Kim expects that the ultra-fast hydrogen sensor can help prevent explosions caused by hydrogen gas leaks. He also expects that other harmful gases in the air could be accurately detected through effective nano-filtration using a variety of MOF layers. This study was carried out by Ph.D. candidate Won-Tae Koo (first author), Professor Kim (co-corresponding author), and Professor Penner (co-corresponding author). It was published in the online edition of ACS Nano and featured as the cover image of the September issue. < Figure 1. Representative image for this paper published in ACS Nano, August 18. > < Figure 2. Images of Pd nanowire array-based hydrogen sensors, scanning electron microscopy image of a Pd nanowire covered by a metal-organic framework layer, and the hydrogen sensing properties of the sensors. > < Figure 3. Schematic illustration of a metal-organic framework (MOF). The MOF, consisting of metal ions and organic ligands, is a highly porous material with an ultrahigh surface area. The various structures of MOFs can be synthesized depending on the kinds of metal ions and organic ligands. > < (From left) Professor Kim, Ph.D. candidate Koo, and Professor Penner >
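The size-exclusion argument can be written as a simple rule: a gas passes the ZIF-8 layer if its kinetic diameter is smaller than the 0.34 nm aperture. The sketch below encodes that rule together with the U.S. Department of Energy target mentioned in the article (1 vol% detected in under 60 seconds). The H2 diameter and the 0.34 nm cutoff come from the article; the other kinetic diameters are commonly tabulated literature values added for illustration. A hard cutoff is an idealization, since the ZIF-8 framework is somewhat flexible in practice.

```python
# Kinetic diameters (nm). H2 is from the article; the others are commonly
# tabulated literature values, included only to illustrate the cutoff.
KINETIC_DIAMETER_NM = {"H2": 0.289, "O2": 0.346, "N2": 0.364, "CH4": 0.38}
ZIF8_APERTURE_NM = 0.34


def passes_zif8(gas, aperture_nm=ZIF8_APERTURE_NM):
    """Idealized size exclusion: a molecule smaller than the aperture passes."""
    return KINETIC_DIAMETER_NM[gas] < aperture_nm


def vol_percent_to_ppm(vol_percent):
    """1 vol% equals 10,000 parts per million by volume."""
    return vol_percent * 10_000


def meets_doe_target(response_s_at_1_percent, limit_s=60.0):
    """True if a sensor detects 1 vol% H2 faster than the guideline time."""
    return response_s_at_1_percent < limit_s


if __name__ == "__main__":
    print({gas: passes_zif8(gas) for gas in KINETIC_DIAMETER_NM})
    print(vol_percent_to_ppm(4), "ppm at the lower explosion limit")
    print(meets_doe_target(7.0))
```

The unit conversion also shows the margin involved: the 4 vol% lower explosion limit is 40,000 ppm, while the sensor responds to hydrogen at levels of hundreds of ppm.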

-

Sangeun Oh Recognized as a 2017 Google Fellow

Sangeun Oh, a Ph.D. candidate in the School of Computing, was selected as a 2017 Google PhD Fellow, one of 47 awardees of the Google PhD Fellowship worldwide. The Google PhD Fellowship recognizes students who show outstanding performance in computer science and related research. Since its establishment in 2009, the program has provided various benefits, including scholarships worth 10,000 USD and one-on-one research discussions with mentors from Google. His research on a mobile system that enables interactions among various kinds of smart devices was recognized in the field of mobile computing. He developed a mobile platform that allows smart devices to share diverse functions, including logins, payments, and sensors. This technology offers many user experiences that existing mobile platforms could not, and through cross-device functionality sharing, users can use multiple smart devices more conveniently. The research was presented at the Annual International Conference on Mobile Systems, Applications, and Services (MobiSys) of the Association for Computing Machinery in July 2017. Oh said, “I would like to express my gratitude to my advisor, the professors in the School of Computing, and my lab colleagues. I will devote myself to carrying out more research in order to contribute to society.” His advisor, Insik Shin, a professor in the School of Computing, said, “Being recognized as a Google PhD Fellow is an honor for both the student and KAIST. I strongly anticipate and believe that Oh will take the next step by carrying out high-quality research.”

-

Semiconductor Patterning of Seven Nanometers Techn..

A research team led by Professor Sang Ouk Kim of the Department of Materials Science and Engineering at KAIST has developed a semiconductor manufacturing technology that uses a camera flash. With a single flash, the technology can form ultra-fine, seven-nanometer semiconductor patterns over a large area, which could facilitate the manufacture of highly efficient, highly integrated semiconductor devices in the future. Technologies for artificial intelligence (AI), the Internet of Things (IoT), and big data, the major keys to the Fourth Industrial Revolution, require high-capacity, high-performance semiconductor devices, and producing such next-generation, highly integrated devices requires new lithography technology. Although the industry has been using conventional photolithography for small patterns, this technique is limited in forming patterns below 10 nm. Molecular assembly patterning using polymers has been in the spotlight as a next-generation technology to replace photolithography because it is inexpensive and can easily form sub-10 nm patterns. However, it generally requires lengthy high-temperature heat treatment or toxic solvent vapor treatment, which makes mass production difficult and has limited its commercialization. To solve these issues, the research team introduced a camera flash, which instantly emits strong light. A flash can generate a temperature of several hundred degrees Celsius within several tens of milliseconds, making it possible to achieve seven-nanometer semiconductor patterning within 15 milliseconds (1 millisecond = 1/1,000 second). The team demonstrated that applying this technology to polymer molecular assembly allows a single flash of light to form molecular assembly patterns.
The team also confirmed the technology’s compatibility with flexible polymer substrates, which cannot be processed at high temperatures, so it can be applied to the fabrication of next-generation flexible semiconductors. The researchers said that introducing this highly efficient camera flash photothermal process into molecular assembly technology could accelerate the realization of molecular assembly semiconductors. Professor Kim, who led the research, said, “Despite its potential, molecular assembly semiconductor technology has faced a big challenge in improving process efficiency.” He added, “This technology will be a breakthrough for the practical use of molecular assembly-based semiconductors.” The paper was published in the international journal Advanced Materials on August 21, with researcher Hyeong Min Jin and Ph.D. candidate Dae Yong Park as first authors. The research, sponsored by the Ministry of Science and ICT, was co-led by Professor Keon Jae Lee of the Department of Materials Science and Engineering at KAIST and Professor Kwang Ho Kim of the School of Materials Science and Engineering at Pusan National University. < 1. Formation of semiconductor patterns using a camera flash > < Schematic diagram of molecular assembly pattern using a camera flash > < Self-assembled patterns >

-

A Novel and Practical Fab-route for Superomniphobi..

(clockwise from left: Jaeho Choi, Hee Tak Kim, Shin-Hyun Kim) A joint research team led by Professors Hee Tak Kim and Shin-Hyun Kim of the Department of Chemical and Biomolecular Engineering at KAIST has developed a fabrication technology that can inexpensively produce surfaces capable of repelling liquids, including water and oil. The team used the photofluidization of azobenzene-containing polymers to generate a superomniphobic surface that can be applied to stain-free fabrics, non-biofouling medical tubing, and corrosion-free surfaces. Mushroom-shaped surface textures, also called doubly re-entrant structures, are known to be the most effective surface structures for resisting liquid invasion, and they therefore exhibit superior superomniphobicity. However, existing procedures for fabricating them are highly delicate, time-consuming, and costly. Moreover, the fabrication is restricted to inflexible and expensive silicon wafers, which limits the practical use of such surfaces. To overcome these limitations, the research team took a different approach to fabricating the re-entrant structures, called localized photofluidization, which exploits a peculiar optical phenomenon of azobenzene-containing polymers (referred to as azopolymers): under light irradiation, an azopolymer becomes fluidized, and the fluidization takes place locally within a thin surface layer. Using this approach, the team induced localized photofluidization in the top surface layer of cylindrical azopolymer posts; as the fluidized thin top layer flowed down, the cylindrical posts were reconfigured into a doubly re-entrant geometry. The structure developed by the team exhibits superior superomniphobicity even against liquids that would immediately infiltrate an ordinary surface.
Moreover, the superomniphobic property can be maintained on a curved target surface because the surface is made of a polymer. Furthermore, the fabrication procedure is highly reproducible and scalable, providing a practical route to creating robust omniphobic surfaces. Professor Hee Tak Kim said, “Not only does the novel photofluidization technology in this study produce superior superomniphobic surfaces, but it also offers many practical advantages in terms of fabrication procedures and material flexibility; therefore, it could greatly contribute to real uses in diverse applications.” Professor Shin-Hyun Kim added, “The doubly re-entrant geometry designed in this study was inspired by the skin structure of springtails, soil-dwelling insects that breathe through their skin. As I carried out this research, I once again realized that humans can learn from nature to create new engineering designs.” The paper (with Jaeho Choi as first author) was published in ACS Nano, an international journal for nanotechnology, in August. < Schematic diagram of mushroom-shaped structure fabrication > < SEM image of mushroom-shaped structure > < Image of superomniphobic property of different types of liquid >
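Why texture helps can be sketched with the Cassie-Baxter relation, a standard model for a drop resting on a composite of solid and trapped air: cos θ* = f_s (cos θ + 1) - 1, where θ is the intrinsic contact angle on the flat material, θ* is the apparent angle, and f_s is the fraction of the drop's footprint that touches solid. The relation holds only while the liquid stays suspended on top of the texture; keeping that suspended state stable for low-surface-tension liquids (θ below 90°) is the job of the doubly re-entrant geometry the article describes, which the relation itself does not model. The values θ = 70° and f_s = 0.1 below are hypothetical.

```python
import math


def cassie_baxter_angle(intrinsic_deg, solid_fraction):
    """Apparent contact angle (degrees) for a drop suspended on a textured surface.

    Cassie-Baxter: cos(theta*) = f_s * (cos(theta) + 1) - 1.
    Valid only if the liquid does not penetrate the texture.
    """
    if not 0.0 < solid_fraction <= 1.0:
        raise ValueError("solid_fraction must be in (0, 1]")
    cos_apparent = solid_fraction * (math.cos(math.radians(intrinsic_deg)) + 1.0) - 1.0
    return math.degrees(math.acos(cos_apparent))


if __name__ == "__main__":
    # Hypothetical oil-like liquid that partially wets the flat polymer (70 degrees).
    for f_s in (1.0, 0.3, 0.1):
        print(f"f_s = {f_s}: apparent angle = {cassie_baxter_angle(70.0, f_s):.1f} degrees")
```

With f_s = 0.1, the hypothetical liquid's apparent angle rises from 70° to about 150°, the usual threshold for super-repellency, provided the re-entrant texture prevents it from sinking in.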

-

Students from Science Academies Shed a Light on KA..

Recent KAIST statistics show that graduates of science academies distinguish themselves not only through their academic performance at KAIST but also in various professional careers after graduation. Every year, approximately 20% of KAIST’s newly enrolled students come from science academies. In the class of 2017, the 170 students from science academies accounted for 22% of newly enrolled students. Moreover, they form a top-tier student group on campus. As shown in the table below, the proportion of these students who graduate early, either to enter graduate programs or to take jobs, indicates their excellent performance at KAIST. There are eight science academies in Korea, including Korea Science Academy of KAIST in Busan, Seoul Science High School, Gyeonggi Science High School, Gwangju Science High School, Daejeon Science High School, Sejong Academy of Science and Arts, and Incheon Arts and Sciences Academy. KAIST recently analyzed the 532 university graduates of the class of 2012 and found that 23 of the 63 graduates from science academies finished their degrees early, an early graduation rate of 36.5%. This rate was significantly higher than that of students from other high schools. Among the notable graduates is a student who made headlines by donating 30 million KRW to KAIST, the largest donation on record from an enrolled student. His story goes back to when Android smartphones were about to be distributed. Seung-Gyu Oh, then a student in the School of Electrical Engineering, felt that existing subway apps were inconvenient, so in 2015 he created his own subway app that guided users to the nearest subway lines. His app hit the market and ranked second in the subway app category. It had approximately five million users, which generated advertising revenue. After the app’s successful launch, Oh accepted a takeover offer from Daum Kakao.
He then donated 30 million KRW to his alma mater. “Since high school, I’ve always thought that I have received many benefits from my country, and I have felt a heavy responsibility for it,” said Oh, an alumnus of Korea Science Academy of KAIST and of KAIST. “I decided to make a donation to my alma mater, KAIST, because I wanted to give back what I had received from my country.” Oh now works for the web firm Daum Kakao. On May 24, 2017, the 41st International Collegiate Programming Contest, hosted by the Association for Computing Machinery (ACM) and sponsored by IBM, was held in Rapid City, South Dakota, in the US. This prestigious contest has been held annually since 1977. College students from around the world participate: in 2017, a total of 50,000 students from 2,900 universities in 104 countries took part in regional competitions, and approximately 400 students made it to the final round, entering into a fierce competition. KAIST students also participated. The team consisted of Ji-Hoon Ko, Jong-Won Lee, and Han-Pil Kang from the School of Computing, all alumni of Gyeonggi Science High School. They received the ‘First Problem Solver’ award and a bronze medal, which came with a 3,000 USD cash prize. Sung-Jin Oh, also a graduate of Korea Science Academy of KAIST, is a research professor at the Korea Institute for Advanced Study (KIAS). He is the youngest recipient of the ‘Young Scientist Award’, which he received at the age of 27 for mathematically proving a hypothesis from Einstein’s theory of general relativity. After graduating from KAIST, Oh earned his master’s and doctoral degrees at Princeton University, completed a postdoctoral fellowship at UC Berkeley, and is now immersed in research at KIAS.
Heui-Kwang Noh from the Department of Chemistry and Kang-Min Ahn from the School of Computing, who were selected for the presidential scholarship for science in 2014, both graduated from Gyeonggi Science High School. Noh was recognized for his outstanding academic capacity and was also chosen for the ‘GE Foundation Scholar-Leaders Program’ in 2015. Established in 1992 by the GE Foundation, the program aims to foster talented post-secondary students who have both creativity and leadership. It selects five outstanding students and provides 3 million KRW per year for a maximum of three years. Its grantees have become influential people in various fields, including professors, executives, staff members of national and international firms, and researchers, and they are making a huge contribution to the development of engineering and science. Noh has remained active, completing an internship at ‘Harvard-MIT Biomedical Optics’ and publishing a paper (as third author) in ACS Omega, a journal of the American Chemical Society (ACS). Ahn, a member of the Young Engineers Honor Society (YEHS) of the National Academy of Engineering of Korea, has long been interested in startup businesses. In 2015, he founded DataStorm, a firm specializing in data solutions, which merged with the cloud back-office firm Jobis & Villains in 2016. Ahn is continuing his business activities; this year he founded cocKorea, which he is running successfully. “KAIST students who graduated from science academies form a top-tier group on campus and produce excellent performance,” said Hayong Shin, Associate Vice President for Admissions. “KAIST is making every effort to assist these students so that they can perform to the best of their ability.” (Clockwise from top left: Seung-Gyu Oh, Sung-Jin Oh, Heui-Kwang Noh and Kang-Min Ahn)

-

KAIST Researchers Receive Three Awards at the 13th..

< From left: Seon Young Park, Dr. So Young Choi, and Yoojin Choi > Researchers in the laboratory of KAIST Distinguished Professor Sang Yup Lee of the Department of Chemical and Biomolecular Engineering swept the awards at the 13th Asian Congress on Biotechnology, held in Thailand last month. The conference presented a total of eight prizes for best research and best poster presentation, and it is exceptional for members of a single research team to receive almost half of the awards at an international conference. Dr. So Young Choi received the Best Research Award, while Ph.D. candidates Yoojin Choi and Seon Young Park each received a Best Poster Presentation Award at the conference, held in Khon Kaen, Thailand, from July 23 to 27. The Asian Congress on Biotechnology is an international conference at which scientists and industry experts from Asia and around the world gather to present recent research findings in biotechnology. At this year’s conference, around 400 biotechnology researchers from 25 countries, including Korea, gathered to present and discuss research findings under the theme “Bioinnovation and Bioeconomy.” Distinguished Professor Sang Yup Lee gave the opening plenary lecture on ‘Systems Strategies in Biotechnology.’ Professor Lee said, “I have attended international conferences with students for the last 20 years, but this is the first time my team has received three awards at an international conference that presents only eight awards in total, three for Best Research and five for Best Presentation.” Dr. Choi received the Best Research Award for her results on the biological synthesis of poly(lactate-co-glycolate) (PLGA) using microorganisms. PLGA is a random copolymer of DL-lactic and glycolic acids and a biopolymer widely used in biomedical applications.
PLGA is biodegradable, biocompatible, and nontoxic, and has therefore been approved by the US Food and Drug Administration (FDA) for use in implants, drug delivery, and sutures. Dr. Choi’s research was deemed innovative for synthesizing PLGA from glucose and xylose in cells through metabolic engineering of E. coli. Dr. Choi received her Ph.D. under the supervision of Distinguished Professor Lee this February and is currently conducting postdoctoral research. Ph.D. candidate Yoojin Choi received a Best Poster Presentation Award for her research on using recombinant E. coli for the biological synthesis of various nanoparticles. For the first time, she used recombinant E. coli expressing proteins and peptides that bind heavy metals to biologically synthesize diverse metal nanoparticles, including single-element nanoparticles such as gold and silver, quantum dots, and magnetic nanoparticles. The synthesized nanoparticles can be used in bio-imaging, diagnosis, environmental applications, and energy. Ph.D. candidate Seon Young Park, who also received a Best Poster Presentation Award, used metabolic engineering to synthesize astaxanthin, a strong natural antioxidant, in E. coli and to increase its production. Astaxanthin is a carotenoid pigment found in salmon and shrimp and is widely used in health products and cosmetics.

-

Multi-Device Mobile Platform for App Functionality..

Case 1. Mr. Kim, an office worker, logged on to his SNS account using a tablet PC at the airport while traveling overseas. However, a malicious virus had been installed on the tablet PC, and someone else deleted some of the photos posted on his SNS account. Case 2. Mr. and Mrs. Brown are busy contacting credit card and game companies because their son, who likes games, purchased a million dollars’ worth of game items using his smartphone. Case 3. Mr. Park, who enjoys games, bought a sensor-based racing game on his tablet PC. However, he could not enjoy it, because tilting the tablet to control the game was uncomfortable. These cases are some of the many problems that can arise in modern society, where diverse smart devices, including smartphones, are everywhere. Recently, new technology has been developed to solve these problems easily. Professor Insik Shin of the School of Computing has developed ‘Mobile Plus,’ a mobile platform that can share the functionalities of applications between smart devices. This novel technology allows applications to share their functionalities easily without any modifications. Smartphone users often use Facebook to log in to another SNS account such as Instagram, or use a gallery app to post photos on their SNS. These examples are possible because the applications share their login and photo management functionalities. Functionality sharing lets users use smartphones in various convenient ways and allows app developers to create applications easily. However, current mobile platforms such as Android and iOS support functionality sharing only within a single mobile device. Sharing functionalities across devices is burdensome for both developers and users, because developers would need to create more complex applications and users would need to install the applications on each device.
To address this problem, Professor Shin’s research team developed a platform technology that supports functionality sharing between devices. The main concept is to use virtualization to give the illusion that applications running on separate devices are running on a single device. The team achieved this virtualization by extending an RPC (Remote Procedure Call) scheme to multi-device environments. This virtualization technology enables existing applications of any type to share their functionalities without modification, so users can use them without additional purchases or updates. Mobile Plus supports hardware functionalities such as cameras, microphones, and GPS as well as application functionalities such as logins, payments, and photo sharing. Its greatest advantage is its wide range of possible applications. Professor Shin said, "Mobile Plus is expected to have great synergy with smart home and smart car technologies. It can provide novel user experiences (UXs) so that users can easily utilize various applications of smart home/vehicle infotainment systems by using a smartphone as their hub." This research was presented at ACM MobiSys, an international conference on mobile computing held in the United States on June 21. < Figure 1. Users can securely log on to SNS accounts by using their personal devices > < Figure 2. Parents can control impulse shopping by their children. > < Figure 3. Users can enjoy games more by using the smartphone as a controller >
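The idea of extending RPC so that a remote device's functionality looks local can be illustrated with a minimal Python sketch. It is not Mobile Plus's implementation: the classes `RemoteDevice` and `Proxy`, the JSON message format, and the `login` service are hypothetical, and a direct function call stands in for the network link between devices. What it shows is that the caller invokes `login` as if it were a local method, while the proxy marshals the call and the other device executes it.

```python
import json
from typing import Any, Callable, Dict


class RemoteDevice:
    """Hypothetical device that exposes app functionalities by name (server side)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._services: Dict[str, Callable[..., Any]] = {}

    def register(self, method: str, fn: Callable[..., Any]) -> None:
        # Make a local app functionality callable by other devices.
        self._services[method] = fn

    def handle(self, request: str) -> str:
        # Decode the serialized request, run the local function, serialize the result.
        msg = json.loads(request)
        result = self._services[msg["method"]](*msg["args"])
        return json.dumps({"result": result})


class Proxy:
    """Client-side stub: any method call is marshalled and forwarded to the remote device."""

    def __init__(self, device: RemoteDevice) -> None:
        self._device = device

    def __getattr__(self, method: str) -> Callable[..., Any]:
        def call(*args: Any) -> Any:
            request = json.dumps({"method": method, "args": list(args)})
            # A real system would send this over the network; here it is a direct call.
            response = self._device.handle(request)
            return json.loads(response)["result"]

        return call


# Usage: a tablet borrows the login functionality of the user's own phone.
phone = RemoteDevice("phone")
phone.register("login", lambda user: f"token-for-{user}")
phone_on_tablet = Proxy(phone)
token = phone_on_tablet.login("kim")
print(token)
```

Because the stub is generated dynamically from the method name, the calling code needs no changes to use a functionality that lives on another device, which mirrors the "no modifications" property described above.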

-

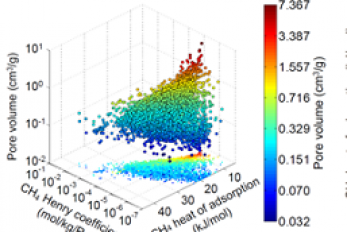

Analysis of Gas Adsorption Properties for Amorphou..

Professor Jihan Kim from the Department of Chemical and Biomolecular Engineering at KAIST has developed a method to predict the gas adsorption properties of amorphous porous materials. Metal-organic frameworks (MOFs) have a large surface area and a high density of pores, making them suitable for various energy and environmental applications. Although most MOFs are crystalline, their structures can deform during synthesis and/or industrial processes, losing long-range order. Unfortunately, without structural information, existing computer simulation techniques cannot be used to model these materials. In this research, Professor Kim’s research team demonstrated that the material properties of structurally deformed MOFs can be replaced with those of crystalline MOFs to indirectly analyze and model the properties of amorphous materials. First, the team simulated methane adsorption in over 12,000 crystalline MOFs to obtain a large training data set and constructed a structure-property map from the results. When the experimental data of amorphous MOFs were mapped onto this structure-property map, the gas adsorption properties of MOFs were consistent regardless of crystallinity. Based on these findings, the team selected, from the 12,000 candidates, the crystalline MOFs whose gas adsorption properties were most similar to those of the collapsed structure. The team then verified that the adsorption properties of these similar MOFs can be successfully transferred to the deformed MOFs across different temperatures and even to different gas molecules (e.g., hydrogen), demonstrating the transferability of properties. These findings allow material properties of porous materials such as MOFs to be predicted without structural information, and the techniques can be used to better predict and understand optimal materials for various applications, including carbon dioxide capture, gas storage, and separations.
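The selection-and-transfer step can be pictured as a nearest-neighbour search in property space. The following is a minimal Python illustration, not the team's code: it assumes that similarity is measured as the root-mean-square difference between uptake curves sampled at the same pressures and that the transferred value is a simple average over the k closest crystalline MOFs. The MOF names and all numbers are made up for illustration.

```python
import math
from typing import Dict, List


def rmse(a: List[float], b: List[float]) -> float:
    # Root-mean-square difference between two uptake curves at the same pressures.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def most_similar(target: List[float], library: Dict[str, List[float]], k: int) -> List[str]:
    # Rank crystalline MOFs by how closely their simulated curve matches the measured one.
    return sorted(library, key=lambda name: rmse(target, library[name]))[:k]


def transfer(neighbors: List[str], other_property: Dict[str, float]) -> float:
    # Predict a property of the collapsed MOF as the mean of its neighbours' simulated values.
    return sum(other_property[n] for n in neighbors) / len(neighbors)


# Toy numbers for illustration only (not data from the study).
methane_uptake = {  # simulated CH4 uptake of crystalline MOFs at three pressures
    "MOF-A": [1.0, 2.0, 3.0],
    "MOF-B": [1.2, 2.2, 3.1],
    "MOF-C": [4.0, 5.0, 6.0],
}
hydrogen_uptake = {"MOF-A": 10.0, "MOF-B": 12.0, "MOF-C": 30.0}  # simulated H2 uptake
collapsed_measured = [1.05, 2.05, 2.95]  # "experimental" CH4 uptake of a collapsed MOF

neighbors = most_similar(collapsed_measured, methane_uptake, k=2)
predicted_h2 = transfer(neighbors, hydrogen_uptake)
print(neighbors, predicted_h2)
```

With these toy values, MOF-A and MOF-B are the closest matches to the collapsed MOF's methane data, so its hydrogen uptake is predicted as the average of their simulated values, without ever needing the collapsed structure itself.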
This research was conducted in collaboration with Professor Dae-Woon Lim at Kyoto University, Professor Myunghyun Paik at Seoul National University, Professor Minyoung Yoon at Gachon University, and Aadesh Harale at Saudi Arabian Oil Company. The research was published online in the Proceedings of the National Academy of Sciences (PNAS) on 10 July, and the co-first authors were Ph.D. candidate WooSeok Jeong and Professor Dae-Woon Lim. This research was funded by the Saudi Aramco-KAIST CO2 Management Center. < Figure 1. Trends in the structure-property map and in collapsed structures > < Figure 2. Transferability between the experimental results of collapsed MOFs and the simulation results of crystalline MOFs >