Kaist


Students News

-

E. coli Engineered to Grow on CO₂ and Formic Acid ..

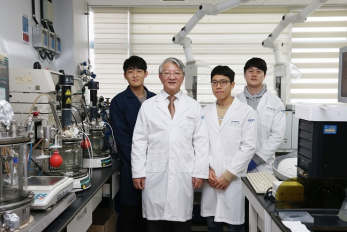

- An E. coli strain that can grow to a relatively high cell density solely on CO₂ and formic acid was developed through metabolic engineering. -

< From left: Jong An Lee, Distinguished Professor Sang Yup Lee, Dr. Junho Bang, and Dr. Jung Ho Ahn >

Most biorefinery processes have relied on biomass as the raw material for producing chemicals and materials. Although using CO₂ as a carbon source in biorefineries is desirable, it has not been possible to make common microbial strains such as E. coli grow on CO₂. Now, a metabolic engineering research group at KAIST has developed a strategy for growing an E. coli strain to a higher cell density solely on CO₂ and formic acid.

Formic acid is a one-carbon carboxylic acid that can be easily produced from CO₂ by a variety of methods. Because it is easier to store and transport than CO₂, formic acid can be considered a good liquid-form alternative to CO₂.

With support from the C1 Gas Refinery R&D Center and the Ministry of Science and ICT, a research team led by Distinguished Professor Sang Yup Lee developed an engineered E. coli strain that uses CO₂ and formic acid as its sole carbon sources and grows to a cell density up to 11-fold higher than previously reported. This work was published in Nature Microbiology on September 28.

Although several research groups have recently reported E. coli strains capable of growing on CO₂ and formic acid, their maximum cell growth remained too low (an optical density of around 1), so producing chemicals from CO₂ and formic acid was still far from realization. The team had previously reconstructed the tetrahydrofolate cycle and the reverse glycine cleavage pathway to build an engineered E. coli strain that can sustain growth on CO₂ and formic acid.
To further enhance growth, the research team introduced the previously designed synthetic CO₂ and formic acid assimilation pathway along with two formate dehydrogenases. They also fine-tuned metabolic fluxes, enhanced the gluconeogenic flux, and optimized the levels of cytochrome bo3 and bd-I ubiquinol oxidases for ATP generation. The resulting strain grew to a relatively high OD600 of 7 to 11, showing promise as a platform strain that grows solely on CO₂ and formic acid.

Professor Lee said, “We engineered E. coli that can grow to a higher cell density using only CO₂ and formic acid. We think that this is an important step forward, but this is not the end. The engineered strain we developed still needs further engineering so that it can grow faster to a much higher density.” Professor Lee’s team is continuing to develop such a strain. “In the future, we would be delighted to see the production of chemicals from an engineered E. coli strain using CO₂ and formic acid as sole carbon sources,” he added.

< Figure: Metabolic engineering strategies and central metabolic pathways of the engineered E. coli strain that grows on CO₂ and formic acid. Carbon assimilation and reducing power regeneration pathways are described, along with the engineering strategies and genetic modifications employed in the strain. Figure from Nature Microbiology. >

Profile: Distinguished Professor Sang Yup Lee leesy@kaist.ac.kr http://mbel.kaist.ac.kr Department of Chemical and Biomolecular Engineering KAIST

-

Taesik Gong Named Google PhD Fellow

< PhD Candidate Taesik Gong >

PhD candidate Taesik Gong from the School of Computing was named a 2020 Google PhD Fellow in the field of machine learning. The Google PhD Fellowship Program has recognized and supported outstanding graduate students in computer science and related fields since 2009. Gong is one of two Korean students selected as Google PhD Fellows this year, out of a total of 53 students in 12 fields worldwide.

Gong’s research on condition-independent mobile sensing powered by machine learning earned him this year’s fellowship. He has published and presented his work at many conferences, including ACM SenSys and ACM UbiComp, and has worked as a research intern at Microsoft Research Asia and Nokia Bell Labs. Gong also won the NAVER PhD Fellowship Award in 2018. (END)

-

Sturdy Fabric-Based Piezoelectric Energy Harvester..

KAIST researchers presented a highly flexible but sturdy wearable piezoelectric harvester made with a simple, easy fabrication process of hot pressing and tape casting. This energy harvester, which has a record-high interfacial adhesion strength, brings the manufacture of embedded wearable electronics one step closer. The research team, led by Professor Seungbum Hong, said that the novelty of this result lies in its simplicity, applicability, and durability, and in its new way of characterizing wearable electronic devices.

Wearable devices are increasingly used in a wide array of applications, from small electronics to embedded devices such as sensors, actuators, displays, and energy harvesters. Despite their many advantages, high costs and complex fabrication processes have remained obstacles to commercialization, and their durability has frequently been questioned.

To address these issues, Professor Hong’s team developed a new fabrication process and an analysis technique for testing the mechanical properties of affordable wearable devices. For the fabrication, the team used hot pressing and tape casting to bond a polyester fabric structure to a polymer film. Hot pressing is commonly used in making batteries and fuel cells because it produces strong adhesion, and the process takes only two to three minutes. The new fabrication process will enable a device to be applied directly to ordinary garments by hot pressing, just as graphic patches are attached to garments with a heat press.

In particular, when the polymer film is hot pressed onto a fabric below its crystallization temperature, it transforms into an amorphous state. In this state, it conforms tightly to the concave surface of the fabric and infiltrates the gaps between the transverse wefts and longitudinal warps. These features result in high interfacial adhesion strength.
For this reason, hot pressing has the potential to reduce fabrication costs by allowing fabric-based wearable devices to be applied directly to common garments. In addition to the conventional bending-cycle durability test, the newly introduced surface and interfacial cutting analysis system (SAICAS) demonstrated the high mechanical durability of the fabric-based wearable device by measuring the high interfacial adhesion strength between the fabric and the polymer film.

Professor Hong said the study lays a new foundation for the manufacturing process and analysis of wearable devices using fabrics and polymers. He added that his team was the first to use SAICAS in the field of wearable electronics to test the mechanical properties of polymer-based wearable devices. SAICAS is more precise than conventional methods (peel test, tape test, and microstretch test) because it measures adhesion strength both qualitatively and quantitatively.

Professor Hong explained, “This study could enable the commercialization of highly durable wearable devices based on the analysis of their interfacial adhesion strength. Our study lays a new foundation for the manufacturing process and analysis of other devices using fabrics and polymers. We look forward to fabric-based wearable electronics hitting the market very soon.”

The results of this study were registered as a domestic patent in Korea last year and published in Nano Energy this month. The study was conducted in collaboration with Professor Yong Min Lee of the Department of Energy Science and Engineering at DGIST, Professor Kwangsoo No of the Department of Materials Science and Engineering at KAIST, and Professor Seunghwa Ryu of the Department of Mechanical Engineering at KAIST.
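SAICAS adhesion results are often reported as a peel strength: the horizontal force measured once the blade travels along the interface, divided by the blade width. The sketch below assumes that convention and uses a hypothetical force trace; it is not the team's analysis code, and the article does not give their measured values.

```python
from statistics import mean

def peel_strength(horizontal_forces_n, blade_width_m, steady_start):
    """Average peel strength in N/m from a SAICAS-style horizontal force trace.

    Assumption: peel strength = mean horizontal force / blade width, averaged
    only over samples recorded after the blade reaches the interface
    (index steady_start onward).
    """
    if blade_width_m <= 0:
        raise ValueError("blade width must be positive")
    steady = horizontal_forces_n[steady_start:]
    if not steady:
        raise ValueError("no samples after steady_start")
    return mean(steady) / blade_width_m

# Hypothetical trace in newtons: the blade cuts down through the film, then
# travels along the film/fabric interface at a roughly constant force.
forces = [0.05, 0.12, 0.20, 0.25, 0.25, 0.26, 0.24, 0.25]
print(peel_strength(forces, blade_width_m=1e-3, steady_start=3))  # roughly 250 N/m
```

Averaging only the steady-state segment keeps the initial cutting-in ramp from biasing the result; deciding where that segment starts is a judgment call with real data.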
This study was supported by the High-Risk High-Return Project and the Global Singularity Research Project at KAIST, the National Research Foundation, and the Ministry of Science and ICT in Korea.

< Figure 1. Fabrication process, structures, and output signals of a fabric-based wearable energy harvester. >
< Figure 2. Measurement of interfacial adhesion strength using SAICAS >

-Publication: Jaegyu Kim, Seoungwoo Byun, Sangryun Lee, Jeongjae Ryu, Seongwoo Cho, Chungik Oh, Hongjun Kim, Kwangsoo No, Seunghwa Ryu, Yong Min Lee, Seungbum Hong*, Nano Energy 75 (2020), 104992. https://doi.org/10.1016/j.nanoen.2020.104992
-Profile: Professor Seungbum Hong seungbum@kaist.ac.kr http://mii.kaist.ac.kr/ Department of Materials Science and Engineering KAIST

-

Before Eyes Open, They Get Ready to See

- Spontaneous retinal waves can generate long-range horizontal connectivity in the visual cortex. -

A KAIST research team’s computational simulations demonstrated that waves of spontaneous neural activity in the retinas of mammals whose eyes are still closed drive the development of long-range horizontal connections in the visual cortex during early developmental stages. This finding, featured as the cover article of the August 19 issue of the Journal of Neuroscience, resolves a long-standing puzzle in visual neuroscience: how functional architectures in the mammalian visual cortex, especially the long-range horizontal connectivity known as “feature-specific” circuitry, are organized before eye-opening.

To prepare an animal to see when its eyes open, neural circuits in the brain’s visual system must begin developing earlier. However, the proper development of many brain regions involved in vision generally requires sensory input through the eyes. In the primary visual cortex of higher mammalian taxa, cortical neurons with similar functional tuning to a visual feature are linked by long-range horizontal circuits that play a crucial role in visual information processing. Surprisingly, these long-range horizontal connections in the primary visual cortex of higher mammals emerge before the onset of sensory experience, and the mechanism underlying this phenomenon has remained elusive.

To investigate this mechanism, a group of researchers led by Professor Se-Bum Paik from the Department of Bio and Brain Engineering at KAIST ran computational simulations of early visual pathways using data obtained from the retinal circuits of young animals before eye-opening, including cats, monkeys, and mice.
From these simulations, the researchers found that spontaneous waves propagating in ON and OFF retinal mosaics can initialize the wiring of long-range horizontal connections by selectively co-activating cortical neurons with similar functional tuning, whereas equivalent random activity cannot induce such organization. The simulations also showed that the emergent long-range horizontal connections can induce patterned cortical activity that matches the topography of the underlying functional maps, even in the salt-and-pepper type organizations observed in rodents. This result implies that the model developed by Professor Paik and his group can provide a universal principle for the development of long-range horizontal connections in both higher mammals and rodents.

Professor Paik said, “Our model provides a deeper understanding of how the functional architectures in the visual cortex can originate from the spatial organization of the periphery, without sensory experience during early developmental periods.” He continued, “We believe that our findings will be of great interest to scientists working in a wide range of fields such as neuroscience, vision science, and developmental biology.”

This work was supported by the National Research Foundation of Korea (NRF). Undergraduate student Jinwoo Kim participated in this research project through the Undergraduate Research Participation (URP) Program at KAIST and presented the findings as the lead author.

< Figure 1. Computational simulation of retinal waves in model neural networks >
< Figure 2. Spontaneous retinal wave and long-range horizontal connections >
< Figure 3. Illustration of the retinal waves and their projection to the visual cortex leading to the development of long-range horizontal connections >
< Image. Journal Cover Image >

Figures and image credit: Professor Se-Bum Paik, KAIST
Image usage restrictions: News organizations may use or redistribute these figures and image, with proper attribution, as part of news coverage of this paper only.
Publication: Jinwoo Kim, Min Song, and Se-Bum Paik. (2020). Spontaneous retinal waves generate long-range horizontal connectivity in visual cortex. Journal of Neuroscience. Available online at https://www.jneurosci.org/content/early/2020/07/17/JNEUROSCI.0649-20.2020
Profile: Se-Bum Paik Assistant Professor sbpaik@kaist.ac.kr http://vs.kaist.ac.kr/ VSNN Laboratory Department of Bio and Brain Engineering Program of Brain and Cognitive Engineering http://kaist.ac.kr Korea Advanced Institute of Science and Technology (KAIST) Daejeon, Republic of Korea
Profile: Jinwoo Kim Undergraduate Student bugkjw@kaist.ac.kr Department of Bio and Brain Engineering, KAIST
Profile: Min Song Ph.D. Candidate night@kaist.ac.kr Program of Brain and Cognitive Engineering, KAIST (END)

-

Deep Learning-Based Cough Recognition Model Helps ..

The Center for Noise and Vibration Control at KAIST announced that its cough detection camera recognizes where coughing occurs and visualizes those locations. The resulting cough recognition camera can track and record information about the person who coughed, their location, and the number of coughs in real time.

Professor Yong-Hwa Park from the Department of Mechanical Engineering developed a deep learning-based cough recognition model that classifies coughing sounds in real time. The cough event classification model is combined with a sound camera that visualizes cough events in public places and indicates their locations in the video image. The research team said they achieved a best test accuracy of 87.4%.

Professor Park said that it will be useful medical equipment during epidemics in public places such as schools, offices, and restaurants, and for constantly monitoring patients’ conditions in hospital rooms. Fever and coughing are the most relevant symptoms of respiratory disease, and fever can already be detected remotely with thermal cameras. This new technology is expected to be very helpful for detecting epidemic transmission in a non-contact way.

To develop the cough recognition model, the team used supervised learning with a convolutional neural network (CNN). The model performs binary classification on a one-second sound profile feature, labeling the input as either a cough event or another sound. For training and evaluation, datasets were collected from Audioset, DEMAND, ETSI, and TIMIT. Coughing and other sounds were extracted from Audioset, and the remaining datasets were used as background noise for data augmentation so that the model could generalize to the various background noises found in public places.
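The augmentation recipe the article describes (mixing with background noise at a ratio of 0.15 to 0.75, scaling the overall volume by 0.25 to 1.0, then splitting 9:1 into training and evaluation sets) can be sketched in plain Python. The sketch assumes the mixing ratio is the weight given to the background noise and that clips are equal-length lists of samples; the function names and the 16 kHz sample rate are hypothetical.

```python
import random

def augment(foreground, background, rng):
    """Mix a cough (or other foreground) clip with background noise, then rescale volume.

    Assumptions (not stated in the article): clips are equal-length lists of
    samples in [-1, 1], and the mixing ratio is the weight of the background.
    """
    ratio = rng.uniform(0.15, 0.75)  # background-noise mixing ratio
    gain = rng.uniform(0.25, 1.0)    # overall volume, mimicking different distances
    return [gain * ((1.0 - ratio) * f + ratio * b) for f, b in zip(foreground, background)]

def split_train_eval(samples, rng):
    """Shuffle a copy of the samples and split them 9:1 into training and evaluation sets."""
    shuffled = list(samples)
    rng.shuffle(shuffled)
    cut = len(shuffled) * 9 // 10
    return shuffled[:cut], shuffled[cut:]

# Usage with synthetic stand-in clips (one second at a hypothetical 16 kHz rate).
rng = random.Random(0)
clip_len = 16_000
cough = [rng.uniform(-1, 1) for _ in range(clip_len)]
noise = [rng.uniform(-1, 1) for _ in range(clip_len)]
dataset = [augment(cough, noise, rng) for _ in range(20)]
train, evaluation = split_train_eval(dataset, rng)
print(len(train), len(evaluation))  # 18 2
```

Note that the split only covers the augmented data; as the article states, the test set was recorded separately in a real office, which keeps the reported accuracy from being measured on synthetic mixtures.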
The dataset was augmented by mixing coughing sounds and other sounds from Audioset with background noise at a ratio of 0.15 to 0.75, and the overall volume was then scaled by 0.25 to 1.0 times to generalize the model for various distances. The training and evaluation datasets were constructed by dividing the augmented dataset in a 9:1 ratio, and the test dataset was recorded separately in a real office environment.

To optimize the network model, training was conducted with various combinations of five acoustic features (including the spectrogram, Mel-scaled spectrogram, and Mel-frequency cepstral coefficients) and seven optimizers. The performance of each combination was compared on the test dataset. The best test accuracy of 87.4% was achieved with the Mel-scaled spectrogram as the acoustic feature and ASGD as the optimizer.

The trained cough recognition model was combined with a sound camera composed of a microphone array and a camera module. A beamforming process is applied to the collected acoustic data to estimate the direction of the incoming sound source. The integrated cough recognition model then determines whether the sound is a cough. If it is, the cough location is visualized as a contour image with a ‘cough’ label at the position of the coughing sound source in the video image.

A pilot test of the cough recognition camera in an office environment showed that it successfully distinguishes cough events from other events even in a noisy environment. In addition, it can track the location of the person who coughed and count the number of coughs in real time. The performance will be improved further with additional training data obtained from other real environments such as hospitals and classrooms.

Professor Park said, “In a pandemic situation like we are experiencing with COVID-19, a cough detection camera can contribute to the prevention and early detection of epidemics in public places.
Especially when applied to a hospital room, the patient’s condition can be tracked 24 hours a day, supporting more accurate diagnoses while reducing the effort required of the medical staff.” This study was conducted in collaboration with SM Instruments Inc.

< Figure 1. Architecture of the cough recognition model based on CNN. >
< Figure 2. Examples of sound features used to train the cough recognition model. >
< Figure 3. Cough detection camera and its signal processing block diagram. >
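The sound camera localizes coughs by beamforming over a microphone array. As a much simpler stand-in for that step (not the camera's actual algorithm), the sketch below estimates the direction of a sound from just two microphones: it finds the time delay τ that maximizes their cross-correlation and converts it to a far-field angle with θ = arcsin(c·τ/d). The speed of sound, microphone spacing d, sample rate, and test signal are all assumed values.

```python
import math
import random

SPEED_OF_SOUND = 343.0  # m/s, approximate value in air at room temperature (assumption)

def best_lag(x, y, max_lag):
    """Lag in samples (y relative to x) that maximizes the cross-correlation sum x[i] * y[i + lag]."""
    n = len(x)
    best, best_score = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        lo, hi = max(0, -lag), min(n, n - lag)  # keep both indices inside the signals
        score = sum(x[i] * y[i + lag] for i in range(lo, hi))
        if score > best_score:
            best, best_score = lag, score
    return best

def direction_of_arrival(mic_a, mic_b, fs, spacing_m):
    """Far-field angle in degrees from broadside; positive means the sound reached mic_a first."""
    max_lag = int(spacing_m / SPEED_OF_SOUND * fs) + 1  # largest physically possible delay
    tau = best_lag(mic_a, mic_b, max_lag) / fs
    s = max(-1.0, min(1.0, SPEED_OF_SOUND * tau / spacing_m))  # clamp rounding error
    return math.degrees(math.asin(s))

# Usage: white noise that reaches mic_b 10 samples after mic_a.
rng = random.Random(1)
fs, spacing, delay = 48_000, 0.2, 10
mic_a = [rng.uniform(-1, 1) for _ in range(2000)]
mic_b = [0.0] * delay + mic_a[:-delay]
print(round(direction_of_arrival(mic_a, mic_b, fs, spacing), 1))  # about 20.9
```

A real array beamformer combines many microphone pairs (or steers and sums all channels) to produce the contour map the article describes; the two-microphone version only shows how a delay maps to a direction.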

-

‘SoundWear’ a Heads-Up Sound Augmentation Gadget H..

In this digital era, there is growing concern that children spend most of their playtime watching TV, playing computer games, and staring at mobile phones in a ‘head-down’ posture, even outdoors. To counter such concerns, KAIST researchers designed a wearable bracelet that uses sound augmentation to put digital technology to work for the benefits of play. The research team also investigated how sound influences the physical, social, and imaginative aspects of children’s play experiences.

Play is a large part of an enjoyable and rewarding life, especially for children. In the past, much of children’s playtime took place outdoors, and outdoor play has long been praised for its essential role in providing opportunities for physical activity, improving social skills, and boosting imaginative thinking.

Motivated by these concerns, a KAIST research team led by Professor Woohun Lee and researcher Jiwoo Hong from the Department of Industrial Design made use of sound augmentation, whose ambient and omnidirectional characteristics can motivate playful experiences by facilitating imagination and enhancing social awareness. Despite these beneficial characteristics, only a few studies have explored sound interaction as a technology for augmenting outdoor play, because sound is abstract when conveying information in an open outdoor space. There is also a lack of empirical evidence regarding its effect on children’s play experiences.

Professor Lee’s team designed and implemented an original bracelet-type wearable device called SoundWear. This device uses non-speech sound as its core digital feature to help children broaden their imaginations and improvise their outdoor games.
< Figure 1: Four phases of the SoundWear user scenario: (A) exploration, (B) selection, (C) sonification, and (D) transmission >

Children equipped with SoundWear could explore multiple sounds (i.e., everyday and instrumental sounds) on SoundPalette, pick a desired sound, generate the sound with a swinging movement, and transfer the sound between multiple devices during their outdoor play. Both the quantitative and qualitative results of a user study indicated that augmenting playtime with everyday sounds triggered children’s imagination and resulted in distinct play behaviors, whereas instrumental sounds were transparently integrated into existing outdoor games while fully preserving the physical, social, and imaginative benefits of play. The team also found that the gestural interaction of SoundWear and the free choice of sounds on SoundPalette helped children gain a sense of achievement and ownership of the sounds. This led children to be physically and socially active while playing.
PhD candidate Hong said, “Our work can encourage discussion on using digital technology that entails sound augmentation and gestural interactions for understanding and cultivating creative improvisations, social pretenses, and ownership of digital materials in digitally augmented play experiences.” Professor Lee also expects the findings to help parents and educators, saying, “I hope the verified effect of digital technology on children’s play informs parents and educators to help them make more informed decisions and incorporate the playful and creative usage of new media, such as mobile phones and smart toys, for young children.”

This research, titled “SoundWear: Effect of Non-speech Sound Augmentation on the Outdoor Play Experience of Children,” was presented at DIS 2020 (the ACM Conference on Designing Interactive Systems), held virtually in Eindhoven, the Netherlands, from July 6 to 20. The work received an Honorable Mention Award, given to the top 5% of all submissions to the conference.

< Figure 2. Differences in social interaction, physical activity, and imaginative utterances under the baseline, everyday sound, and instrumental sound conditions >

Link to download the full-text paper: https://files.cargocollective.com/698535/disfp9072-hongA.pdf
-Profile: Professor Woohun Lee woohun.lee@kaist.ac.kr http://wonderlab.kaist.ac.kr Department of Industrial Design (ID) KAIST

-

Atomic Force Microscopy Reveals Nanoscale Dental E..

< Professor Seungbum Hong (left) and Dr. Chungik Oh (right) >

KAIST researchers used atomic force microscopy to quantitatively evaluate how acidic and sugary drinks affect human tooth enamel at the nanoscale. This novel approach is useful for measuring the mechanical and morphological changes that occur over time during beverage-induced enamel erosion.

Enamel is the hard white substance that forms the outer part of a tooth. It is the hardest substance in the human body, even stronger than bone. Its resilient surface is 96 percent mineral, the highest percentage of any body tissue, making it durable and damage-resistant. Enamel acts as a barrier that protects the soft inner layers of the tooth, but it can be degraded by acids and sugars. Enamel erosion occurs when tooth enamel is overexposed to acidic and sugary food and drinks. If left untreated, the loss of enamel can lead to various tooth conditions, including stains, fractures, sensitivity, and translucence. Once tooth enamel is damaged, it cannot be restored. Therefore, thorough studies on how enamel erosion starts and develops, especially at its initial stages, are of high scientific and clinical relevance for maintaining dental health.

A research team led by Professor Seungbum Hong from the Department of Materials Science and Engineering at KAIST reported a new method of applying atomic force microscopy (AFM) techniques to characterize this early stage of enamel erosion at the nanoscale. This study was published in the Journal of the Mechanical Behavior of Biomedical Materials (JMBBM) on June 29. AFM is a very-high-resolution type of scanning probe microscopy (SPM), with demonstrated resolution on the order of fractions of a nanometer (nm), one billionth of a meter.
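The roughness changes reported in this study come from AFM height maps. A common way to summarize such a map is with the arithmetic-mean (Ra) and root-mean-square (Rq) roughness; the article does not say which parameter the team used, so this is only a sketch. It assumes a tilt-corrected height map stored as nested lists, and the numbers are illustrative, not the study's measurements.

```python
import math

def roughness(height_map):
    """Return (Ra, Rq) for a 2D height map given as a list of rows (e.g., heights in nm).

    Ra: mean absolute deviation from the mean height.
    Rq: root-mean-square deviation from the mean height.
    Assumes the map has already been flattened (sample tilt removed).
    """
    z = [h for row in height_map for h in row]
    if not z:
        raise ValueError("empty height map")
    mean_z = sum(z) / len(z)
    dev = [h - mean_z for h in z]
    ra = sum(abs(d) for d in dev) / len(dev)
    rq = math.sqrt(sum(d * d for d in dev) / len(dev))
    return ra, rq

# Illustrative 2 x 2 maps (nm), not data from the study.
before = [[1.0, 1.2], [0.8, 1.0]]
after = [[0.0, 2.0], [2.0, 0.0]]
ra0, rq0 = roughness(before)
ra1, rq1 = roughness(after)
print(f"Rq before: {rq0:.3f} nm, after: {rq1:.3f} nm, ratio: {rq1 / rq0:.1f}")
```

Rq weights large peaks and pits more heavily than Ra, so it tends to react more strongly to localized etching such as the preferential attack at scratches described in the study.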
AFM generates images by scanning a small cantilever over the surface of a sample and can precisely measure the sample’s structure and mechanical properties, such as surface roughness and elastic modulus. The co-lead authors of the study, Dr. Panpan Li and Dr. Chungik Oh, chose three popular commercially available beverages, Coca-Cola®, Sprite®, and Minute Maid® orange juice, and immersed tooth enamel in these drinks for increasing periods to analyze their impact on human teeth and monitor the etching process on the enamel. Five healthy human molars were obtained from volunteers aged 20 to 35 who visited the KAIST Clinic. After extraction, the teeth were preserved in distilled water until the experiment. The drinks were purchased and opened right before the immersion experiment, and the team used AFM to measure the surface topography and map the elastic modulus.

The researchers observed that the surface roughness of the tooth enamel increased significantly with immersion time, while the elastic modulus of the enamel surface decreased drastically. The enamel surface became five times rougher after 10 minutes of immersion in the beverages, and its elastic modulus was five times lower after five minutes. Additionally, the team found preferential etching in scratched tooth enamel. The study revealed that brushing too hard, or using toothpastes with polishing particles advertised to remove dental biofilms, can scratch the enamel surface, and these scratches can become preferential sites for etching.

Professor Hong said, “Our study shows that AFM is a suitable technique to characterize variations in the morphology and mechanical properties of dental erosion quantitatively at the nanoscale level.” This work was supported by the National Research Foundation (NRF), the Ministry of Science and ICT (MSIT), and the KUSTAR-KAIST Institute of Korea. A dentist at the KAIST Clinic, Dr.
Suebean Cho, Dr. Sangmin Shin from the Smile Well Dental, and Professor Kack-Kyun Kim at the Seoul National University School of Dentistry also collaborated in this project. < Figure 1. Tooth sample preparation process for atomic force microscopy (a, b, c), and an atomic force microscopy probe image (right). > < Figure 2. Changes in surface roughness (top) and modulus of elasticity (bottom) of tooth enamel exposed to popular beverages imaged by atomic force microscopy. > Publication: Li, P., et al. (2020) ‘Nanoscale effects of beverages on enamel surface of human teeth: An atomic force microscopy study’. Journal of the Mechanical Behavior of Biomedical Materials (JMBBM), Volume 110. Article No. 103930. Available online at https://doi.org/10.1016/j.jmbbm.2020.103930 Profile: Seungbum Hong, Ph.D. Associate Professor seungbum@kaist.ac.kr http://mii.kaist.ac.kr/ Materials Imaging and Integration (MII) Lab. Department of Materials Science and Engineering (MSE) Korea Advanced Institute of Science and Technology (KAIST) https://www.kaist.ac.kr Daejeon 34141, Korea (END)

-

Sulfur-Containing Polymer Generates High Refractiv..

Transparent polymer thin film with a refractive index exceeding 1.9 to serve as a new platform material for high-end optical device applications

Researchers reported a novel one-step vapor deposition process for producing a highly transparent polymer film with a high refractive index. The sulfur-containing polymer (SCP) film produced by Professor Sung Gap Im’s research team at KAIST’s Department of Chemical and Biomolecular Engineering exhibited excellent environmental stability and chemical resistance, which is highly desirable for long-term use in optical devices. Its refractive index exceeding 1.9, combined with full transparency across the entire visible range, will help expand the applications of optoelectronic devices.

The refractive index is the ratio of the speed of light in a vacuum to the phase velocity of light in a material; it is used as a measure of how much the path of light bends when it passes into the material. With the miniaturization of the optical parts used in mobile devices and imaging, demand has been growing rapidly for transparent materials with a high refractive index that refract light more strongly in a thin film. Because polymers have outstanding physical properties and can easily be processed into various forms, they are widely used in applications such as plastic eyeglass lenses. However, very few polymers developed so far have a refractive index exceeding 1.75, and existing high refractive index polymers require costly materials and complicated manufacturing processes. Moreover, core technologies for producing such materials have been dominated by Japanese companies, causing long-standing challenges for Korean manufacturers. Securing a stable supply of high-performance, high refractive index materials is crucial for producing optical devices that are lighter, more affordable, and can be freely manipulated.
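The definition of refractive index as n = c/v, together with Snell's law (n₁ sin θ₁ = n₂ sin θ₂), is what makes a higher index bend light more strongly. The short sketch below assumes light enters from air (n ≈ 1.0) at 45° and compares an index of 1.5, the 1.75 mark mentioned for existing polymers, and the 1.9 reported for the SCP film; 1.5 is only an illustrative value.

```python
import math

C_VACUUM = 299_792_458.0  # speed of light in vacuum, m/s

def phase_velocity(n):
    """Phase velocity of light in a medium with refractive index n, from n = c / v."""
    return C_VACUUM / n

def refraction_angle(theta_in_deg, n1, n2):
    """Snell's law n1*sin(theta1) = n2*sin(theta2); returns theta2 in degrees,
    or None when no refracted ray exists (total internal reflection)."""
    s = n1 * math.sin(math.radians(theta_in_deg)) / n2
    if abs(s) > 1:
        return None
    return math.degrees(math.asin(s))

# Light entering each material from air at 45 degrees.
for n in (1.5, 1.75, 1.9):
    v = phase_velocity(n)
    theta = refraction_angle(45.0, 1.0, n)
    print(f"n = {n}: v = {v / 1e8:.3f} x 1e8 m/s, refracted angle = {theta:.2f} deg")
```

The refracted ray lies closer to the surface normal as n grows, which is why a higher-index film can achieve the same optical effect with less material.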
The research team successfully manufactured an entirely new polymer thin film material with a refractive index exceeding 1.9 and excellent transparency, using just a one-step chemical reaction. The SCP film showed outstanding optical transparency across the entire visible light region, presumably due to its uniformly dispersed, short-segment polysulfide chains, a distinct feature that cannot be achieved in polymerizations with molten sulfur.

< Figure 1. A schematic illustration showing the co-polymerization of vaporized sulfur to synthesize the high refractive index thin film. >

The team took advantage of the fact that elemental sulfur sublimes easily, polymerizing the vaporized sulfur with a variety of substances to produce a high refractive index polymer. This method suppresses the formation of overly long S-S chains while achieving outstanding thermal stability at high sulfur concentrations, generating non-crystalline polymers that are transparent across the entire visible spectrum. Because the process takes place in the vapor phase, the high refractive index thin film can be coated not just on silicon wafers or glass substrates but on a wide range of textured surfaces as well. The team believes this thin film polymer is the first to have achieved an ultrahigh refractive index exceeding 1.9.

Professor Im said, “This high-performance polymer film can be created in a simple one-step manner, which is highly advantageous in the synthesis of SCPs with a high refractive index. This will serve as a platform material for future high-end optical device applications.” This study, in collaboration with research teams from Seoul National University and Kyung Hee University, was reported in Science Advances.
(Title: One-Step Vapor-Phase Synthesis of Transparent High-Refractive Index Sulfur-Containing Polymers) This research was supported by the Ministry of Science and ICT’s Global Frontier Project (Center for Advanced Soft-Electronics), Leading Research Center Support Program (Wearable Platform Materials Technology Center), and Basic Science Research Program (Advanced Research Project).

-

Quantum Classifiers with Tailored Quantum Kernel

Quantum information scientists have introduced a new method for machine learning classification in quantum computing. The non-linear quantum kernels in a quantum binary classifier provide new insights for improving the accuracy of quantum machine learning, which is considered potentially capable of outperforming current AI technology.

The research team led by Professor June-Koo Kevin Rhee from the School of Electrical Engineering proposed a quantum classifier based on quantum state fidelity that uses a different initial state and replaces the Hadamard classification with a swap test. Unlike the conventional approach, this method is expected to significantly enhance classification when the training dataset is small, by exploiting the quantum advantage in finding non-linear features in a large feature space.

Quantum machine learning holds promise as one of the most important applications of quantum computing. In machine learning, one fundamental problem for a wide range of applications is classification: recognizing patterns in labeled training data in order to assign a label to new, previously unseen data. The kernel method has been an invaluable classification tool for identifying non-linear relationships in complex data, and it has more recently been introduced in quantum machine learning with great success. The ability of quantum computers to efficiently access and manipulate data in the quantum feature space can open opportunities for quantum techniques to enhance various existing machine learning methods.

In the classification algorithm with a nonlinear kernel, given a quantum test state, the protocol calculates the weighted power sum of the fidelities between the test state and the training data in quantum parallel, using a swap-test circuit followed by two single-qubit measurements (see Figure 1). This requires only a small number of quantum data operations regardless of the size of the data.
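As a rough classical illustration of the kernel described above, the sketch below computes state fidelities |⟨x_m|x̃⟩|² directly from complex amplitude vectors and classifies a test state by the sign of a weighted power sum. The swap-test relation P(ancilla = 0) = (1 + |⟨a|b⟩|²)/2 is standard; the specific decision rule, weights, and example states are assumptions for illustration. This is a classical simulation, not the team's quantum circuit or quantum-forking protocol.

```python
import math

def normalize(v):
    """Scale a complex amplitude vector to unit norm."""
    norm = math.sqrt(sum(abs(a) ** 2 for a in v))
    return [a / norm for a in v]

def fidelity(a, b):
    """State fidelity |<a|b>|^2 for normalized amplitude vectors (complex parts included)."""
    overlap = sum(x.conjugate() * y for x, y in zip(a, b))
    return abs(overlap) ** 2

def swap_test_p0(a, b):
    """Probability that a swap test's ancilla reads 0: (1 + |<a|b>|^2) / 2."""
    return (1 + fidelity(a, b)) / 2

def classify(train, labels, weights, test, power=1):
    """Sign of a hypothetical weighted power sum of fidelities, labels in {+1, -1}:
    score = sum_m w_m * y_m * F(x_m, test) ** power."""
    score = sum(w * y * fidelity(x, test) ** power
                for x, y, w in zip(train, labels, weights))
    return 1 if score >= 0 else -1

# Usage with two single-qubit training states (one with a complex amplitude).
train = [normalize([1, 0.1j]), normalize([0.1, 1])]
labels = [1, -1]
weights = [0.5, 0.5]
print(classify(train, labels, weights, normalize([1, 0.3])))           # 1
print(classify(train, labels, weights, normalize([0.2, 1]), power=2))  # -1
print(swap_test_p0(train[0], train[0]))  # close to 1.0 for identical states
```

On hardware, each fidelity would be estimated from repeated swap-test measurements via F = 2·P(0) − 1 rather than computed from amplitudes; raising the power sharpens the kernel so that the nearest training states dominate the decision.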
The novelty of this approach lies in the fact that labeled training data can be densely packed into a quantum state and then compared to the test data. The KAIST team, in collaboration with researchers from the University of KwaZulu-Natal (UKZN) in South Africa and Data Cybernetics in Germany, has further advanced the rapidly evolving field of quantum machine learning by introducing quantum classifiers with tailored quantum kernels. This study was reported in npj Quantum Information in May. The input data are either classical data represented via a quantum feature map or intrinsically quantum data, and the classification is based on a kernel function that measures the closeness of the test data to the training data. Dr. Daniel Park at KAIST, one of the lead authors of this research, said that the quantum kernel can be tailored systematically to an arbitrary power sum, which makes it an excellent candidate for real-world applications. Professor Rhee said that quantum forking, a technique the team invented previously, makes it possible to start the protocol from scratch even when all the labeled training data and the test data are independently encoded in separate qubits. Professor Francesco Petruccione from UKZN explained, “The state fidelity of two quantum states includes the imaginary parts of the probability amplitudes, which enables use of the full quantum feature space.” To demonstrate the usefulness of the classification protocol, Carsten Blank from Data Cybernetics implemented the classifier on the five-qubit IBM quantum computer that is freely available to public users via a cloud service, and compared the results with classical simulations. “This is a promising sign that the field is progressing,” Blank noted. < Figure 1: A quantum circuit for implementing the non-linear kernel-based binary classification. >
Link to download the full-text paper: https://www.nature.com/articles/s41534-020-0272-6 Profile: Professor June-Koo Kevin Rhee rhee.jk@kaist.ac.kr Professor, School of Electrical Engineering Director, ITRC of Quantum Computing for AI KAIST Daniel Kyungdeock Park kpark10@kaist.ac.kr Research Assistant Professor School of Electrical Engineering KAIST

-

A New Strategy for Early Evaluations of CO2 Utiliz..

- A three-step evaluation procedure based on technology readiness levels helps identify the most efficient technology before R&D manpower and investments are allocated to CO2 utilization technologies. - < Professor Jay Hyung Lee (left) and Dr. Kosan Roh (right) > Researchers presented a unified framework for early-stage evaluations of a variety of emerging CO2 utilization (CU) technologies. The three-step procedure allows a large number of potential CU technologies, including those at a low level of technical maturity, to be screened in order to identify the most promising ones before R&D manpower and investments are allocated. When evaluating a new technology, various aspects should be considered: its feasibility, efficiency, economic competitiveness, and environmental friendliness are crucial, and its level of technical maturity is also an important consideration. However, most technology evaluation procedures are data-driven, and reliable data are often scarce in the early stages of technology development. A research team led by Professor Jay Hyung Lee from the Department of Chemical and Biomolecular Engineering at KAIST proposed a new procedure for evaluating emerging CU technologies in their early development stages that is applicable at various technology readiness levels (TRLs). The procedure obtains performance indicators in three steps: primary data preparation, secondary data calculation, and performance indicator calculation. The lead author of the study, Dr. Kosan Roh, and his colleagues also presented a number of databases, methods, and computer-aided tools that can effectively facilitate the procedure. The research team demonstrated the procedure through four case studies involving novel CU technologies of different types and at various TRLs.
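The three steps can be illustrated with a minimal sketch. It assumes that uncertain early-stage inputs are carried as simple [low, high] intervals and uses a single, hypothetical indicator (net CO2 emission per kg of product from electricity use minus CO2 fixed in the product). The variable names, the indicator, and all numbers are illustrative assumptions, not data or methods from the paper.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Interval:
    """A [low, high] range used to carry early-stage uncertainty."""
    low: float
    high: float

    def __post_init__(self):
        if self.low > self.high:
            raise ValueError("low must not exceed high")

    @property
    def width(self):
        return self.high - self.low

def mul_nonneg(a, b):
    """Product of two non-negative intervals."""
    if a.low < 0 or b.low < 0:
        raise ValueError("mul_nonneg expects non-negative intervals")
    return Interval(a.low * b.low, a.high * b.high)

def sub(a, b):
    """Interval difference a - b (worst case at each end)."""
    return Interval(a.low - b.high, a.high - b.low)

def secondary_data(primary):
    """Step 2: derive secondary data (here, indirect CO2 from electricity use)."""
    return {
        "indirect_co2_kg_per_kg": mul_nonneg(
            primary["electricity_kwh_per_kg"], primary["grid_kg_co2_per_kwh"]
        ),
    }

def performance_indicator(primary, secondary):
    """Step 3: net CO2 emission per kg of product (negative = net removal)."""
    return sub(secondary["indirect_co2_kg_per_kg"], primary["co2_fixed_kg_per_kg"])

def evaluate(primary):
    """Run steps 2 and 3 on primary data prepared in step 1."""
    return performance_indicator(primary, secondary_data(primary))

# Step 1 (hypothetical primary data): a low-TRL process has wider ranges.
LOW_TRL = {
    "electricity_kwh_per_kg": Interval(8.0, 20.0),
    "grid_kg_co2_per_kwh": Interval(0.3, 0.3),
    "co2_fixed_kg_per_kg": Interval(1.2, 1.5),
}
HIGH_TRL = {
    "electricity_kwh_per_kg": Interval(9.5, 10.5),
    "grid_kg_co2_per_kwh": Interval(0.3, 0.3),
    "co2_fixed_kg_per_kg": Interval(1.4, 1.5),
}
```

With these made-up inputs, `evaluate(LOW_TRL)` spans roughly 0.9 to 4.8 kg CO2 per kg while `evaluate(HIGH_TRL)` spans roughly 1.35 to 1.75, which mirrors the qualitative point that indicator ranges are broader for less mature technologies. Intervals are the simplest way to propagate ranges; a real assessment might instead use probability distributions and Monte Carlo sampling.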
The case studies covered electrochemical CO2 reduction for the production of ten chemicals, the co-electrolysis of CO2 and water for ethylene production, the direct oxidation of CO2-based methanol for oxymethylene dimethyl ether production, and microalgal biomass co-firing for power generation. The expected ranges of the performance indicators are broader for low-TRL technologies than for high-TRL technologies, which are already in a near-optimized state, so technologies at different TRLs cannot simply be compared at face value. The research team believes that low-TRL technologies will be significantly improved through future R&D before they are commercialized. “We plan to develop a systematic approach for such a comparison to help avoid misguided decision-making,” Professor Lee explained. Professor Lee added, “This procedure allows us to conduct a comprehensive and systematic evaluation of new technology. On top of that, it helps make efficient and reliable assessment possible.” The research team collaborated with Professor Alexander Mitsos, Professor André Bardow, and Professor Matthias Wessling at RWTH Aachen University in Germany. Their findings were reported in Green Chemistry on May 21. This work was supported by the Korea Carbon Capture and Sequestration R&D Center (KCRC). < Figure 1. Overview of the procedure for the early-stage evaluation of CU technologies > < Figure 2. Evaluation results of ten electrochemical CO2 conversion technologies > Publications: Roh, K., et al. (2020) ‘Early-stage evaluation of emerging CO2 utilization technologies at low technology readiness levels’ Green Chemistry. Available online at https://doi.org/10.1039/c9gc04440j Profile: Jay Hyung Lee, Ph.D. Professor jayhlee@kaist.ac.kr http://lense.kaist.ac.kr/ Laboratory for Energy System Engineering (LENSE) Department of Chemical and Biomolecular Engineering KAIST https://www.kaist.ac.kr Daejeon 34141, Korea (END)

-

Unravelling Complex Brain Networks with Automated ..

-Automated 3-D brain imaging data analysis technology offers more reliable and standardized analysis of the spatial organization of complex neural circuits.- KAIST researchers developed a new algorithm for brain imaging data analysis that enables the precise and quantitative mapping of complex neural circuits onto a standardized 3-D reference atlas. Brain imaging data analysis is indispensable in neuroscience research. However, the analysis of brain imaging data has relied heavily on manual processing, which cannot guarantee the accuracy, consistency, and reliability of the results. Conventional brain imaging data analysis typically begins with finding a 2-D brain atlas image that is visually similar to the experimentally obtained brain image. Then, the region of interest (ROI) of the atlas image is matched manually with the obtained image, and the number of labeled neurons in the ROI is counted. Such visual matching between experimentally obtained brain images and 2-D brain atlas images has been one of the major sources of error in brain imaging data analysis, as the process is highly subjective, sample-specific, and susceptible to human error. Manual analysis of brain images is also laborious, which makes studying the complete 3-D neuronal organization on a whole-brain scale a formidable task. To address these issues, a KAIST research team led by Professor Se-Bum Paik from the Department of Bio and Brain Engineering developed new brain imaging data analysis software named ‘AMaSiNe’ (Automated 3-D Mapping of Single Neurons) and introduced the algorithm in the May 26 issue of Cell Reports. AMaSiNe automatically detects the positions of single neurons in multiple brain images and accurately maps all the data onto a common standard 3-D reference space.
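AMaSiNe's own registration works on 3-D brain data and is described in the Cell Reports paper; the sketch below only illustrates the general idea of mapping detected neuron positions into a reference space once features have been matched. It assumes a 2-D similarity transform (rotation, uniform scale, translation) fitted by least squares to hypothetical matched point pairs. Points are treated as complex numbers, so one complex factor `a` encodes rotation and scale and `b` the translation. The function names are hypothetical, and the in-plane rotation recovered here is distinct from the out-of-plane slicing angle that AMaSiNe corrects.

```python
import cmath
import math

def fit_similarity(src, dst):
    """Least-squares fit of dst ≈ a*src + b for matched 2-D points.

    Points are (x, y) tuples treated as complex numbers; the complex factor
    `a` encodes rotation and uniform scale, `b` the translation.
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matched point pairs")
    z = [complex(x, y) for x, y in src]
    w = [complex(x, y) for x, y in dst]
    z_mean = sum(z) / len(z)
    w_mean = sum(w) / len(w)
    # Centre both point sets, then solve the 1-D complex least-squares problem.
    num = sum((zi - z_mean).conjugate() * (wi - w_mean) for zi, wi in zip(z, w))
    den = sum(abs(zi - z_mean) ** 2 for zi in z)
    if den == 0:
        raise ValueError("source points must not all coincide")
    a = num / den
    b = w_mean - a * z_mean
    return a, b

def map_points(points, a, b):
    """Apply the fitted transform, e.g. to detected neuron positions."""
    mapped = []
    for x, y in points:
        p = a * complex(x, y) + b
        mapped.append((p.real, p.imag))
    return mapped

def rotation_degrees(a):
    """In-plane rotation (degrees) encoded by the complex factor `a`."""
    return math.degrees(cmath.phase(a))
```

In use, `src` would hold feature positions in a brain slice image and `dst` the matching positions in the atlas; once `a` and `b` are fitted, `map_points` carries every detected neuron into atlas coordinates. A full pipeline would also need a 3-D model and some way to score and reject poor matches.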
The algorithm allows brain data from different animals to be compared directly by automatically matching similar features in the images and computing an image similarity score. This feature-based, quantitative image-to-image comparison improves the accuracy, consistency, and reliability of analysis results using only a small number of brain slice image samples, and helps standardize brain imaging data analyses. Unlike other existing brain imaging data analysis methods, AMaSiNe can also automatically find the alignment conditions for misaligned and distorted brain images and draw an accurate ROI without any cumbersome manual validation. AMaSiNe has also been shown to produce consistent results for brain slice images prepared with various methods, including DAPI staining, Nissl staining, and autofluorescence. The two co-lead authors of this study, Jun Ho Song and Woochul Choi, exploited these benefits of AMaSiNe to investigate the topographic organization of neurons that project to the primary visual area (VISp) in various ROIs, such as the dorsal lateral geniculate nucleus (LGd), which could hardly be addressed without proper calibration and standardization of the brain slice image samples. In collaboration with Professor Seung-Hee Lee's group in the Department of Biological Science, the researchers successfully observed the 3-D topographic neural projections to VISp from LGd, and also demonstrated that these projections could not be observed when the slicing angle was not properly corrected by AMaSiNe. The results suggest that precise correction of the slicing angle is essential for investigating complex and important brain structures. AMaSiNe is widely applicable to studies of various brain regions and other experimental conditions.
For example, in the research team’s previous study jointly conducted with Professor Yang Dan’s group at UC Berkeley, the algorithm enabled the accurate analysis of the neuronal subsets in the substantia nigra and their projections to the whole brain. Their findings were published in Science on January 24. AMaSiNe is of great interest to many neuroscientists in Korea and abroad, and is being actively used by a number of other research groups at KAIST, MIT, Harvard, Caltech, and UC San Diego. Professor Paik said, “Our new algorithm allows the spatial organization of complex neural circuits to be found in a standardized 3-D reference atlas on a whole-brain scale. This will bring brain imaging data analysis to a new level.” He continued, “More in-depth insights for understanding the function of brain circuits can be achieved by facilitating more reliable and standardized analysis of the spatial organization of neural circuits in various regions of the brain.” This work was supported by KAIST and the National Research Foundation of Korea (NRF). < Figure 1. Design of AMaSiNe to Overcome the Limits of Conventional Mouse Brain Imaging Data Analysis > < Figure 2. Localization of Brain Slice Images onto the Standard Brain Atlas > < Image. Standardized 3-D Mouse Brain > Figure and Image Credit: Professor Se-Bum Paik, KAIST Figure and Image Usage Restrictions: News organizations may use or redistribute these figures and images, with proper attribution, as part of news coverage of this paper only. Publication: Song, J. H., et al. (2020). Precise Mapping of Single Neurons by Calibrated 3D Reconstruction of Brain Slices Reveals Topographic Projection in Mouse Visual Cortex. Cell Reports. Volume 31, 107682. 
Available online at https://doi.org/10.1016/j.celrep.2020.107682 Profile: Se-Bum Paik Assistant Professor sbpaik@kaist.ac.kr http://vs.kaist.ac.kr/ VSNN Laboratory Department of Bio and Brain Engineering Program of Brain and Cognitive Engineering http://kaist.ac.kr Korea Advanced Institute of Science and Technology (KAIST) Daejeon, Republic of Korea (END)

-

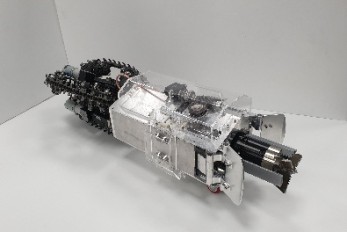

‘Mole-bot’ Optimized for Underground and Space Exp..

Biomimetic drilling robot provides new insights into the development of efficient drilling technologies < From left: Professor Hyun Myung, PhD candidate Junseok Lee, researcher Christian Tirtawardhana, and PhD candidate Hyunjun Lim > Mole-bot, a biomimetic drilling robot designed by KAIST, boasts a stout scapula, a waist that can incline in all directions, and powerful forelimbs. Its most notable feature is the powerful torque of its expandable drilling bit, which mimics the chiseling ability of a mole’s front teeth. Mole-bot is expected to be used for space exploration and for mining underground resources such as coalbed methane and rare earth elements (REE), which require highly advanced drilling technologies in complex environments. The research team, led by Professor Hyun Myung from the School of Electrical Engineering, found inspiration for their drilling robot in two striking features of the African mole-rat and the European mole. “The crushing power of the African mole-rat’s teeth is so powerful that they can dig a hole with 48 times more power than their body weight. We used this characteristic for building the main excavation tool. And its expandable drill is designed not to collide with its forelimbs,” said Professor Myung. The 25-cm-wide, 84-cm-long Mole-bot weighs 26 kg and can excavate three times faster, with six times higher directional accuracy, than conventional models. After digging, the robot removes the excavated soil and debris using its forelimbs. This embedded muscle feature, inspired by the European mole’s scapula, converts linear motion into a powerful rotational force. For directional drilling, the robot’s elongated waist can change its direction through 360°, much like that of a living mammal.
For exploring underground environments, the research team developed and applied new sensor systems and algorithms that identify the robot’s position and orientation using graph-based 3D Simultaneous Localization and Mapping (SLAM) technology that matches sequences of the Earth’s magnetic field, enabling 3D autonomous navigation underground. According to a MarketsandMarkets survey, the directional drilling market was estimated at 83.3 billion USD in 2016 and is expected to grow to 103 billion USD in 2021. The growth of the drilling market, which began with the shale revolution, is likely to extend to the future development of space and polar resources. With recent initiatives such as those by SpaceX, interest in planetary exploration is expected to rise, and the market for related technology and equipment will likely grow as well. Mole-bot is a huge step forward for efficient underground drilling and exploration technologies. Unlike conventional drilling processes, which use environmentally harmful mud compounds to clear debris, Mole-bot can mitigate environmental damage. The researchers said their system saves on cost and labor and does not require additional pipelines or other ancillary equipment. “We look forward to a more efficient resource exploration with this type of drilling robot. We also hope Mole-bot will have a very positive impact on the robotics market in terms of its extensive application spectra and economic feasibility,” said Professor Myung. This research, conducted in collaboration with Professor Jung-Wuk Hong and Professor Tae-Hyuk Kwon’s team in the Department of Civil and Environmental Engineering for robot structure analysis and geotechnical experiments, was supported by the Ministry of Trade, Industry and Energy’s Industrial Technology Innovation Project. Profile: Professor Hyun Myung Urban Robotics Lab http://urobot.kaist.ac.kr/ School of Electrical Engineering KAIST
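The Mole-bot article mentions localization by matching sequences of the Earth's magnetic field. The team's method is graph-based 3D SLAM and is not reproduced here; the sketch below only illustrates the underlying matching idea under a simplifying assumption: a 1-D reference sequence of field magnitudes recorded along a known path, and a shorter measured sequence located by minimizing the mean squared difference over all offsets. The function name and any sample values are hypothetical.

```python
def best_match_offset(reference, measured):
    """Return (offset, mse) of the window of `reference` that best matches `measured`.

    Both arguments are sequences of magnetic-field magnitudes (e.g. in microtesla).
    The offset is the index in `reference` where the best-matching window starts.
    """
    m = len(measured)
    if m == 0 or m > len(reference):
        raise ValueError("measured sequence must be non-empty and no longer than the reference")
    best = None
    for offset in range(len(reference) - m + 1):
        window = reference[offset:offset + m]
        # Mean squared difference between the stored map and the new readings.
        mse = sum((r - s) ** 2 for r, s in zip(window, measured)) / m
        if best is None or mse < best[1]:
            best = (offset, mse)
    return best
```

For instance, with a made-up reference `[48.1, 48.3, 49.0, 50.2, 49.7, 48.9, 48.4, 48.8, 49.5]` and readings `[50.25, 49.65, 48.95]`, the best match starts at offset 3. In a graph-based SLAM system, a match like this would typically become one constraint among many in a pose graph rather than the position estimate itself.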